27 Field Energy and Field Momentum

27–1 Local conservation

It is clear that the energy of matter is not conserved. When an object radiates light it loses energy. However, the energy lost is possibly describable in some other form, say in the light. Therefore the theory of the conservation of energy is incomplete without a consideration of the energy which is associated with the light or, in general, with the electromagnetic field. We take up now the law of conservation of energy and, also, of momentum for the fields. Certainly, we cannot treat one without the other, because in the relativity theory they are different aspects of the same four-vector.

2025.10.19: $Et - p\cdot x$ scalar invariant

Very early in Volume I, we discussed the conservation of energy; we said then merely that the total energy in the world is constant. Now we want to extend the idea of the energy conservation law in an important way—in a way that says something in detail about how energy is conserved. The new law will say that if energy goes away from a region, it is because it flows away through the boundaries of that region. It is a somewhat stronger law than the conservation of energy without such a restriction.

2025.10.19: radiation resistance

To see what the statement means, let’s look at how the law of the conservation of charge works. We described the conservation of charge by saying that there is a current density $\FLPj$ and a charge density $\rho$, and that when the charge decreases at some place there must be a flow of charge away from that place. We call that the conservation of charge. The mathematical form of the conservation law is \begin{equation} \label{Eq:II:27:1} \FLPdiv{\FLPj}=-\ddp{\rho}{t}. \end{equation}

2025.10.8: the key point is "discrete"

This law has the consequence that the total charge in the world is always constant; there is never any net gain or loss of charge. However, the total charge in the world could be constant in another way. Suppose that there is some charge $Q_1$ near some point $(1)$ while there is no charge near some point $(2)$ some distance away (Fig. 27–1). Now suppose that, as time goes on, the charge $Q_1$ were to gradually fade away and that simultaneously with the decrease of $Q_1$ some charge $Q_2$ would appear near point $(2)$, and in such a way that at every instant the sum of $Q_1$ and $Q_2$ was a constant. In other words, at any intermediate state the amount of charge lost by $Q_1$ would be added to $Q_2$. Then the total amount of charge in the world would be conserved. That’s a “world-wide” conservation, but not what we will call a “local” conservation, because in order for the charge to get from $(1)$ to $(2)$, it didn’t have to appear anywhere in the space between point $(1)$ and point $(2)$. Locally, the charge was just “lost.”

There is a difficulty with such a “world-wide” conservation law in the theory of relativity. The concept of “simultaneous moments” at distant points is one which is not equivalent in different systems. Two events that are simultaneous in one system are not simultaneous for another system moving past. For “world-wide” conservation of the kind described, it is necessary that the charge lost from $Q_1$ should appear simultaneously in $Q_2$. Otherwise there would be some moments when the charge was not conserved. There seems to be no way to make the law of charge conservation relativistically invariant without making it a “local” conservation law. As a matter of fact, the requirement of the Lorentz relativistic invariance seems to restrict the possible laws of nature in surprising ways. In modern quantum field theory, for example, people have often wanted to alter the theory by allowing what we call a “nonlocal” interaction, where something here has a direct effect on something there, but we get in trouble with the relativity principle.

“Local” conservation involves another idea. It says that a charge can get from one place to another only if there is something happening in the space between. To describe the law we need not only the density of charge, $\rho$, but also another kind of quantity, namely $\FLPj$, a vector giving the rate of flow of charge across a surface. Then the flow is related to the rate of change of the density by Eq. (27.1). This is the more extreme kind of a conservation law. It says that charge is conserved in a special way—conserved “locally.”

It turns out that energy conservation is also a local process. There is not only an energy density in a given region of space but also a vector to represent the rate of flow of the energy through a surface. For example, when a light source radiates, we can find the light energy moving out from the source. If we imagine some mathematical surface surrounding the light source, the energy lost from inside the surface is equal to the energy that flows out through the surface.
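The difference between "local" and "world-wide" conservation can be made concrete with a small numerical sketch. It assumes a hypothetical one-dimensional row of cells and a forward time step of Eq. (27.1); the names `step_density` and `total_charge` and all the numbers are illustrative choices, not part of the text.

```python
def step_density(rho, face_current, dx, dt):
    """Advance rho by one time step using d(rho)/dt = -d(j)/dx.

    face_current[i] is the current through the face on the left of cell i;
    face_current[-1] is the current through the right-hand outer boundary.
    """
    return [r - dt * (face_current[i + 1] - face_current[i]) / dx
            for i, r in enumerate(rho)]


def total_charge(rho, dx):
    """Total charge in the region: sum of density times cell size."""
    return sum(rho) * dx


dx, dt = 1.0, 0.1
rho = [1.0, 0.0, 0.0, 0.0, 0.5]   # hypothetical initial densities

# Closed region: nothing crosses the two outer faces, so the total is fixed
# while charge moves between neighbouring cells.
closed_current = [0.0, 0.5, 0.2, 0.0, 0.0, 0.0]
rho_closed = step_density(rho, closed_current, dx, dt)

# Open region: a current of 0.3 leaves through the right-hand boundary.
boundary_current = 0.3
open_current = [0.0, 0.5, 0.2, 0.0, 0.0, boundary_current]
rho_open = step_density(rho, open_current, dx, dt)

charge_lost = total_charge(rho, dx) - total_charge(rho_open, dx)
print(total_charge(rho_closed, dx), charge_lost, boundary_current * dt)
```

In this sketch the charge inside the region changes only by what the boundary current carries out, which is the content of the local law: a cell can lose charge only to its neighbours.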

27–2 Energy conservation and electromagnetism

We want now to write quantitatively the conservation of energy for electromagnetism. To do that, we have to describe how much energy there is in any volume element of space, and also the rate of energy flow. Suppose we think first only of the electromagnetic field energy. We will let $u$ represent the energy density in the field (that is, the amount of energy per unit volume in space) and let the vector $\FLPS$ represent the energy flux of the field (that is, the flow of energy per unit time across a unit area perpendicular to the flow). Then, in perfect analogy with the conservation of charge, Eq. (27.1), we can write the “local” law of energy conservation in the field as \begin{equation} \label{Eq:II:27:2} \ddp{u}{t}=-\FLPdiv{\FLPS}. \end{equation}

2025.10.18: conservation of qi... the same form as $c^2\mathbf{\nabla} \cdot \vec{A} +\ddp{\phi}{t}=0$

2024.7.8: starting from a well-known equation, one finds a new equation or finds its solution

Of course, this law is not true in general; it is not true that the field energy is conserved. Suppose you are in a dark room and then turn on the light switch. All of a sudden the room is full of light, so there is energy in the field, although there wasn’t any energy there before. Equation (27.2) is not the complete conservation law, because the field energy alone is not conserved, only the total energy in the world—there is also the energy of matter. The field energy will change if there is some work being done by matter on the field or by the field on matter.

However, if there is matter inside the volume of interest, we know how much energy it has: Each particle has the energy $m_0c^2/\sqrt{1-v^2/c^2}$. The total energy of the matter is just the sum of all the particle energies, and the flow of this energy through a surface is just the sum of the energy carried by each particle that crosses the surface. We want now to talk only about the energy of the electromagnetic field. So we must write an equation which says that the total field energy in a given volume decreases either because field energy flows out of the volume or because the field loses energy to matter (or gains energy, which is just a negative loss).

2024.7.9: the point is that field energy, i.e. "qi", is both absorbed by mass and emitted from it

The field energy inside a volume $V$ is \begin{equation*} \int_Vu\,dV, \end{equation*} and its rate of decrease is minus the time derivative of this integral. The flow of field energy out of the volume $V$ is the integral of the normal component of $\FLPS$ over the surface $\Sigma$ that encloses $V$, \begin{equation} \int_\Sigma\FLPS\cdot\FLPn\,da.\notag \end{equation} So \begin{equation} \label{Eq:II:27:3} -\ddt{}{t}\int_Vu\,dV=\int_\Sigma\FLPS\cdot\FLPn\,da+ (\text{work done on matter inside $V$}). \end{equation} We have seen before that the field does work on each unit volume of matter at the rate $\FLPE\cdot\FLPj$. [The force on a particle is $\FLPF=q(\FLPE+\FLPv\times\FLPB)$, and the rate of doing work is $\FLPF\cdot\FLPv=q\FLPE\cdot\FLPv$. If there are $N$ particles per unit volume, the rate of doing work per unit volume is $Nq\FLPE\cdot\FLPv$, but $Nq\FLPv=\FLPj$.] So the quantity $\FLPE\cdot\FLPj$ must equal the energy lost by the field per unit time and per unit volume. Equation (27.3) then becomes \begin{equation} \label{Eq:II:27:4} -\ddt{}{t}\int_Vu\,dV=\int_\Sigma\FLPS\cdot\FLPn\,da+ \int_V\FLPE\cdot\FLPj\,dV. \end{equation}

2024.7.8: just as action and reaction was made into a law, in order to leave, $E$ has to punch something or throw something away. Nature is give and take, zero sum!!!

7.13: light pushing momentum

This is our conservation law for energy in the field. We can convert it into a differential equation like Eq. (27.2) if we can change the second term to a volume integral. That is easy to do with Gauss’ theorem. The surface integral of the normal component of $\FLPS$ is the integral of its divergence over the volume inside. So Eq. (27.3) is equivalent to \begin{equation*} -\int_V\ddp{u}{t}\,dV=\int_V\FLPdiv{\FLPS}\,dV+ \int_V\FLPE\cdot\FLPj\,dV, \end{equation*} where we have put the time derivative of the first term inside the integral. Since this equation is true for any volume, we can take away the integrals and we have the energy equation for the electromagnetic fields: \begin{equation} \label{Eq:II:27:5} -\ddp{u}{t}=\FLPdiv{\FLPS}+\FLPE\cdot\FLPj. \end{equation}

Now this equation doesn’t do us a bit of good unless we know what $u$ and $\FLPS$ are. Perhaps we should just tell you what they are in terms of $\FLPE$ and $\FLPB$, because all we really want is the result. However, we would rather show you the kind of argument that was used by Poynting in 1884 to obtain formulas for $\FLPS$ and $u$, so you can see where they come from. (You won’t, however, need to learn this derivation for our later work.)

27–3 Energy density and energy flow in the electromagnetic field

The idea is to suppose that there is a field energy density $u$ and a flux $\FLPS$ that depend only upon the fields $\FLPE$ and $\FLPB$. (For example, we know that in electrostatics, at least, the energy density can be written $\tfrac{1}{2}\epsO\FLPE\cdot\FLPE$.) Of course, the $u$ and $\FLPS$ might depend on the potentials or something else, but let’s see what we can work out. We can try to rewrite the quantity $\FLPE\cdot\FLPj$ in such a way that it becomes the sum of two terms: one that is the time derivative of one quantity and another that is the divergence of a second quantity. The first quantity would then be $u$ and the second would be $\FLPS$ (with suitable signs). Both quantities must be written in terms of the fields only; that is, we want to write our equality as \begin{equation} \label{Eq:II:27:6} \FLPE\cdot\FLPj=-\ddp{u}{t}-\FLPdiv{\FLPS}. \end{equation}

The left-hand side must first be expressed in terms of the fields only. How can we do that? By using Maxwell’s equations, of course. From Maxwell’s equation for the curl of $\FLPB$, \begin{equation*} \FLPj=\epsO c^2\FLPcurl{\FLPB}-\epsO\,\ddp{\FLPE}{t}. \end{equation*} Substituting this in (27.6) we will have only $\FLPE$’s and $\FLPB$’s: \begin{equation} \label{Eq:II:27:7} \FLPE\cdot\FLPj=\epsO c^2\FLPE\cdot(\FLPcurl{\FLPB})- \epsO\FLPE\cdot\ddp{\FLPE}{t}. \end{equation} We are already partly finished. The last term is a time derivative: apart from its minus sign, it is $(\ddpl{}{t})(\tfrac{1}{2}\epsO\FLPE\cdot\FLPE)$. So $\tfrac{1}{2}\epsO\FLPE\cdot\FLPE$ is at least one part of $u$. It’s the same thing we found in electrostatics. Now, all we have to do is to make the other term into the divergence of something.

Notice that the first term on the right-hand side of (27.7) is the same as \begin{equation} \label{Eq:II:27:8} (\FLPcurl{\FLPB})\cdot\FLPE. \end{equation} And, as you know from vector algebra, $(\FLPa\times\FLPb)\cdot\FLPc$ is the same as $\FLPa\cdot(\FLPb\times\FLPc)$; so our term is also the same as \begin{equation} \label{Eq:II:27:9} \FLPdiv{(\FLPB\times\FLPE)} \end{equation} and we have the divergence of “something,” just as we wanted. Only that’s wrong! We warned you before that $\FLPnabla$ is “like” a vector, but not “exactly” the same. The reason it is not is because there is an additional convention from calculus: when a derivative operator is in front of a product, it works on everything to the right. In Eq. (27.7), the $\FLPnabla$ operates only on $\FLPB$, not on $\FLPE$. But in the form (27.9), the normal convention would say that $\FLPnabla$ operates on both $\FLPB$ and $\FLPE$. So it’s not the same thing. In fact, if we work out the components of $\FLPdiv{(\FLPB\times\FLPE)}$ we can see that it is equal to $\FLPE\cdot(\FLPcurl{\FLPB})$ plus some other terms. It’s like what happens when we take a derivative of a product in algebra. For instance, \begin{equation*} \ddt{}{x}(fg)=\ddt{f}{x}\,g+f\,\ddt{g}{x}. \end{equation*}

Rather than working out all the components of $\FLPdiv{(\FLPB\times\FLPE)}$, we would like to show you a trick that is very useful for this kind of problem. It is a trick that allows you to use all the rules of vector algebra on expressions with the $\FLPnabla$ operator, without getting into trouble. The trick is to throw out—for a while at least—the rule of the calculus notation about what the derivative operator works on. You see, ordinarily, the order of terms is used for two separate purposes. One is for calculus: $f(d/dx)g$ is not the same as $g(d/dx)f$; and the other is for vectors: $\FLPa\times\FLPb$ is different from $\FLPb\times\FLPa$. We can, if we want, choose to abandon momentarily the calculus rule. Instead of saying that a derivative operates on everything to the right, we make a new rule that doesn’t depend on the order in which terms are written down. Then we can juggle terms around without worrying.

Here is our new convention: we show, by a subscript, what a differential operator works on; the order has no meaning. Suppose we let the operator $D$ stand for $\ddpl{}{x}$. Then $D_f$ means that only the derivative of the variable quantity $f$ is taken. Then \begin{equation*} D_ff=\ddp{f}{x}. \end{equation*} But if we have $D_ffg$, it means \begin{equation*} D_ffg=\biggl(\ddp{f}{x}\biggr)g. \end{equation*} But notice now that according to our new rule, $fD_fg$ means the same thing. We can write the same thing any which way: \begin{equation*} D_ffg=gD_ff=fD_fg=fgD_f. \end{equation*} You see, the $D_f$ can even come after everything. (It’s surprising that such a handy notation is never taught in books on mathematics or physics.)

You may wonder: What if I want to write the derivative of $fg$? I want the derivative of both terms. That’s easy, you just say so; you write $D_f(fg)+D_g(fg)$. That is just $g(\ddpl{f}{x})+f(\ddpl{g}{x})$, which is what you mean in the old notation by $\ddpl{(fg)}{x}$.

You will see that it is now going to be very easy to work out a new expression for $\FLPdiv{(\FLPB\times\FLPE)}$. We start by changing to the new notation; we write \begin{equation} \label{Eq:II:27:10} \FLPdiv{(\FLPB\times\FLPE)}=\FLPnabla_B\cdot(\FLPB\times\FLPE)+ \FLPnabla_E\cdot(\FLPB\times\FLPE). \end{equation} The moment we do that we don’t have to keep the order straight any more. We always know that $\FLPnabla_E$ operates on $\FLPE$ only, and $\FLPnabla_B$ operates on $\FLPB$ only. In these circumstances, we can use $\FLPnabla$ as though it were an ordinary vector. (Of course, when we are finished, we will want to return to the “standard” notation that everybody usually uses.) So now we can do the various things like interchanging dots and crosses and making other kinds of rearrangements of the terms. For instance, the middle term of Eq. (27.10) can be rewritten as $\FLPE\cdot\FLPnabla_B\times\FLPB$. (You remember that $\FLPa\cdot\FLPb\times\FLPc=\FLPb\cdot\FLPc\times\FLPa$.) And the last term is the same as $\FLPB\cdot\FLPE\times\FLPnabla_E$. It looks freakish, but it is all right. Now if we try to go back to the ordinary convention, we have to arrange that the $\FLPnabla$ operates only on its “own” variable. The first one is already that way, so we can just leave off the subscript. The second one needs some rearranging to put the $\FLPnabla$ in front of the $\FLPE$, which we can do by reversing the cross product and changing sign: \begin{equation*} \FLPB\cdot(\FLPE\times\FLPnabla_E)= -\FLPB\cdot(\FLPnabla_E\times\FLPE). \end{equation*} Now it is in a conventional order, so we can return to the usual notation. Equation (27.10) is equivalent to \begin{equation} \label{Eq:II:27:11} \FLPdiv{(\FLPB\times\FLPE)}= \FLPE\cdot(\FLPcurl{\FLPB})-\FLPB\cdot(\FLPcurl{\FLPE}). \end{equation} (A quicker way would have been to use components in this special case, but it was worth taking the time to show you the mathematical trick. 
You probably won’t see it anywhere else, and it is very good for unlocking vector algebra from the rules about the order of terms with derivatives.)
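For readers who want reassurance that Eq. (27.11) holds with the signs as written, here is a rough numerical spot check. It is only a sketch: the two test fields `B_field` and `E_field` are arbitrary smooth functions invented for the purpose (they are not physical fields), and the derivatives are central finite differences, so agreement is expected only up to a small numerical error.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def partial(field, point, axis, h=1e-5):
    """Central-difference derivative of a vector field along one axis."""
    plus, minus = list(point), list(point)
    plus[axis] += h
    minus[axis] -= h
    return tuple((p - m) / (2 * h)
                 for p, m in zip(field(*plus), field(*minus)))


def divergence(field, point):
    return sum(partial(field, point, axis)[axis] for axis in range(3))


def curl(field, point):
    dx, dy, dz = (partial(field, point, axis) for axis in range(3))
    return (dy[2] - dz[1], dz[0] - dx[2], dx[1] - dy[0])


# Arbitrary smooth test fields (hypothetical, chosen only for the check).
def B_field(x, y, z):
    return (math.sin(y) * z, x * x * z, math.cos(x) + y)


def E_field(x, y, z):
    return (y * z, math.exp(0.3 * x) * y, x * math.sin(z))


def B_cross_E(x, y, z):
    return cross(B_field(x, y, z), E_field(x, y, z))


point = (0.4, -0.7, 1.1)
lhs = divergence(B_cross_E, point)
rhs = (dot(E_field(*point), curl(B_field, point))
       - dot(B_field(*point), curl(E_field, point)))
print(lhs, rhs)
```

The two printed numbers should agree to within the finite-difference error. Swapping the sign of the second term in `rhs` makes the mismatch obvious, which is a quick way to see why the order of the cross product matters.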

We now return to our energy conservation discussion and use our new result, Eq. (27.11), to transform the $\FLPcurl{\FLPB}$ term of Eq. (27.7). That energy equation becomes \begin{equation} \label{Eq:II:27:12} \FLPE\cdot\FLPj=\epsO c^2\FLPdiv{(\FLPB\times\FLPE)}+\epsO c^2 \FLPB\cdot(\FLPcurl{\FLPE})-\ddp{}{t}(\tfrac{1}{2}\epsO \FLPE\cdot\FLPE). \end{equation} Now you see, we’re almost finished. We have one term which is a nice derivative with respect to $t$ to use for $u$ and another that is a beautiful divergence to represent $\FLPS$. Unfortunately, there is the center term left over, which is neither a divergence nor a derivative with respect to $t$. So we almost made it, but not quite. After some thought, we look back at the differential equations of Maxwell and discover that $\FLPcurl{\FLPE}$ is, fortunately, equal to $-\ddpl{\FLPB}{t}$, which means that we can turn the extra term into something that is a pure time derivative: \begin{equation*} \FLPB\cdot(\FLPcurl{\FLPE})=\FLPB\cdot\biggl( -\ddp{\FLPB}{t}\biggr)=-\ddp{}{t}\biggl( \frac{\FLPB\cdot\FLPB}{2}\biggr). \end{equation*} Now we have exactly what we want. Our energy equation reads \begin{equation} \label{Eq:II:27:13} \FLPE\cdot\FLPj=\FLPdiv{(\epsO c^2\FLPB\times\FLPE)}- \ddp{}{t}\biggl(\frac{\epsO c^2}{2}\,\FLPB\cdot\FLPB+ \frac{\epsO}{2}\,\FLPE\cdot\FLPE\biggr), \end{equation} which is exactly like Eq. (27.6), if we make the definitions \begin{equation} \label{Eq:II:27:14} u=\frac{\epsO}{2}\,\FLPE\cdot\FLPE+ \frac{\epsO c^2}{2}\,\FLPB\cdot\FLPB \end{equation} and \begin{equation} \label{Eq:II:27:15} \FLPS=\epsO c^2\FLPE\times\FLPB. \end{equation} (Reversing the cross product makes the signs come out right.)

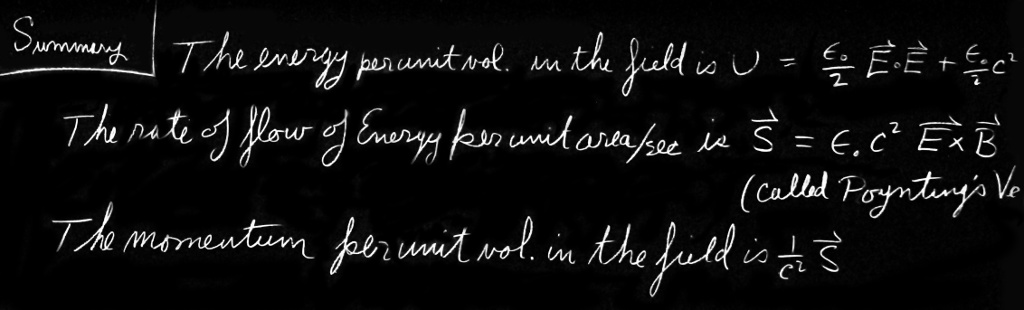

Our program was successful. We have an expression for the energy density that is the sum of an “electric” energy density and a “magnetic” energy density, whose forms are just like the ones we found in statics when we worked out the energy in terms of the fields. Also, we have found a formula for the energy flow vector of the electromagnetic field. This new vector, $\FLPS=\epsO c^2\FLPE\times\FLPB$, is called “Poynting’s vector,” after its discoverer. It tells us the rate at which the field energy moves around in space. The energy which flows through a small area $da$ per second is $\FLPS\cdot\FLPn\,da$, where $\FLPn$ is the unit vector perpendicular to $da$. (Now that we have our formulas for $u$ and $\FLPS$, you can forget the derivations if you want.)
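As a small illustration of how Eqs. (27.14) and (27.15) are used, the following sketch evaluates $u$ and $\FLPS$ for given field vectors in SI units. The function names and the sample field values are hypothetical; the constants are the standard vacuum permittivity and speed of light.

```python
EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m
C = 299792458.0          # speed of light, m/s


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def energy_density(E, B):
    """Eq. (27.14): u = (eps0/2) E.E + (eps0 c^2/2) B.B, in J/m^3."""
    return 0.5 * EPS0 * dot(E, E) + 0.5 * EPS0 * C * C * dot(B, B)


def poynting(E, B):
    """Eq. (27.15): S = eps0 c^2 E x B, in W/m^2."""
    return tuple(EPS0 * C * C * s for s in cross(E, B))


# Hypothetical sample fields: E in V/m, B in tesla, not perpendicular.
E = (100.0, 0.0, 0.0)
B = (1.0e-7, 2.0e-7, 0.0)

u = energy_density(E, B)
S = poynting(E, B)
print(u, S)
```

Only the part of $\FLPB$ perpendicular to $\FLPE$ contributes to the flow, and the resulting $\FLPS$ is perpendicular to both fields; in this example it points along $z$.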

27–4 The ambiguity of the field energy

Before we take up some applications of the Poynting formulas [Eqs. (27.14) and (27.15)], we would like to say that we have not really “proved” them. All we did was to find a possible “$u$” and a possible “$\FLPS$.” How do we know that by juggling the terms around some more we couldn’t find another formula for “$u$” and another formula for “$\FLPS$”? The new $\FLPS$ and the new $u$ would be different, but they would still satisfy Eq. (27.6). It’s possible. It can be done, but the forms that have been found always involve various derivatives of the field (and always with second-order terms like a second derivative or the square of a first derivative). There are, in fact, an infinite number of different possibilities for $u$ and $\FLPS$, and so far no one has thought of an experimental way to tell which one is right! People have guessed that the simplest one is probably the correct one, but we must say that we do not know for certain what is the actual location in space of the electromagnetic field energy. So we too will take the easy way out and say that the field energy is given by Eq. (27.14). Then the flow vector $\FLPS$ must be given by Eq. (27.15).

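2025.10.5: energy is a clump of qi represented by vectors, and the flow goes in the direction in which they clump, i.e. the direction of their vector addition

the collective of the qi vectors $E, B$: the flow of light energy

10.6: Feynman built up qi, an inner mastery, in the same vein as Maxwell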

It is interesting that there seems to be no unique way to resolve the indefiniteness in the location of the field energy. It is sometimes claimed that this problem can be resolved by using the theory of gravitation in the following argument. In the theory of gravity, all energy is the source of gravitational attraction. Therefore the energy density of electricity must be located properly if we are to know in which direction the gravity force acts. As yet, however, no one has done such a delicate experiment that the precise location of the gravitational influence of electromagnetic fields could be determined. That electromagnetic fields alone can be the source of gravitational force is an idea it is hard to do without. It has, in fact, been observed that light is deflected as it passes near the sun—we could say that the sun pulls the light down toward it. Do you not want to allow that the light pulls equally on the sun? Anyway, everyone always accepts the simple expressions we have found for the location of electromagnetic energy and its flow. And although sometimes the results obtained from using them seem strange, nobody has ever found anything wrong with them—that is, no disagreement with experiment. So we will follow the rest of the world—besides, we believe that it is probably perfectly right.

We should make one further remark about the energy formula. In the first place, the energy per unit volume in the field is very simple: It is the electrostatic energy plus the magnetic energy, if we write the electrostatic energy in terms of $E^2$ and the magnetic energy as $B^2$. We found two such expressions as possible expressions for the energy when we were doing static problems. We also found a number of other formulas for the energy in the electrostatic field, such as $\tfrac{1}{2}\rho\phi$, whose volume integral equals that of $\tfrac{1}{2}\epsO\FLPE\cdot\FLPE$ in the electrostatic case. However, in an electrodynamic field the equality failed, and there was no obvious choice as to which was the right one. Now we know which is the right one. Similarly, we have found the formula for the magnetic energy that is correct in general. The right formula for the energy density of dynamic fields is Eq. (27.14).

27–5 Examples of energy flow

Our formula for the energy flow vector $\FLPS$ is something quite new. We want now to see how it works in some special cases and also to see whether it checks out with anything that we knew before. The first example we will take is light. In a light wave we have an $\FLPE$ vector and a $\FLPB$ vector at right angles to each other and to the direction of the wave propagation. (See Fig. 27–2.) In an electromagnetic wave, the magnitude of $\FLPB$ is equal to $1/c$ times the magnitude of $\FLPE$, and since they are at right angles, \begin{equation*} \abs{\FLPE\times\FLPB}=\frac{E^2}{c}. \end{equation*} Therefore, for light, the flow of energy per unit area per second is \begin{equation} \label{Eq:II:27:16} S=\epsO cE^2. \end{equation} For a light wave in which $E=E_0\cos\omega(t-x/c)$, the average rate of energy flow per unit area, $\av{S}$—which is called the “intensity” of the light—is the mean value of the square of the electric field times $\epsO c$: \begin{equation} \label{Eq:II:27:17} \text{Intensity} = \av{S} = \epsO c\av{E^2}. \end{equation}

Believe it or not, we have already derived this result in Section 31–5 of Vol. I, when we were studying light. We can believe that it is right because it also checks against something else. When we have a light beam, there is an energy density in space given by Eq. (27.14). Using $cB=E$ for a light wave, we get that \begin{equation*} u=\frac{\epsO}{2}\,E^2+\frac{\epsO c^2}{2}\biggl( \frac{E^2}{c^2}\biggr)=\epsO E^2. \end{equation*} But $\FLPE$ varies in space, so the average energy density is \begin{equation} \label{Eq:II:27:18} \av{u} = \epsO\av{E^2}. \end{equation} Now the wave travels at the speed $c$, so we should think that the energy that goes through a square meter in a second is $c$ times the amount of energy in one cubic meter. So we would say that \begin{equation*} \av{S} = \epsO c\av{E^2}. \end{equation*} And it’s right; it is the same as Eq. (27.17).
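The consistency between Eqs. (27.17) and (27.18) can also be checked numerically. The sketch below samples the wave $E=E_0\cos\omega(t-x/c)$ over one period at a fixed point, sets $B=E/c$, and averages the general expressions for $u$ and $S$. The amplitude, frequency, and sample count are hypothetical values chosen for illustration.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m
C = 299792458.0          # speed of light, m/s


def wave_averages(E0, omega, x=0.0, samples=1000):
    """Time-average u and S over one period of a plane light wave."""
    period = 2.0 * math.pi / omega
    u_sum = 0.0
    S_sum = 0.0
    for k in range(samples):
        t = k * period / samples
        E = E0 * math.cos(omega * (t - x / C))
        B = E / C                                   # light wave: cB = E
        u_sum += 0.5 * EPS0 * E * E + 0.5 * EPS0 * C * C * B * B
        S_sum += EPS0 * C * C * E * B               # E and B perpendicular
    return u_sum / samples, S_sum / samples


E0 = 100.0                      # hypothetical amplitude, V/m
omega = 2.0 * math.pi * 5.0e14  # hypothetical visible-light frequency, rad/s

u_avg, S_avg = wave_averages(E0, omega)
print(u_avg, S_avg, C * u_avg, 0.5 * EPS0 * C * E0 ** 2)
```

Since the mean of $\cos^2$ over a period is $\tfrac{1}{2}$, the intensity comes out as $\tfrac{1}{2}\epsO cE_0^2$, and it equals $c$ times the average energy density, as the argument in the text requires.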

Now we take another example. Here is a rather curious one. We look at the energy flow in a capacitor that we are charging slowly. (We don’t want frequencies so high that the capacitor is beginning to look like a resonant cavity, but we don’t want dc either.) Suppose we use a circular parallel plate capacitor of our usual kind, as shown in Fig. 27–3. There is a nearly uniform electric field inside which is changing with time. At any instant the total electromagnetic energy inside is $u$ times the volume. If the plates have a radius $a$ and a separation $h$, the total energy between the plates is \begin{equation} \label{Eq:II:27:19} U=\biggl(\frac{\epsO}{2}\,E^2\biggr)(\pi a^2h). \end{equation} This energy changes when $E$ changes. While the capacitor is being charged, the volume between the plates receives energy at the rate \begin{equation} \label{Eq:II:27:20} \ddt{U}{t}=\epsO\pi a^2h\,E\dot{E}. \end{equation}

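* Since $u$ is the energy density, multiplying by the volume gives the total energy, $U=(u=\frac{\epsO}{2}\,E^2)\times(\text{disk area}=\pi a^2)\times(\text{height}=h)$. But why is there no magnetic field energy? Is there none inside? Looking below, only the magnetic field at the outermost edge is considered.

2021.8.20: Because, as assumed above, with low-frequency alternating current the magnetic field becomes negligibly small.

In Eq. 23.5, $B=\frac{i\omega r}{2c^2}\,E_0e^{i\omega t}$, if $\omega=0$ then $B=0$. That is why, at high frequency, the electric field induced by the changing magnetic field is added iteratively; since that is not the case here, the electric field is even said to be nearly uniform, which amounts to assuming that the internal magnetic field is zero. A supplementary remark.

And what Feynman wants to show here is that the energy is not coming down the wires; it is coming in through the edges of the gap. The reason I am looking into this now is also the question of how energy is transmitted.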

You remember, of course, that there is a magnetic field that circles around the axis when the capacitor is charging. We discussed that in Chapter 23. Using the last of Maxwell’s equations, we found that the magnetic field at the edge of the capacitor is given by \begin{equation*} 2\pi ac^2B=\dot{E}\cdot\pi a^2, \end{equation*} or \begin{equation*} B=\frac{a}{2c^2}\,\dot{E}. \end{equation*} Its direction is shown in Fig. 27–3. So there is an energy flow proportional to $\FLPE\times\FLPB$ that comes in all around the edges, as shown in the figure. The energy isn’t actually coming down the wires, but from the space surrounding the capacitor.

Let’s check whether or not the total amount of flow through the whole surface between the edges of the plates checks with the rate of change of the energy inside—it had better; we went through all that work proving Eq. (27.15) to make sure, but let’s see. The area of the surface is $2\pi ah$, and $\FLPS=\epsO c^2\FLPE\times\FLPB$ is in magnitude \begin{equation*} \epsO c^2E\biggl(\frac{a}{2c^2}\,\dot{E}\biggr), \end{equation*} so the total flux of energy is \begin{equation*} \pi a^2h\epsO E\dot{E}. \end{equation*} It does check with Eq. (27.20). But it tells us a peculiar thing: that when we are charging a capacitor, the energy is not coming down the wires; it is coming in through the edges of the gap. That’s what this theory says!
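The bookkeeping in this check is easy to reproduce numerically. The sketch below compares the Poynting flux through the rim of the gap with the time derivative of the stored energy of Eq. (27.19), estimated by a finite difference. The plate dimensions and the linear ramp of the field are hypothetical values; the function names are illustrative.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m
C = 299792458.0          # speed of light, m/s


def stored_energy(a, h, E):
    """Eq. (27.19): electric energy between plates of radius a and gap h."""
    return 0.5 * EPS0 * E * E * math.pi * a * a * h


def rim_inflow(a, h, E, E_dot):
    """Poynting flux through the cylindrical surface of area 2*pi*a*h."""
    B = a * E_dot / (2.0 * C * C)   # magnetic field at the edge of the gap
    S = EPS0 * C * C * E * B        # |E x B| = E*B since E is perpendicular to B
    return S * 2.0 * math.pi * a * h


a, h = 0.1, 1.0e-3     # hypothetical plate radius and separation, metres
ramp = 1.0e6           # hypothetical constant dE/dt, (V/m) per second
t, dt = 1.0e-3, 1.0e-6


def field(time):
    """Electric field rising linearly in time."""
    return ramp * time


dU_dt = (stored_energy(a, h, field(t + dt))
         - stored_energy(a, h, field(t - dt))) / (2.0 * dt)
inflow = rim_inflow(a, h, field(t), ramp)
print(dU_dt, inflow)
```

The factors of $c^2$ cancel between $\FLPS$ and $B$, so the inflow reduces to $\epsO\pi a^2h\,E\dot{E}$, the same expression obtained by differentiating $U$.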

How can that be? That’s not an easy question, but here is one way of thinking about it. Suppose that we had some charges above and below the capacitor and far away. When the charges are far away, there is a weak but enormously spread-out field that surrounds the capacitor. (See Fig. 27–4.) Then, as the charges come together, the field gets stronger nearer to the capacitor. So the field energy which is way out moves toward the capacitor and eventually ends up between the plates.

As another example, we ask what happens in a piece of resistance wire when it is carrying a current. Since the wire has resistance, there is an electric field along it, driving the current. Because there is a potential drop along the wire, there is also an electric field just outside the wire, parallel to the surface. (See Fig. 27–5.) There is, in addition, a magnetic field which goes around the wire because of the current. The $\FLPE$ and $\FLPB$ are at right angles; therefore there is a Poynting vector directed radially inward, as shown in the figure. There is a flow of energy into the wire all around. It is, of course, equal to the energy being lost in the wire in the form of heat. So our “crazy” theory says that the electrons are getting their energy to generate heat because of the energy flowing into the wire from the field outside. Intuition would seem to tell us that the electrons get their energy from being pushed along the wire, so the energy should be flowing down (or up) along the wire. But the theory says that the electrons are really being pushed by an electric field, which has come from some charges very far away, and that the electrons get their energy for generating heat from these fields. The energy somehow flows from the distant charges into a wide area of space and then inward to the wire.
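A quick calculation shows that the inward flow really does equal the heating rate. The sketch assumes an idealized long straight wire of radius $a$ and length $L$ with a uniform field $E=V/L$ along its surface, and uses the field of a straight current, $B=I/(2\pi\epsO c^2a)$, at the surface. The function name and the numbers are hypothetical.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m
C = 299792458.0          # speed of light, m/s


def power_into_wire(voltage, current, radius, length):
    """Total Poynting flux entering the wire through its curved surface."""
    E = voltage / length                                     # along the wire
    B = current / (2.0 * math.pi * EPS0 * C * C * radius)    # circles the wire
    S = EPS0 * C * C * E * B                                 # radially inward
    return S * 2.0 * math.pi * radius * length


# Hypothetical example: 1.5 V across 0.3 m of wire carrying 2 A.
V, I = 1.5, 2.0
print(power_into_wire(V, I, 5.0e-4, 0.3), V * I)
```

Under these assumptions the radius and the length drop out and the flux is just $VI$, the familiar rate of Joule heating.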

Finally, in order to really convince you that this theory is obviously nuts, we will take one more example—an example in which an electric charge and a magnet are at rest near each other—both sitting quite still. Suppose we take the example of a point charge sitting near the center of a bar magnet, as shown in Fig. 27–6. Everything is at rest, so the energy is not changing with time. Also, $\FLPE$ and $\FLPB$ are quite static. But the Poynting vector says that there is a flow of energy, because there is an $\FLPE\times\FLPB$ that is not zero. If you look at the energy flow, you find that it just circulates around and around. There isn’t any change in the energy anywhere—everything which flows into one volume flows out again. It is like incompressible water flowing around. So there is a circulation of energy in this so-called static condition. How absurd it gets!
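The claim that the static flow only circulates can be spot-checked numerically: where there are no charges or currents, $\FLPE\times\FLPB$ should be nonzero while its divergence vanishes. The sketch below is a rough illustration that replaces the bar magnet by an ideal point dipole and drops all constant factors; these simplifications, the positions, and the function names are assumptions made for the example.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def E_charge(x, y, z):
    """Coulomb field of a unit point charge at (0.5, 0, 0), constants dropped."""
    d = (x - 0.5, y, z)
    r3 = dot(d, d) ** 1.5
    return tuple(c / r3 for c in d)


def B_dipole(x, y, z):
    """Field of a unit dipole along z at the origin, constants dropped."""
    r = (x, y, z)
    r2 = dot(r, r)
    m = (0.0, 0.0, 1.0)
    m_dot_r = dot(m, r)
    return tuple(3.0 * m_dot_r * r[i] / r2 ** 2.5 - m[i] / r2 ** 1.5
                 for i in range(3))


def flow(x, y, z):
    """Direction and relative size of the Poynting vector, E x B."""
    return cross(E_charge(x, y, z), B_dipole(x, y, z))


def divergence(field, point, h=1e-5):
    """Central-difference divergence of a vector field at a point."""
    total = 0.0
    for axis in range(3):
        plus, minus = list(point), list(point)
        plus[axis] += h
        minus[axis] -= h
        total += (field(*plus)[axis] - field(*minus)[axis]) / (2.0 * h)
    return total


point = (0.3, 0.4, 0.2)   # away from both the charge and the dipole
S_here = flow(*point)
magnitude = math.sqrt(dot(S_here, S_here))
div_S = divergence(flow, point)
print(magnitude, div_S)
```

The divergence comes out zero to within the finite-difference error even though the flow itself is not zero, which is the numerical counterpart of "everything which flows into one volume flows out again."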

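2025.10.17: hologram world, steady energy direction

2024.7.11: No, not absurd

Looking at whirlwinds and typhoons, energy is not some crystallized object but motions accumulating toward some fixed point, i.e. zeros of a vector field

something even Feynman has no choice but to admit

7.14: for more, qi flow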

Perhaps it isn’t so terribly puzzling, though, when you remember that what we called a “static” magnet is really a circulating permanent current. In a permanent magnet the electrons are spinning permanently inside. So maybe a circulation of the energy outside isn’t so queer after all.

You no doubt begin to get the impression that the Poynting theory at least partially violates your intuition as to where energy is located in an electromagnetic field. You might believe that you must revamp all your intuitions, and, therefore have a lot of things to study here. But it seems really not necessary. You don’t need to feel that you will be in great trouble if you forget once in a while that the energy in a wire is flowing into the wire from the outside, rather than along the wire. It seems to be only rarely of value, when using the idea of energy conservation, to notice in detail what path the energy is taking. The circulation of energy around a magnet and a charge seems, in most circumstances, to be quite unimportant. It is not a vital detail, but it is clear that our ordinary intuitions are quite wrong.

27–6 Field momentum

Next we would like to talk about the momentum in the electromagnetic field. Just as the field has energy, it will have a certain momentum per unit volume. Let us call that momentum density $\FLPg$. Of course, momentum has various possible directions, so that $\FLPg$ must be a vector. Let’s talk about one component at a time; first, we take the $x$-component. Since each component of momentum is conserved we should be able to write down a law that looks something like this: \begin{equation*} -\ddp{}{t} \begin{pmatrix} \text{momentum}\\ \text{of matter} \end{pmatrix} _x\!\!=\ddp{g_x}{t}+ \begin{pmatrix} \text{momentum}\\ \text{outflow} \end{pmatrix} _x. \end{equation*} The left side is easy. The rate-of-change of the momentum of matter is just the force on it. For a particle, it is $\FLPF=q(\FLPE+\FLPv\times\FLPB)$; for a distribution of charges, the force per unit volume is $(\rho\FLPE+\FLPj\times\FLPB)$. The “momentum outflow” term, however, is strange. It cannot be the divergence of a vector because it is not a scalar; it is, rather, an $x$-component of some vector. Anyway, it should probably look something like \begin{equation*} \ddp{a}{x}+\ddp{b}{y}+\ddp{c}{z}, \end{equation*} because the $x$-momentum could be flowing in any one of the three directions. In any case, whatever $a$, $b$, and $c$ are, the combination is supposed to equal the outflow of the $x$-momentum.

Now the game would be to write $\rho\FLPE+\FLPj\times\FLPB$ in terms only of $\FLPE$ and $\FLPB$—eliminating $\rho$ and $\FLPj$ by using Maxwell’s equations—and then to juggle terms and make substitutions to get it into a form that looks like \begin{equation*} \ddp{g_x}{t}+\ddp{a}{x}+\ddp{b}{y}+\ddp{c}{z}. \end{equation*} Then, by identifying terms, we would have expressions for $g_x$, $a$, $b$, and $c$. It’s a lot of work, and we are not going to do it. Instead, we are only going to find an expression for $\FLPg$, the momentum density—and by a different route.

There is an important theorem in mechanics which is this: whenever there is a flow of energy in any circumstance at all (field energy or any other kind of energy), the energy flowing through a unit area per unit time, when multiplied by $1/c^2$, is equal to the momentum per unit volume in the space. In the special case of electrodynamics, this theorem gives the result that $\FLPg$ is $1/c^2$ times the Poynting vector: \begin{equation} \label{Eq:II:27:21} \FLPg=\frac{1}{c^2}\,\FLPS. \end{equation} So the Poynting vector gives not only energy flow but, if you divide by $c^2$, also the momentum density. The same result would come out of the other analysis we suggested, but it is more interesting to notice this more general result. We will now give a number of interesting examples and arguments to convince you that the general theorem is true.
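As a reference point for the “other analysis,” here is a sketch of where the juggling is known to end up. It is quoted from the standard treatment rather than derived in this chapter, and the identification of $a$, $b$, and $c$ with field components is an addition to the text. Using Maxwell’s equations to eliminate $\rho$ and $\FLPj$, the $x$-component of the force density can be put in the form \begin{equation*} -(\rho\FLPE+\FLPj\times\FLPB)_x=\ddp{}{t}\bigl[\epsilon_0(\FLPE\times\FLPB)_x\bigr]+\ddp{a}{x}+\ddp{b}{y}+\ddp{c}{z}, \end{equation*} with \begin{equation*} \begin{aligned} a&=\tfrac{\epsilon_0}{2}(E^2+c^2B^2)-\epsilon_0(E_x^2+c^2B_x^2),\\ b&=-\epsilon_0(E_xE_y+c^2B_xB_y),\\ c&=-\epsilon_0(E_xE_z+c^2B_xB_z). \end{aligned} \end{equation*} Reading off the time-derivative term gives $g_x=\epsilon_0(\FLPE\times\FLPB)_x$, which is $1/c^2$ times the $x$-component of the Poynting vector $\FLPS=\epsilon_0c^2\,\FLPE\times\FLPB$, in agreement with Eq. (27.21).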

2024.7.14: Only the notation differs: $\frac{E}{c^2}=m$, which is momentum density = mass. Entropy $S=\frac QT$

2025.10.7: energy

First example: Suppose that we have a lot of particles in a box—let’s say $N$ per cubic meter—and that they are moving along with some velocity $\FLPv$. Now let’s consider an imaginary plane surface perpendicular to $\FLPv$. The energy flow through a unit area of this surface per second is equal to $Nv$, the number which flow through the surface per second, times the energy carried by each one. The energy in each particle is $m_0c^2/\sqrt{1-v^2/c^2}$. So the energy flow per second is \begin{equation*} Nv\,\frac{m_0c^2}{\sqrt{1-v^2/c^2}}. \end{equation*} But the momentum of each particle is $m_0v/\sqrt{1-v^2/c^2}$, so the density of momentum is \begin{equation*} N\,\frac{m_0v}{\sqrt{1-v^2/c^2}}, \end{equation*} which is just $1/c^2$ times the energy flow—as the theorem says. So the theorem is true for a bunch of particles.

It is also true for light. When we studied light in Volume I, we saw that when the energy is absorbed from a light beam, a certain amount of momentum is delivered to the absorber. We have, in fact, shown in Chapter 34 of Vol. I that the momentum is $1/c$ times the energy absorbed [Eq. (34.24) of Vol. I]. If we let $\FLPU$ be the energy arriving at a unit area per second, then the momentum arriving at a unit area per second is $\FLPU/c$. But the momentum is travelling at the speed $c$, so its density in front of the absorber must be $\FLPU/c^2$. So again the theorem is right.
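To get a feeling for the sizes involved, here is a rough numerical illustration. The intensity is an assumed round figure, about the strength of direct sunlight at the earth, and is not a number taken from the text. With $|\FLPU|\approx1.4\times10^{3}$ W/m$^2$ and $c\approx3\times10^{8}$ m/s, \begin{equation*} \frac{|\FLPU|}{c}\approx4.7\times10^{-6}\text{ N/m}^2,\qquad \frac{|\FLPU|}{c^2}\approx1.6\times10^{-14}\text{ kg}\cdot\text{m/s per m}^3. \end{equation*} The first number is the momentum delivered per second to each square meter of a perfect absorber, that is, the pressure on it; the second is the momentum density in the beam.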

Finally we will give an argument due to Einstein which demonstrates the same thing once more. Suppose that we have a railroad car on wheels (assumed frictionless) with a certain big mass $M$. At one end there is a device which will shoot out some particles or light (or anything, it doesn’t make any difference what it is), which are then stopped at the opposite end of the car. There was some energy originally at one end—say the energy $U$ indicated in Fig. 27–7(a)—and then later it is at the opposite end, as shown in Fig. 27–7(c). The energy $U$ has been displaced the distance $L$, the length of the car. Now the energy $U$ has the mass $U/c^2$, so if the car stayed still, the center of gravity of the car would be moved. Einstein didn’t like the idea that the center of gravity of an object could be moved by fooling around only on the inside, so he assumed that it is impossible to move the center of gravity by doing anything inside. But if that is the case, when we moved the energy $U$ from one end to the other, the whole car must have recoiled some distance $x$, as shown in part (c) of the figure.

2025.10.17: recoil is reaction ... something has to be given and thrown back out, as long as no qi (기) is brought in from outside; a law of conservation of structure

You can see, in fact, that the total mass of the car, times $x$, must equal the mass of the energy moved, $U/c^2$ times $L$ (assuming that $U/c^2$ is much less than $M$): \begin{equation} \label{Eq:II:27:22} Mx=\frac{U}{c^2}\,L. \end{equation}

2025.10.9: Since we assumed that the center of gravity does not change, the sum of mass times change of position must be $0$, that is, $-(M-\frac{U}{c^2})x+\frac{U}{c^2}L=0$

However, by the additional assumption above, $(M-\frac{U}{c^2})x \approx Mx$ and we get Eq. (27.22)

10.10: The other way around... Eq. (27.22) comes from a conservation law of qi that is more fundamental than energy/momentum, so if we accept it, the center of gravity does not change

Let’s now look at the special case of the energy being carried by a light flash. (The argument would work as well for particles, but we will follow Einstein, who was interested in the problem of light.) What causes the car to be moved? Einstein argued as follows: When the light is emitted there must be a recoil, some unknown recoil with momentum $p$. It is this recoil which makes the car roll backward. The recoil velocity $v$ of the car will be this momentum divided by the mass of the car: \begin{equation*} v=\frac{p}{M}. \end{equation*} The car moves with this velocity until the light energy $U$ gets to the opposite end. Then, when it hits, it gives back its momentum and stops the car. If $x$ is small, then the time the car moves is nearly equal to $L/c$; so we have that \begin{equation*} x=vt=v\,\frac{L}{c}=\frac{p}{M}\,\frac{L}{c}. \end{equation*} Putting this $x$ in Eq. (27.22), we get that \begin{equation*} p=\frac{U}{c}. \end{equation*}

2025.10.12: Let $t_0$ be the time the car moves. $ct_0+vt_0=L,\ vt_0=x \Rightarrow t_0=\frac{L-x}{c}$

See: relative velocity, and the relation between time and distance
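The approximations $t\approx L/c$ and $U/c^2\ll M$ used above can be avoided. As a sketch, suppose (these are assumptions added here, not part of the argument as given) that $M$ is the total mass including the mass $U/c^2$ of the energy, that the car is rigid, and that it moves slowly enough for Newtonian mechanics to apply to it. While the flash is in flight the part that recoils has the mass $M-U/c^2$, and the flash itself travels only the distance $L-x$ relative to the ground, so the center-of-gravity condition and the flight time are \begin{equation*} \biggl(M-\frac{U}{c^2}\biggr)x=\frac{U}{c^2}\,(L-x),\qquad t_0=\frac{L-x}{c}. \end{equation*} The recoil velocity is $v=p/(M-U/c^2)$, so $x=vt_0$ gives \begin{equation*} \biggl(M-\frac{U}{c^2}\biggr)x=p\,\frac{L-x}{c}. \end{equation*} Comparing the two expressions, the factor $(L-x)$ cancels and \begin{equation*} p=\frac{U}{c}, \end{equation*} which, within these assumptions, needs no approximation on $x$ or on $U/c^2$. The first condition also rearranges to $Mx=(U/c^2)L$, which is Eq. (27.22).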

You may well wonder: What is so important about the center-of-gravity theorem? Maybe it is wrong. Perhaps, but then we would also lose the conservation of angular momentum. Suppose that our boxcar is moving along a track at some speed $v$ and that we shoot some light energy from the top to the bottom of the car—say, from $A$ to $B$ in Fig. 27–8. Now we look at the angular momentum of the system about the point $P$. Before the energy $U$ leaves $A$, it has the mass $m=U/c^2$ and the velocity $v$, so it has the angular momentum $mvr_A$. When it arrives at $B$, it has the same mass and, if the linear momentum of the whole boxcar is not to change, it must still have the velocity $v$. Its angular momentum about $P$ is then $mvr_B$. The angular momentum will be changed unless the right recoil momentum was given to the car when the light was emitted—that is, unless the light carries the momentum $U/c$. It turns out that the angular momentum conservation and the theorem of center-of-gravity are closely related in the relativity theory. So the conservation of angular momentum would also be destroyed if our theorem were not true. At any rate, it does turn out to be a true general law, and in the case of electrodynamics we can use it to get the momentum in the field.

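2025.10.15: This is just a special case of action and reaction...

Action and reaction, the Archimedes pump, spiral rollers, and so on seem to come from the spiral helix of qi.

10.23: People keep saying “relativity theory”... to put it clearly:

Following Poincaré’s proposal that all physical laws must be invariant under the Lorentz transformation, Einstein modified Newton’s second law, and from the $E=mc^2$ obtained in that way,

if the energy of the light is $E$, then its mass and momentum are $m=\frac{E}{c^2},\ p=mc=\frac{E}{c}$

<= this is what is meant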

We will mention two further examples of momentum in the electromagnetic field. We pointed out in Section 26–2 the failure of the law of action and reaction when two charged particles were moving on orthogonal trajectories. The forces on the two particles don’t balance out, so the action and reaction are not equal; therefore the net momentum of the matter must be changing. It is not conserved. But the momentum in the field is also changing in such a situation. If you work out the amount of momentum given by the Poynting vector, it is not constant. However, the change of the particle momenta is just made up by the field momentum, so the total momentum of particles plus field is conserved.

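2024.7.11: Mass, fields, and so on are made up of qi/motions, gathering and dispersing... everything is essentially a flow of qi

12.2: The key is the conservation of qi, which is more fundamental than momentum.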

Finally, another example is the situation with the magnet and the charge, shown in Fig. 27–6. We were unhappy to find that energy was flowing around in circles, but now, since we know that energy flow and momentum are proportional, we know also that there is momentum circulating in the space. But a circulating momentum means that there is angular momentum. So there is angular momentum in the field. Do you remember the paradox we described in Section 17–4 about a solenoid and some charges mounted on a disc? It seemed that when the current turned off, the whole disc should start to turn. The puzzle was: Where did the angular momentum come from? The answer is that if you have a magnetic field and some charges, there will be some angular momentum in the field. It must have been put there when the field was built up. When the field is turned off, the angular momentum is given back. So the disc in the paradox would start rotating. This mystic circulating flow of energy, which at first seemed so ridiculous, is absolutely necessary. There is really a momentum flow. It is needed to maintain the conservation of angular momentum in the whole world.

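2026.1.9: Nature is a three-dimensional world made by the flow and interaction of qi passing in and out of higher dimensions

Quantum mechanics and relativity theory are mathematical descriptions of the flows of qi

1. Steady electrostatic fields, the uncertainty principle, etc.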

2. 2025.10.18:

Feynman’s vague explanations of “qi flow” (the evolution of physical concepts)

(1) collision 1, knocking, internal random motion

(2) direction of the qi injected into a gyroscope

muscle force

(3) force trying to turn the wheel

(4) passes energy to the molecules in just the right way

(5) average velocity of gas molecules depends on the temperature

(6) circulating flow, etc.

(7) orbital angular momentum <=> (linear) momentum, spin angular momentum <=> orbital angular momentum

(8) equilibrium, 2, 3, 4, 5

2024.7.15: As one starts to disappear, another starts to appear just like $x^2+y^2=1$

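3. Knots of qi

2025.1.28: lower floor, upper floor

Qi flow inside mass

① Like a sprinkler, electrons scatter qi at random, and

② the clumps of qi that pile up, knocked this way and that by the arrangement of the electrons, move as in Fig. 31–3 of Vol. I and, on meeting an opaque barrier, are reflected or refracted and so keep bouncing around

The motion/drift of mass and the coupled pendulums are the result of this,

and the fact that, in coupled pendulums, the second pendulum oscillates with the same orientation as the first is explained by the conservation laws of linear and angular momentum, which say that the amount of qi in each direction does not change unless some flows in from outside.

In terms of qi, this says that the amounts and directions of qi do not change.

Having met the same structure with its original disposition, how could its behavior inside it be anything but the same? The direction of precession of a gyroscope can be explained this way too.

③ And, in light of Feynman’s explanations above and of opacity (barriers), the flow of qi between masses in contact occurs through gaps produced by the structural cracks/deformations that qi has formed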