23 Resonance

23–1 Complex numbers and harmonic motion

In the present chapter we shall continue our discussion of the harmonic oscillator and, in particular, the forced harmonic oscillator, using a new technique in the analysis. In the preceding chapter we introduced the idea of complex numbers, which have real and imaginary parts and which can be represented on a diagram in which the ordinate represents the imaginary part and the abscissa represents the real part. If $a$ is a complex number, we may write it as $a = a_r + ia_i$, where the subscript $r$ means the real part of $a$, and the subscript $i$ means the imaginary part of $a$. Referring to Fig. 23–1, we see that we may also write a complex number $a = x + iy$ in the form $x + iy = re^{i\theta}$, where $r^2 = x^2 + y^2 = (x + iy)(x - iy) = aa\cconj$. (The complex conjugate of $a$, written $a\cconj$, is obtained by reversing the sign of $i$ in $a$.) So we shall represent a complex number in either of two forms, a real plus an imaginary part, or a magnitude $r$ and a phase angle $\theta$, so-called. Given $r$ and $\theta$, $x$ and $y$ are clearly $r\cos\theta$ and $r\sin\theta$ and, in reverse, given a complex number $x + iy$, $r = \sqrt{x^2 + y^2}$ and $\tan\theta= y/x$, the ratio of the imaginary to the real part.
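The two representations described above can be sketched in a few lines of Python. This is only an illustration: the helper names `to_polar` and `from_polar` and the sample value `3 + 4j` are assumptions, not part of the text. Note that $\tan\theta = y/x$ alone does not fix the quadrant of $\theta$; the sketch uses `cmath.phase`, which resolves it from the signs of $x$ and $y$.

```python
import cmath
import math

def to_polar(a):
    """Return (r, theta) such that a == r * exp(i * theta)."""
    r = abs(a)              # r = sqrt(x**2 + y**2)
    theta = cmath.phase(a)  # atan2(y, x), so the quadrant is resolved
    return r, theta

def from_polar(r, theta):
    """Return x + iy with x = r cos(theta) and y = r sin(theta)."""
    return complex(r * math.cos(theta), r * math.sin(theta))

a = 3.0 + 4.0j                        # illustrative value
r, theta = to_polar(a)
r_squared = (a * a.conjugate()).real  # a times its conjugate equals r**2
```

Here `r` is the magnitude, `theta` the phase angle, and `r_squared` checks the identity $r^2 = aa^*$ for this one value.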

We are going to apply complex numbers to our analysis of physical phenomena by the following trick. We have examples of things that oscillate; the oscillation may have a driving force which is a certain constant times $\cos\omega t$. Now such a force, $F = F_0\cos\omega t$, can be written as the real part of a complex number $F = F_0e^{i\omega t}$ because $e^{i\omega t} = \cos\omega t + i\sin\omega t$. The reason we do this is that it is easier to work with an exponential function than with a cosine. So the whole trick is to represent our oscillatory functions as the real parts of certain complex functions. The complex number $F$ that we have so defined is not a real physical force, because no force in physics is really complex; actual forces have no imaginary part, only a real part. We shall, however, speak of the “force” $F_0e^{i\omega t}$, but of course the actual force is the real part of that expression.

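2025.4.22: Why $i$ had to appear

1. Electrons move in and out of higher dimensions, beyond the three dimensions that limit human perception, and

2026.2.3: push/squeeze qi (기) up to top

2. when mathematics (a simulation tool for describing nature) is used to trace and describe the qi that moves in and out of unseen dimensions, one arrives at

functions describing current, potential, and so on; the vector potential and phase;

Hamilton's equations and the like; and the Dirac equation (below) ...

* That the "imaginary" of this higher-dimensional representation was named 허수 (heosu, literally "empty number") shows the level of the East.

2024.3.8: Considering only the real part is for the benefit of humans, who perceive only the projection of a higher-dimensional circular action onto three dimensions.

In Silverman's Complex Variables, evaluating limiting integrals by way of contours in the complex plane is likewise a projection onto three dimensions of a higher-dimensional computation with the imaginary unit $i$*.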

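3. 4.29: The Dirac equation for the motion of the electron

Following the line that runs through Archimedes, Galileo, Newton, Riemann, Maxwell, Einstein, and others,

it is a model case of matching physical phenomena by building a mathematical description that fits the experimental results (the prediction of the positron).

(1) Because the Schrödinger equation for the motion of the electron, published in 1926, had solutions that approximated the spectrum of the hydrogen atom,

(2) Dirac, following Poincaré and Einstein, sought a relativistic version of it, that is, an equation invariant under the Lorentz transformation.

But it contains second derivatives, like the non-relativistic Newtonian equation, so instead of a conversion that looked difficult,

(3) he started, on the model of the relativistic first-order Maxwell equations, from $p_0+\alpha_1 p_1+ \alpha_2 p_2 + \alpha_3 p_3 +\beta =p_0+D$.

Dirac gave a complicated account, citing Gordon, Klein, and others, but

the essence is, as Hamilton had attempted, to find a first-order operator that is a square root of the Schrödinger operator $\nabla^2+V$ (a variant of the Laplacian $\nabla^2$), i.e. $|p_0+D|^2=\pm(\nabla ^2+ V)$...

10&5.10&7.31: What results are relations such as ${\alpha_r}^2=1$ and $\alpha_r \alpha_s + \alpha_s \alpha_r=0$ (for $r \neq s$), and since $\alpha_r, \beta$, like Hamilton's quaternions, belong to four dimensions,

(4) the electron is a higher-dimensional entity.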

① $(p_0+D)\psi=0 \iff$

$\frac{\partial \psi}{\partial t} = - i\frac{\partial \psi}{\partial x_1}I - i\frac{\partial \psi}{\partial x_2}J - i\frac{\partial \psi}{\partial x_3}K -\beta \psi$, from which (cf. gyroscope)

$(p_0+D) \overline{(p_0+D)}\psi= \frac{\partial^2 \psi}{\partial t^2}-\nabla^2 \psi -V\psi=0$

$\Rightarrow$ a wave equation

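② 5.15: Example: a simple Dirac operator defined on $S^1$

Let $A=\{\text{differentiable complex functions } \mathbb{C}^2 \rightarrow \mathbb{C}^2\}$, and restrict $\frac{d}{dx}-\lambda : A \rightarrow \{\text{complex functions}\}$ to $S^1=\{x^2+y^2=1\}$; then

$\frac{d}{dx}-\lambda$ is an operator defined on $S^1$, and

1) a unit change along the projected $x$-axis, when $\lambda$ is complex, adds a change into a new dimension, and the Dirac equation means that the electron exists in six or more dimensions.

2) Its kernel, that is,

the solution of $\left(\frac{d}{dx}-\lambda\right)\psi=0$, is $\psi(x)=\exp(\lambda x)$; if $x=1, -1$, then $\exp(\lambda)=\exp(-\lambda) \Rightarrow \exp(2\lambda)=1 \Rightarrow \lambda=in\pi$, $n$ an integer.

A kernel exists only when $\lambda=in\pi$.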

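(5) Dirac's approach is a mathematical manipulation, mathematics being a tool for simulating natural phenomena:

① $\sqrt{\nabla^2}$ is an extension to four dimensions, just as the domain was extended from $\mathbb{R}$ to $\mathbb{C}$ in order to solve $x^2=-1$ (* one half factorial or derivative)... $\sqrt{\nabla \times \nabla}=\nabla \times$

② it is of a piece with the Lorentz contraction, introduced to fit the Michelson–Morley experiment,

③ and with the introduction of Planck's constant to build a cutoff function for the black-body problem;

④ the other solution of the equation was observed as the positron, which carries positive charge;

⑤ Atiyah and Singer applied the Dirac operator to the generalization of structure-preserving theorems, which supports its being a successful mathematical simulation; and also the geometric structure of color, and so on.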

Let us take another example. Suppose we want to represent a force which is a cosine wave that is out of phase with a delayed phase $\Delta $. This, of course, would be the real part of $F_0e^{i(\omega t-\Delta)}$, but exponentials being what they are, we may write $e^{i(\omega t-\Delta)}= e^{i\omega t}e^{-i\Delta}$. Thus we see that the algebra of exponentials is much easier than that of sines and cosines; this is the reason we choose to use complex numbers. We shall often write \begin{equation} \label{Eq:I:23:1} F=F_0e^{-i\Delta}e^{i\omega t}=\hat{F}e^{i\omega t}. \end{equation} We write a little caret ($\hat{\enspace}$) over the $F$ to remind ourselves that this quantity is a complex number(* see 37-4): here the number is \begin{equation*} \hat{F}=F_0e^{-i\Delta}. \end{equation*}
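As a quick numerical check of Eq. (23.1), the following sketch compares the real part of $\hat{F}e^{i\omega t}$ with the delayed cosine written out directly. The values of `F0`, `omega`, and `delta` and the function names are illustrative assumptions only.

```python
import cmath
import math

F0, omega, delta = 2.0, 3.0, 0.4     # illustrative amplitude, frequency, delay
F_hat = F0 * cmath.exp(-1j * delta)  # the complex number F-hat of Eq. (23.1)

def force_from_complex(t):
    """Actual force: the real part of F_hat * exp(i * omega * t)."""
    return (F_hat * cmath.exp(1j * omega * t)).real

def force_direct(t):
    """The same force written as a delayed cosine."""
    return F0 * math.cos(omega * t - delta)

largest_gap = max(abs(force_from_complex(t) - force_direct(t))
                  for t in (0.0, 0.1, 1.7, 5.0))
```

The phase delay lives entirely in the constant `F_hat`; the time dependence is always the same factor $e^{i\omega t}$.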

Now let us solve an equation, using complex numbers, to see whether we can work out a problem for some real case. For example, let us try to solve \begin{equation} \label{Eq:I:23:2} \frac{d^2x}{dt^2}+\frac{kx}{m}=\frac{F}{m}=\frac{F_0}{m}\cos\omega t, \end{equation} where $F$ is the force which drives the oscillator and $x$ is the displacement. Now, absurd though it may seem, let us suppose that $x$ and $F$ are actually complex numbers, for a mathematical purpose only. That is to say, $x$ has a real part and an imaginary part times $i$, and $F$ has a real part and an imaginary part times $i$. Now if we had a solution of (23.2) with complex numbers, and substituted the complex numbers in the equation, we would get \begin{equation*} \frac{d^2(x_r+ix_i)}{dt^2}+\frac{k(x_r+ix_i)}{m}= \frac{F_r+iF_i}{m} \end{equation*} or \begin{equation*} \frac{d^2x_r}{dt^2}+\frac{kx_r}{m}+i\biggl( \frac{d^2x_i}{dt^2}+\frac{kx_i}{m}\biggr)= \frac{F_r}{m}+\frac{iF_i}{m}. \end{equation*} Now, since if two complex numbers are equal, their real parts must be equal and their imaginary parts must be equal, we deduce that the real part of $x$ satisfies the equation with the real part of the force. We must emphasize, however, that this separation into a real part and an imaginary part is not valid in general, but is valid only for equations which are linear, that is, for equations in which $x$ appears in every term only in the first power or the zeroth power. For instance, if there were in the equation a term $\lambda x^2$, then when we substitute $x_r + ix_i$, we would get $\lambda(x_r + ix_i)^2$, but when separated into real and imaginary parts this would yield $\lambda(x_r^2 - x_i^2)$ as the real part and $2i\lambda x_rx_i$ as the imaginary part. So we see that the real part of the equation would not involve just $\lambda x_r^2$, but also $-\lambda x_i^2$. 
In this case we get a different equation than the one we wanted to solve, with $x_i$, the completely artificial thing we introduced in our analysis, mixed in.
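The difference between a linear term and a term like $\lambda x^2$ can be seen with a single complex number. In this small sketch the values of `lam` and `x` are arbitrary assumptions chosen so the arithmetic is easy to follow.

```python
lam = 2.0
x = 1.5 + 0.5j  # hypothetical complex "displacement" x_r + i x_i

# Linear term: the real part involves x_r only.
linear_real = (lam * x).real          # equals lam * x_r

# Quadratic term: the real part is lam * (x_r**2 - x_i**2),
# so the artificial x_i leaks into the real equation.
quadratic_real = (lam * x ** 2).real
wanted = lam * x.real ** 2            # what the real equation would contain
```

For these values `quadratic_real` and `wanted` differ by $\lambda x_i^2$, which is exactly the unwanted term described above.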

Let us now try our new method for the problem of the forced oscillator, that we already know how to solve. We want to solve Eq. (23.2) as before, but we say that we are going to try to solve \begin{equation} \label{Eq:I:23:3} \frac{d^2x}{dt^2}+\frac{kx}{m}=\frac{\hat{F}e^{i\omega t}}{m}, \end{equation} where $\hat{F}e^{i\omega t}$ is a complex number. Of course $x$ will also be complex, but remember the rule: take the real part to find out what is really going on. So we try to solve (23.3) for the forced solution; we shall discuss other solutions later. The forced solution has the same frequency as the applied force, and has some amplitude of oscillation and some phase, and so it can be represented also by some complex number $\hat{x}$ whose magnitude represents the swing of $x$ and whose phase represents the time delay in the same way as for the force. Now a wonderful feature of an exponential function $x=\hat{x}e^{i\omega t}$ is that $dx/dt = i\omega x$. When we differentiate an exponential function, we bring down the exponent as a simple multiplier. The second derivative does the same thing, it brings down another $i\omega$, and so it is very simple to write immediately, by inspection, what the equation is for $x$: every time we see a differentiation, we simply multiply by $i\omega$. (Differentiation is now as easy as multiplication! This idea of using exponentials in linear differential equations is almost as great as the invention of logarithms, in which multiplication is replaced by addition. Here differentiation is replaced by multiplication.) Thus our equation becomes \begin{equation} \label{Eq:I:23:4} (i\omega)^2\hat{x}+(k\hat{x}/m)=\hat{F}/m. \end{equation} (We have cancelled the common factor $e^{i\omega t}$.) See how simple it is! 
Differential equations are immediately converted, by sight, into mere algebraic equations; we virtually have the solution by sight, that \begin{equation*} \hat{x}=\frac{\hat{F}/m}{(k/m)-\omega^2}, \end{equation*} since $(i\omega)^2 =-\omega^2$. This may be slightly simplified by substituting $k/m = \omega_0^2$, which gives \begin{equation} \label{Eq:I:23:5} \hat{x}=\hat{F}/m(\omega_0^2-\omega^2). \end{equation} This, of course, is the solution we had before; for since $m(\omega_0^2 - \omega^2)$ is a real number, the phase angles of $\hat{F}$ and of $\hat{x}$ are the same (or perhaps $180^\circ$ apart, if $\omega^2 > \omega_0^2$), as advertised previously. The magnitude of $\hat{x}$, which measures how far it oscillates, is related to the size of the $\hat{F}$ by the factor $1/m(\omega_0^2-\omega^2)$, and this factor becomes enormous when $\omega$ is nearly equal to $\omega_0$. So we get a very strong response when we apply the right frequency $\omega$ (if we hold a pendulum on the end of a string and shake it at just the right frequency, we can make it swing very high).
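The claim that the real part of $\hat{x}e^{i\omega t}$ solves Eq. (23.2) can be checked numerically. The sketch below is a minimal illustration under assumed values of `m`, `k`, `omega`, and `F_hat`; the second derivative is estimated with a central difference, so the residual is small but not exactly zero.

```python
import cmath
import math

def undamped_amplitude(F_hat, m, omega0, omega):
    """x-hat from Eq. (23.5); not defined when omega equals omega0."""
    return F_hat / (m * (omega0 ** 2 - omega ** 2))

m, k, omega = 2.0, 18.0, 2.0      # illustrative values
omega0 = math.sqrt(k / m)         # natural frequency, here 3.0
F_hat = 1.0 * cmath.exp(-0.3j)    # complex force with an assumed phase
x_hat = undamped_amplitude(F_hat, m, omega0, omega)

def x(t):
    return (x_hat * cmath.exp(1j * omega * t)).real

def F(t):
    return (F_hat * cmath.exp(1j * omega * t)).real

def residual(t, h=1e-4):
    """m x'' + k x - F, with x'' from a central difference of step h."""
    x_second = (x(t + h) - 2.0 * x(t) + x(t - h)) / h ** 2
    return m * x_second + k * x(t) - F(t)

worst = max(abs(residual(t)) for t in (0.0, 0.7, 2.5))
```

The residual stays at the level of the finite-difference error, which is what one expects if the algebraic solution is right.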

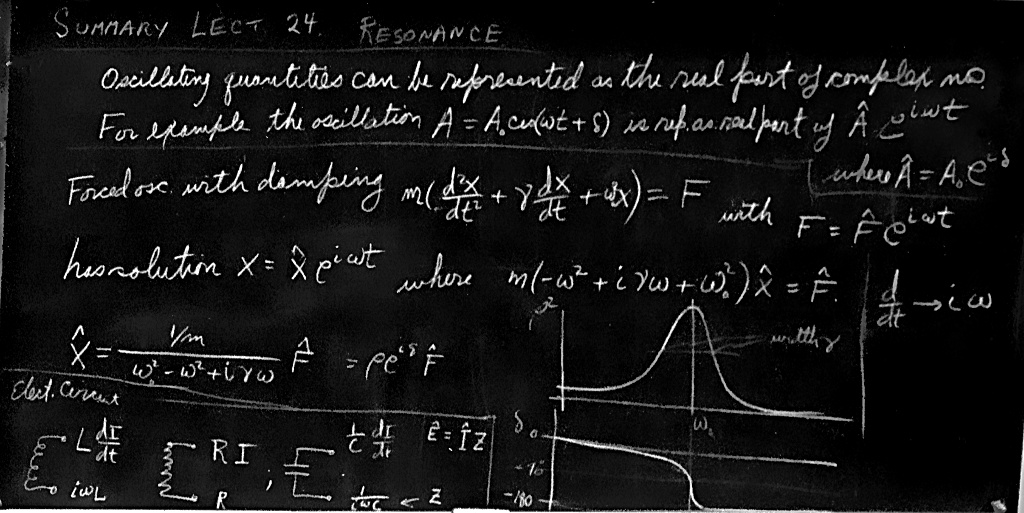

23–2 The forced oscillator with damping

That, then, is how we analyze oscillatory motion with the more elegant mathematical technique. But the elegance of the technique is not at all exhibited in such a problem that can be solved easily by other methods. It is only exhibited when one applies it to more difficult problems. Let us therefore solve another, more difficult problem, which furthermore adds a relatively realistic feature to the previous one. Equation (23.5) tells us that if the frequency $\omega$ were exactly equal to $\omega_0$, we would have an infinite response. Actually, of course, no such infinite response occurs because other things, like friction, which we have so far ignored, limit the response. Let us therefore add to Eq. (23.2) a friction term.

Ordinarily such a problem is very difficult because of the character and complexity of the frictional term. There are, however, many circumstances in which the frictional force is proportional to the speed with which the object moves. An example of such friction is the friction for slow motion of an object in oil or a thick liquid. There is no force when it is just standing still, but the faster it moves the faster the oil has to go past the object, and the greater is the resistance. So we shall assume that there is, in addition to the terms in (23.2), another term, a resistance force proportional to the velocity: $F_f =-c\,dx/dt$. It will be convenient, in our mathematical analysis, to write the constant $c$ as $m$ times $\gamma$ to simplify the equation a little. This is just the same trick we use with $k$ when we replace it by $m\omega_0^2$, just to simplify the algebra. Thus our equation will be \begin{equation} \label{Eq:I:23:6} m(d^2x/dt^2)+c(dx/dt)+kx=F \end{equation} or, writing $c = m\gamma$ and $k = m\omega_0^2$ and dividing out the mass $m$, \begin{equation*} \label{Eq:I:23:6a} (d^2x/dt^2)+\gamma(dx/dt)+\omega_0^2x=F/m. \tag{23.6a} \end{equation*}

Now we have the equation in the most convenient form to solve. If $\gamma$ is very small, that represents very little friction; if $\gamma$ is very large, there is a tremendous amount of friction. How do we solve this new linear differential equation? Suppose that the driving force is equal to $F_0\cos\,(\omega t+\Delta)$; we could put this into (23.6a) and try to solve it, but we shall instead solve it by our new method. Thus we write $F$ as the real part of $\hat{F}e^{i\omega t}$ and $x$ as the real part of $\hat{x}e^{i\omega t}$, and substitute these into Eq. (23.6a). It is not even necessary to do the actual substituting, for we can see by inspection that the equation would become \begin{equation} \label{Eq:I:23:7} [(i\omega)^2\hat{x}+\gamma(i\omega)\hat{x}+\omega_0^2\hat{x}] e^{i\omega t}=(\hat{F}/m)e^{i\omega t}. \end{equation} [As a matter of fact, if we tried to solve Eq. (23.6a) by our old straightforward way, we would really appreciate the magic of the “complex” method.] If we divide by $e^{i\omega t}$ on both sides, then we can obtain the response $\hat{x}$ to the given force $\hat{F}$; it is \begin{equation} \label{Eq:I:23:8} \hat{x}=\hat{F}/m(\omega_0^2-\omega^2+i\gamma\omega). \end{equation}

Thus again $\hat{x}$ is given by $\hat{F}$ times a certain factor. There is no technical name for this factor, no particular letter for it, but we may call it $R$ for discussion purposes: \begin{equation} R=\frac{1}{m(\omega_0^2-\omega^2+i\gamma\omega)}\notag \end{equation} and \begin{equation} \label{Eq:I:23:9} \hat{x}=\hat{F}R. \end{equation} (Although the letters $\gamma$ and $\omega_0$ are in very common use, this $R$ has no particular name.) This factor $R$ can either be written as $p+iq$, or as a certain magnitude $\rho$ times $e^{i\theta}$. If it is written as a certain magnitude times $e^{i\theta}$, let us see what it means. Now $\hat{F}= F_0e^{i\Delta}$, and the actual force $F$ is the real part of $F_0e^{i\Delta}e^{i\omega t}$, that is, $F_0 \cos\,(\omega t + \Delta)$. Next, Eq. (23.9) tells us that $\hat{x}$ is equal to $\hat{F}R$. So, writing $R = \rho e^{i\theta}$ as another name for $R$, we get \begin{equation*} \hat{x}=R\hat{F}=\rho e^{i\theta}F_0e^{i\Delta}=\rho F_0e^{i(\theta+\Delta)}. \end{equation*} Finally, going even further back, we see that the physical $x$, which is the real part of the complex $\hat{x}e^{i\omega t}$, is equal to the real part of $\rho F_0e^{i(\theta+\Delta)}e^{i\omega t}$. But $\rho$ and $F_0$ are real, and the real part of $e^{i(\theta+\Delta+\omega t)}$ is simply $\cos\,(\omega t + \Delta + \theta)$. Thus \begin{equation} \label{Eq:I:23:10} x=\rho F_0\cos\,(\omega t+\Delta+\theta). \end{equation} This tells us that the amplitude of the response is the magnitude of the force $F$ multiplied by a certain magnification factor, $\rho$; this gives us the “amount” of oscillation. It also tells us, however, that $x$ is not oscillating in phase with the force, which has the phase $\Delta$, but is shifted by an extra amount $\theta$. Therefore $\rho$ and $\theta$ represent the size of the response and the phase shift of the response.

Now let us work out what $\rho$ is. If we have a complex number, the square of the magnitude is equal to the number times its complex conjugate; thus \begin{equation} \begin{aligned} \rho^2&=\frac{1}{m^2(\omega_0^2-\omega^2+i\gamma\omega) (\omega_0^2-\omega^2-i\gamma\omega)}\\[1ex] &=\frac{1}{m^2[(\omega_0^2-\omega^2)^2+\gamma^2\omega^2]}. \end{aligned} \label{Eq:I:23:11} \end{equation}

In addition, the phase angle $\theta$ is easy to find, for if we write \begin{equation} 1/R=1/\rho e^{i\theta}=(1/\rho)e^{-i\theta}= m(\omega_0^2-\omega^2+i\gamma\omega),\notag \end{equation} we see that \begin{equation} \label{Eq:I:23:12} \tan\theta=-\gamma\omega/(\omega_0^2-\omega^2). \end{equation} It is minus because $\tan (-\theta) =-\tan\theta$. A negative value for $\theta$ results for all $\omega$, and this corresponds to the displacement $x$ lagging the force $F$.
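Equations (23.8) through (23.12) translate almost directly into code. The following sketch, with an assumed function name `response` and illustrative parameter values, computes the factor $R$ and then reads off $\rho$ and $\theta$ from it.

```python
import cmath
import math

def response(m, omega0, gamma, omega):
    """The complex factor R with x-hat = F-hat * R, from Eq. (23.8)."""
    return 1.0 / (m * (omega0 ** 2 - omega ** 2 + 1j * gamma * omega))

m, omega0, gamma, omega = 1.0, 1.0, 0.2, 0.9  # illustrative values
R = response(m, omega0, gamma, omega)
rho = abs(R)            # magnification factor
theta = cmath.phase(R)  # phase shift of the response relative to the force

# Eq. (23.11) written out, for comparison with abs(R)**2.
rho_sq_formula = 1.0 / (m ** 2 * ((omega0 ** 2 - omega ** 2) ** 2
                                  + gamma ** 2 * omega ** 2))

def displacement(t, F0=1.0, delta=0.0):
    """Physical x(t) of Eq. (23.10) for a force F0 cos(omega t + delta)."""
    return rho * F0 * math.cos(omega * t + delta + theta)
```

With these values `theta` comes out negative, consistent with the displacement lagging the force.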

Figure 23–2 shows how $\rho^2$ varies as a function of frequency ($\rho^2$ is physically more interesting than $\rho$, because $\rho^2$ is proportional to the square of the amplitude, or more or less to the energy that is developed in the oscillator by the force). We see that if $\gamma$ is very small, then $1/(\omega_0^2 - \omega^2)^2$ is the most important term, and the response tries to go up toward infinity when $\omega$ equals $\omega_0$. Now the “infinity” is not actually infinite because if $\omega=\omega_0$, then $1/\gamma^2\omega^2$ is still there. The phase shift varies as shown in Fig. 23–3.

In certain circumstances we get a slightly different formula than (23.8), also called a “resonance” formula, and one might think that it represents a different phenomenon, but it does not. The reason is that if $\gamma$ is very small the most interesting part of the curve is near $\omega = \omega_0$, and we may replace (23.8) by an approximate formula which is very accurate if $\gamma$ is small and $\omega$ is near $\omega_0$. Since $\omega_0^2 - \omega^2 = (\omega_0-\omega)(\omega_0 + \omega)$, if $\omega$ is near $\omega_0$ this is nearly the same as $2\omega_0(\omega_0 - \omega)$ and $\gamma\omega$ is nearly the same as $\gamma\omega_0$. Using these in (23.8), we see that $\omega_0^2-\omega^2 + i\gamma\omega \approx 2\omega_0(\omega_0-\omega+i\gamma/2)$, so that \begin{equation} \begin{gathered} \hat{x}\approx \hat{F}/2m\omega_0(\omega_0-\omega+i\gamma/2)\\[.5ex] \text{ if }\gamma\ll\omega_0\text{ and } \omega\approx\omega_0. \end{gathered} \label{Eq:I:23:13} \end{equation} It is easy to find the corresponding formula for $\rho^2$. It is \begin{equation*} \rho^2\approx 1/4m^2\omega_0^2[(\omega_0-\omega)^2+\gamma^2/4]. \end{equation*}
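How good the approximation (23.13) is can be judged by comparing it with (23.8) at a few frequencies. The sketch below uses assumed function names and illustrative values with $\gamma \ll \omega_0$; it is a spot check, not an error analysis.

```python
def exact_response(F_hat, m, omega0, gamma, omega):
    """x-hat from Eq. (23.8)."""
    return F_hat / (m * (omega0 ** 2 - omega ** 2 + 1j * gamma * omega))

def near_resonance_response(F_hat, m, omega0, gamma, omega):
    """x-hat from the approximate Eq. (23.13)."""
    return F_hat / (2.0 * m * omega0 * (omega0 - omega + 0.5j * gamma))

def relative_error(omega, F_hat=1.0, m=1.0, omega0=1.0, gamma=0.01):
    exact = exact_response(F_hat, m, omega0, gamma, omega)
    approx = near_resonance_response(F_hat, m, omega0, gamma, omega)
    return abs(approx - exact) / abs(exact)

error_near = relative_error(1.002)  # close to omega0
error_far = relative_error(0.5)     # far from omega0
```

Close to $\omega_0$ the two formulas agree to a fraction of a percent for these values, while far from resonance the approximation is visibly off, as the stated conditions suggest.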

We shall leave it to the student to show the following: if we call the maximum height of the curve of $\rho^2$ vs. $\omega$ one unit, and we ask for the width $\Delta\omega$ of the curve, at one half the maximum height, the full width at half the maximum height of the curve is $\Delta\omega=\gamma$, supposing that $\gamma$ is small. The resonance is sharper and sharper as the frictional effects are made smaller and smaller.
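The width statement can also be checked numerically before proving it. This sketch finds the two half-maximum frequencies of the exact $\rho^2$ by bisection; the helper names, the bracketing interval of $5\gamma$ on each side, and the parameter values are all assumptions, and it presumes $\gamma \ll \omega_0$.

```python
import math

def rho_sq(omega, m, omega0, gamma):
    """rho squared from Eq. (23.11)."""
    return 1.0 / (m ** 2 * ((omega0 ** 2 - omega ** 2) ** 2
                            + (gamma * omega) ** 2))

def bisect(f, lo, hi, iters=200):
    """Root of f on [lo, hi], assuming f(lo) and f(hi) differ in sign."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def full_width_half_max(m, omega0, gamma):
    # Setting d(rho_sq)/d(omega) = 0 gives the peak at omega**2 = omega0**2 - gamma**2 / 2.
    omega_peak = math.sqrt(omega0 ** 2 - gamma ** 2 / 2.0)
    half = 0.5 * rho_sq(omega_peak, m, omega0, gamma)
    f = lambda w: rho_sq(w, m, omega0, gamma) - half
    left = bisect(f, omega_peak - 5.0 * gamma, omega_peak)
    right = bisect(f, omega_peak, omega_peak + 5.0 * gamma)
    return right - left

gamma = 0.01
width = full_width_half_max(m=1.0, omega0=1.0, gamma=gamma)
```

For this small `gamma` the computed `width` agrees with $\gamma$ to well within a tenth of a percent.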

2025.11.16: Dirac delta

As another measure of the width, some people use a quantity $Q$ which is defined as $Q = \omega_0/\gamma$. The narrower the resonance, the higher the $Q$: $Q= 1000$ means a resonance whose width is only $1/1000$th of the frequency scale. The $Q$ of the resonance curve shown in Fig. 23–2 is $5$.

The importance of the resonance phenomenon is that it occurs in many other circumstances, and so the rest of this chapter will describe some of these other circumstances.

23–3 Electrical resonance

The simplest and broadest technical applications of resonance are in electricity. In the electrical world there are a number of objects which can be connected to make electric circuits. These passive circuit elements, as they are often called, are of three main types, although each one has a little bit of the other two mixed in. Before describing them in greater detail, let us note that the whole idea of our mechanical oscillator being a mass on the end of a spring is only an approximation. All the mass is not actually at the “mass”; some of the mass is in the inertia of the spring. Similarly, all of the spring is not at the “spring”; the mass itself has a little elasticity, and although it may appear so, it is not absolutely rigid, and as it goes up and down, it flexes ever so slightly under the action of the spring pulling it. The same thing is true in electricity. There is an approximation in which we can lump things into “circuit elements” which are assumed to have pure, ideal characteristics. This is not the proper time to discuss that approximation; we shall simply assume that it is true in the circumstances.

The three main kinds of circuit elements are the following. The first is called a capacitor (Fig. 23–4); an example is two plane metallic plates spaced a very small distance apart by an insulating material. When the plates are charged there is a certain voltage difference, that is, a certain difference in potential, between them. The same difference of potential appears between the terminals $A$ and $B$, because if there were any difference along the connecting wire, electricity would flow right away. So there is a certain voltage difference $V$ between the plates if there is a certain electric charge $+q$ and $-q$ on them, respectively. Between the plates there will be a certain electric field; we have even found a formula for it (Chapters 13 and 14): \begin{equation} \label{Eq:I:23:14} V=\sigma d/\epsO=qd/\epsO A, \end{equation} where $d$ is the spacing and $A$ is the area of the plates. Note that the potential difference is a linear function of the charge. If we do not have parallel plates, but insulated electrodes which are of any other shape, the difference in potential is still precisely proportional to the charge, but the constant of proportionality may not be so easy to compute. However, all we need to know is that the potential difference across a capacitor is proportional to the charge: $V = q/C$; the proportionality constant is $1/C$, where $C$ is the capacitance of the object.

The second kind of circuit element is called a resistor; it offers resistance to the flow of electrical current. It turns out that metallic wires and many other substances resist the flow of electricity in this manner: if there is a voltage difference across a piece of some substance, there exists an electric current $I= dq/dt$ that is proportional to the electric voltage difference: \begin{equation} \label{Eq:I:23:15} V=RI=R\,dq/dt \end{equation} The proportionality coefficient is called the resistance $R$. This relationship may already be familiar to you; it is Ohm’s law.

If we think of the charge $q$ on a capacitor as being analogous to the displacement $x$ of a mechanical system, we see that the current, $I = dq/dt$, is analogous to velocity, $1/C$ is analogous to a spring constant $k$, and $R$ is analogous to the resistive coefficient $c=m\gamma$ in Eq. (23.6). Now it is very interesting that there exists another circuit element which is the analog of mass! This is a coil which builds up a magnetic field within itself when there is a current in it. A changing magnetic field develops in the coil a voltage that is proportional to $dI/dt$ (this is how a transformer works, in fact). The magnetic field is proportional to a current, and the induced voltage (so-called) in such a coil is proportional to the rate of change of the current: \begin{equation} \label{Eq:I:23:16} V=L\,dI/dt=L\,d^2q/dt^2. \end{equation} The coefficient $L$ is the self-inductance, and is analogous to the mass in a mechanical oscillating circuit.

2023.4.1: Mass is structure.

A structure pushing its way through electrons is equivalent to electrons passing by structures.

Suppose we make a circuit in which we have connected the three circuit elements in series (Fig. 23–5); then the voltage across the whole thing from $1$ to $2$ is the work done in carrying a charge through, and it consists of the sum of several pieces: across the inductor, $V_L = L\,d^2q/dt^2$; across the resistance, $V_R = R\,dq/dt$; across the capacitor, $V_C = q/C$. The sum of these is equal to the applied voltage, $V$: \begin{equation} \label{Eq:I:23:17} L\,d^2q/dt^2+R\,dq/dt+q/C=V(t). \end{equation} Now we see that this equation is exactly the same as the mechanical equation (23.6), and of course it can be solved in exactly the same manner. We suppose that $V(t)$ is oscillatory: we are driving the circuit with a generator with a pure sine wave oscillation. Then we can write our $V(t)$ as a complex $\hat{V}$ with the understanding that it must be ultimately multiplied by $e^{i\omega t}$, and the real part taken in order to find the true $V$. Likewise, the charge $q$ can thus be analyzed, and then in exactly the same manner as in Eq. (23.8) we write the corresponding equation: the second derivative of $q$ is $(i\omega)^2q$; the first derivative is $(i\omega)q$. Thus Eq. (23.17) translates to \begin{equation*} \biggl[L(i\omega)^2+R(i\omega)+\frac{1}{C}\biggr]\hat{q}=\hat{V} \end{equation*} or \begin{equation*} \hat{q}=\frac{\hat{V}} {L(i\omega)^2+R(i\omega)+\dfrac{1}{C}} \end{equation*} which we can write in the form \begin{equation} \label{Eq:I:23:18} \hat{q}=\hat{V}/L(\omega_0^2-\omega^2+i\gamma\omega), \end{equation} where $\omega_0^2 = 1/LC$ and $\gamma= R/L$. It is exactly the same denominator as we had in the mechanical case, with exactly the same resonance properties! The correspondence between the electrical and mechanical cases is outlined in Table 23–1 (* more tables).

| General characteristic | Mechanical property | Electrical property |
| --- | --- | --- |
| independent variable | time $(t)$ | time $(t)$ |
| dependent variable | position $(x)$ | charge $(q)$ |
| inertia | mass $(m)$ | inductance $(L)$ |
| resistance | drag coefficient $(c=\gamma m)$ | resistance $(R=\gamma L)$ |
| stiffness | stiffness $(k)$ | (capacitance)$^{-1}$ $(1/C)$ |
| resonant frequency | $\omega_0^2=k/m$ | $\omega_0^2=1/LC$ |
| period | $t_0=2\pi\sqrt{m/k}$ | $t_0=2\pi\sqrt{LC}$ |
| figure of merit | $Q=\omega_0/\gamma$ | $Q=\omega_0L/R$ |
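The electrical column of the correspondence can be turned into a small calculator. The sketch below is illustrative only: the function names and the component values (1 mH, 10 Ω, 1 nF) are assumptions, chosen so that the numbers come out round.

```python
import cmath
import math

def rlc_resonance(L, R, C):
    """Return (omega0, gamma, Q, period) for a series L-R-C circuit."""
    omega0 = 1.0 / math.sqrt(L * C)          # omega0**2 = 1 / (L C)
    gamma = R / L                            # from R = gamma * L
    Q = omega0 * L / R                       # same as omega0 / gamma
    period = 2.0 * math.pi * math.sqrt(L * C)
    return omega0, gamma, Q, period

def charge_amplitude(V_hat, L, R, C, omega):
    """q-hat from the translated circuit equation."""
    return V_hat / (L * (1j * omega) ** 2 + R * (1j * omega) + 1.0 / C)

L, R, C = 1e-3, 10.0, 1e-9                   # henries, ohms, farads (assumed)
omega0, gamma, Q, period = rlc_resonance(L, R, C)

# The same amplitude written in the mechanical form of Eq. (23.18).
omega = 0.9 * omega0
q_circuit = charge_amplitude(1.0, L, R, C, omega)
q_mechanical_form = 1.0 / (L * (omega0 ** 2 - omega ** 2 + 1j * gamma * omega))
```

For these values the resonance is at about $10^6$ rad/s with $Q$ near 100, and the two ways of writing $\hat{q}$ agree.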

We must mention a small technical point. In the electrical literature, a different notation is used. (From one field to another, the subject is not really any different, but the way of writing the notations is often different.) First, $j$ is commonly used instead of $i$ in electrical engineering, to denote $\sqrt{-1}$. (After all, $i$ must be the current!) Also, the engineers would rather have a relationship between $\hat{V}$ and $\hat{I}$ than between $\hat{V}$ and $\hat{q}$, just because they are more used to it that way. Thus, since $I= dq/dt = i\omega q$, we can just substitute $\hat{I}/i\omega$ for $\hat{q}$ and get \begin{equation} \label{Eq:I:23:19} \hat{V}=(i\omega L+R+1/i\omega C)\hat{I}=\hat{Z}\hat{I}. \end{equation} Another way is to rewrite Eq. (23.17), so that it looks more familiar; one often sees it written this way: \begin{equation} \label{Eq:I:23:20} L\,dI/dt+RI+(1/C)\int^tI\,dt=V(t). \end{equation} At any rate, we find the relation (23.19) between voltage $\hat{V}$ and current $\hat{I}$ which is just the same as (23.18) except divided by $i\omega$, and that produces Eq. (23.19). The quantity $R + i\omega L + 1/i\omega C$ is a complex number, and is used so much in electrical engineering that it has a name: it is called the complex impedance, $\hat{Z}$. Thus we can write $\hat{V}=\hat{Z}\hat{I}$. The reason that the engineers like to do this is that they learned something when they were young: $V = RI$ for resistances, when they only knew about resistances and dc. Now they have become more educated and have ac circuits, so they want the equation to look the same. Thus they write $\hat{V}=\hat{Z}\hat{I}$, the only difference being that the resistance is replaced by a more complicated thing, a complex quantity. So they insist that they cannot use what everyone else in the world uses for imaginary numbers, they have to use a $j$ for that; it is a miracle that they did not insist also that the letter $Z$ be an $R$! 
(Then they get into trouble when they talk about current densities, for which they also use $j$. The difficulties of science are to a large extent the difficulties of notations, the units, and all the other artificialities which are invented by man, not by nature.)
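The relation $\hat{V}=\hat{Z}\hat{I}$ and its link to the charge form can be checked in a few lines. This is a sketch under assumed component values and function names; Python happens to write the imaginary unit as `j`, in the engineers' style.

```python
import math

def impedance(L, R, C, omega):
    """Complex impedance Z-hat = R + i omega L + 1 / (i omega C), Eq. (23.19)."""
    return R + 1j * omega * L + 1.0 / (1j * omega * C)

def charge_amplitude(V_hat, L, R, C, omega):
    """q-hat from [L (i omega)^2 + R (i omega) + 1/C] q-hat = V-hat."""
    return V_hat / (L * (1j * omega) ** 2 + R * (1j * omega) + 1.0 / C)

L, R, C = 1e-3, 10.0, 1e-9        # illustrative henries, ohms, farads
omega0 = 1.0 / math.sqrt(L * C)
V_hat = 1.0 + 0.0j
omega = 0.8 * omega0

I_hat = V_hat / impedance(L, R, C, omega)                        # from V = Z I
I_from_q = 1j * omega * charge_amplitude(V_hat, L, R, C, omega)  # I = i omega q

Z_at_resonance = impedance(L, R, C, omega0)
```

At $\omega=\omega_0$ the inductive and capacitive terms cancel, so `Z_at_resonance` is essentially the bare resistance `R`.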

23–4 Resonance in nature

Although we have discussed the electrical case in detail, we could also bring up case after case in many fields, and show exactly how the resonance equation is the same. There are many circumstances in nature in which something is “oscillating” and in which the resonance phenomenon occurs. We said that in an earlier chapter; let us now demonstrate it. If we walk around our study, pulling books off the shelves and simply looking through them to find an example of a curve that corresponds to Fig. 23–2 and comes from the same equation, what do we find? Just to demonstrate the wide range obtained by taking the smallest possible sample, it takes only five or six books to produce quite a series of phenomena which show resonances.

The first two are from mechanics, the first on a large scale: the atmosphere of the whole earth. If the atmosphere, which we suppose surrounds the earth evenly on all sides, is pulled to one side by the moon or, rather, squashed prolate into a double tide, and if we could then let it go, it would go sloshing up and down; it is an oscillator. This oscillator is driven by the moon, which is effectively revolving about the earth; any one component of the force, say in the $x$-direction, has a cosine component, and so the response of the earth’s atmosphere to the tidal pull of the moon is that of an oscillator. The expected response of the atmosphere is shown in Fig. 23–6, curve $b$ (curve $a$ is another theoretical curve under discussion in the book from which this is taken out of context). Now one might think that we only have one point on this resonance curve, since we only have the one frequency, corresponding to the rotation of the earth under the moon, which occurs at a period of $12.42$ hours—$12$ hours for the earth (the tide is a double bump), plus a little more because the moon is going around. But from the size of the atmospheric tides, and from the phase, the amount of delay, we can get both $\rho$ and $\theta$. From those we can get $\omega_0$ and $\gamma$, and thus draw the entire curve! This is an example of very poor science. From two numbers we obtain two numbers, and from those two numbers we draw a beautiful curve, which of course goes through the very point that determined the curve! It is of no use unless we can measure something else, and in the case of geophysics that is often very difficult. But in this particular case there is another thing which we can show theoretically must have the same timing as the natural frequency $\omega_0$: that is, if someone disturbed the atmosphere, it would oscillate with the frequency $\omega_0$. 
Now there was such a sharp disturbance in 1883; the Krakatoa volcano exploded and half the island blew off, and it made such a terrific explosion in the atmosphere that the period of oscillation of the atmosphere could be measured. It came out to $10\tfrac{1}{2}$ hours. The $\omega_0$ obtained from Fig. 23–6 comes out $10$ hours and $20$ minutes, so there we have at least one check on the reality of our understanding of the atmospheric tides.
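The step "from two numbers we obtain two numbers" can be made concrete with the formulas of Section 23–2. The sketch below inverts $1/R=(1/\rho)e^{-i\theta}=m(\omega_0^2-\omega^2+i\gamma\omega)$ for $\omega_0$ and $\gamma$ at a single driving frequency. It is not the procedure of the book from which the figure was taken: the function name, the assumption that the effective $m$ is known, and the synthetic input values are all hypothetical.

```python
import cmath
import math

def fit_from_single_point(rho, theta, omega, m=1.0):
    """Recover (omega0, gamma) from rho and theta measured at one frequency."""
    inv_R = cmath.exp(-1j * theta) / rho       # equals m (omega0^2 - omega^2 + i gamma omega)
    omega0_sq = omega ** 2 + inv_R.real / m
    gamma = inv_R.imag / (m * omega)
    return math.sqrt(omega0_sq), gamma

# Synthetic round trip: generate rho and theta from known values, then invert.
omega0_true, gamma_true, omega, m = 1.2, 0.3, 1.0, 1.0
R = 1.0 / (m * (omega0_true ** 2 - omega ** 2 + 1j * gamma_true * omega))
rho, theta = abs(R), cmath.phase(R)
omega0_fit, gamma_fit = fit_from_single_point(rho, theta, omega, m)
```

The round trip returns the values that were put in, which is the point of the criticism above: the fitted curve necessarily passes through the one measured point, so an independent measurement of $\omega_0$ is needed as a check.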

Next we go to the small scale of mechanical oscillation. This time we take a sodium chloride crystal, which has sodium ions and chlorine ions next to each other, as we described in an early chapter. These ions are electrically charged, alternately plus and minus. Now there is an interesting oscillation possible. Suppose that we could drive all the plus charges to the right and all the negative charges to the left, and let go; they would then oscillate back and forth, the sodium lattice against the chlorine lattice. How can we ever drive such a thing? That is easy, for if we apply an electric field on the crystal, it will push the plus charge one way and the minus charge the other way! So, by having an external electric field we can perhaps get the crystal to oscillate. The frequency of the electric field needed is so high, however, that it corresponds to infrared radiation! So we try to find a resonance curve by measuring the absorption of infrared light by sodium chloride. Such a curve is shown in Fig. 23–7. The abscissa is not frequency, but is given in terms of wavelength, but that is just a technical matter, of course, since for a wave there is a definite relation between frequency and wavelength; so it is really a frequency scale, and a certain frequency corresponds to the resonant frequency.

But what about the width? What determines the width? There are many cases in which the width that is seen on the curve is not really the natural width $\gamma$ that one would have theoretically. There are two reasons why there can be a wider curve than the theoretical curve. If the objects do not all have the same frequency, as might happen if the crystal were strained in certain regions, so that in those regions the oscillation frequency were slightly different than in other regions, then what we have is many resonance curves on top of each other; so we apparently get a wider curve. The other kind of width is simply this: perhaps we cannot measure the frequency precisely enough. If we open the slit of the spectrometer fairly wide, so that although we thought we had only one frequency we actually had a certain range $\Delta\omega$, then we may not have the resolving power needed to see a narrow curve. Offhand, we cannot say whether the width in Fig. 23–7 is natural, or whether it is due to inhomogeneities in the crystal or the finite width of the slit of the spectrometer.
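Both kinds of broadening can be imitated numerically. The sketch below uses the $\rho^2$ curve from earlier in the chapter with $m = 1$. It averages many curves with slightly different $\omega_0$ (the strained crystal), and separately averages one curve over a window $\Delta\omega$ (the wide slit). Every number, and the helper names, are assumptions chosen for illustration.

```python
def rho_squared(omega, omega0, gamma, m=1.0):
    """rho^2 = 1 / (m^2 [(omega0^2 - omega^2)^2 + gamma^2 omega^2])."""
    return 1.0 / (m**2 * ((omega0**2 - omega**2)**2 + (gamma * omega)**2))

def full_width_half_max(curve, omegas):
    """Distance between the outermost grid points at or above half the peak."""
    peak = max(curve)
    above = [w for w, y in zip(omegas, curve) if y >= 0.5 * peak]
    return above[-1] - above[0]

def slit_average(curve, half_window):
    """Boxcar average over +/- half_window grid points (a crude wide slit)."""
    n = len(curve)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_window), min(n, i + half_window + 1)
        out.append(sum(curve[lo:hi]) / (hi - lo))
    return out

omegas = [0.8 + 0.0005 * k for k in range(801)]   # grid from 0.8 to 1.2
gamma = 0.01                                      # natural width (made up)

# One oscillator with its natural width.
single = [rho_squared(w, 1.0, gamma) for w in omegas]

# Strained crystal: eleven regions with omega0 spread from 0.98 to 1.02.
centers = [0.98 + 0.004 * k for k in range(11)]
strained = [sum(rho_squared(w, c, gamma) for c in centers) / len(centers)
            for w in omegas]

# Wide slit: each reading averages the single curve over about +/- 0.01.
smeared = slit_average(single, 20)

natural_width = full_width_half_max(single, omegas)
strained_width = full_width_half_max(strained, omegas)
smeared_width = full_width_half_max(smeared, omegas)
```

In this toy case both the strained and the smeared curves come out several times wider than the natural width, and nothing in the shape of a single measured curve tells us which mechanism is responsible.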

Now we turn to a more esoteric example, and that is the swinging of a magnet. If we have a magnet, with north and south poles, in a constant magnetic field, the N end of the magnet will be pulled one way and the S end the other way, and there will in general be a torque on it, so it will vibrate about its equilibrium position, like a compass needle. However, the magnets we are talking about are atoms. Because these atoms have an angular momentum, the torque does not produce a simple motion in the direction of the field, but instead, of course, a precession. Now, looked at from the side, any one component is “swinging,” and we can disturb or drive that swinging and measure an absorption. The curve in Fig. 23–8 represents a typical such resonance curve. What has been done here is slightly different technically. The frequency of the lateral field that is used to drive this swinging is always kept the same, while we would have expected that the investigators would vary that and plot the curve. They could have done it that way, but technically it was easier for them to leave the frequency $\omega$ fixed, and change the strength of the constant magnetic field, which corresponds to changing $\omega_0$ in our formula. They have plotted the resonance curve against $\omega_0$. Anyway, this is a typical resonance with a certain $\omega_0$ and $\gamma$.
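Sweeping $\omega_0$ at fixed $\omega$ gives a resonance curve just as sweeping $\omega$ does. The sketch below holds $\omega$ fixed in the chapter's $\rho^2$ formula and varies $\omega_0$. It assumes $m = 1$ and that $\gamma$ does not change with the field, and it uses $\rho^2$ as a stand-in for the measured absorption; all numbers are illustrative.

```python
def rho_squared(omega, omega0, gamma, m=1.0):
    """rho^2 = 1 / (m^2 [(omega0^2 - omega^2)^2 + gamma^2 omega^2])."""
    return 1.0 / (m**2 * ((omega0**2 - omega**2)**2 + (gamma * omega)**2))

omega_drive = 1.0   # fixed frequency of the lateral driving field (made up)
gamma = 0.05        # assumed independent of the constant field

# Changing the constant field corresponds to sweeping omega0.
omega0_values = [0.5 + 0.001 * k for k in range(1001)]   # 0.5 to 1.5
absorption = [rho_squared(omega_drive, w0, gamma) for w0 in omega0_values]

peak_omega0 = omega0_values[absorption.index(max(absorption))]
```

With $\omega$ fixed, the term $\gamma^2\omega^2$ is a constant, so the maximum falls exactly where $\omega_0 = \omega$. The peak of the swept-field curve therefore tells us the field at which the natural frequency matches the drive.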

Now we go still further. Our next example has to do with atomic nuclei. The motions of protons and neutrons in nuclei are oscillatory in certain ways, and we can demonstrate this by the following experiment. We bombard a lithium atom with protons, and we discover that a certain reaction, producing $\gamma$-rays, actually has a very sharp maximum typical of resonance. We note in Fig. 23–9, however, one difference from other cases: the horizontal scale is not a frequency, it is an energy! The reason is that in quantum mechanics what we think of classically as the energy will turn out to be really related to a frequency of a wave amplitude. When we analyze something which in simple large-scale physics has to do with a frequency, we find that when we do quantum-mechanical experiments with atomic matter, we get the corresponding curve as a function of energy. In fact, this curve is a demonstration of this relationship, in a sense. It shows that frequency and energy have some deep interrelationship, which of course they do.

Now we turn to another example which also involves a nuclear energy level, but now a much, much narrower one. The $\omega_0$ in Fig. 23–10 corresponds to an energy of $100{,}000$ electron volts, while the width $\gamma$ is approximately $10^{-5}$ electron volt; in other words, this has a $Q$ of $10^{10}$! When this curve was measured it was the largest $Q$ of any oscillator that had ever been measured. It was measured by Dr. Mössbauer, and it was the basis of his Nobel prize. The horizontal scale here is velocity, because the technique for obtaining the slightly different frequencies was to use the Doppler effect, by moving the source relative to the absorber. One can see how delicate the experiment is when we realize that the speed involved is a few centimeters per second! On the actual scale of the figure, zero frequency would correspond to a point about $10^{10}$ cm to the left—slightly off the paper!
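The numbers quoted here can be checked with a little arithmetic. The sketch below takes $Q = \omega_0/\gamma$, which equals the ratio of the resonance energy to its width, and uses the first-order Doppler relation $\Delta\omega/\omega = v/c$ to find the speed that shifts the frequency by one width. The variable names are our own.

```python
C_CM_PER_S = 2.99792458e10   # speed of light, cm/s

energy_ev = 1.0e5    # resonance energy quoted in the text, electron volts
width_ev = 1.0e-5    # width quoted in the text, electron volts

# Q = omega0 / gamma; the ratio is the same whether expressed in energy or frequency.
q_factor = energy_ev / width_ev

# First-order Doppler shift: delta_omega / omega = v / c.
# Speed needed to shift the frequency by one full width:
velocity_for_one_width = C_CM_PER_S / q_factor   # cm/s
```

The result is a $Q$ of $10^{10}$ and a speed of about $3$ cm/sec, which is why moving the source at a few centimeters per second is enough to trace out the whole curve.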

Finally, if we look in an issue of the Physical Review, say that of January 1, 1962, will we find a resonance curve? Every issue has a resonance curve, and Fig. 23–11 is the resonance curve for this one. This resonance curve turns out to be very interesting. It is the resonance found in a certain reaction among strange particles, a reaction in which a K$^-$ and a proton interact. The resonance is detected by seeing how many of some kinds of particles come out, and depending on what and how many come out, one gets different curves, but of the same shape and with the peak at the same energy. We thus determine that there is a resonance at a certain energy for the K$^-$ meson. That presumably means that there is some kind of a state, or condition, corresponding to this resonance, which can be attained by putting together a K$^-$ and a proton. This is a new particle, or resonance. Today we do not know whether to call a bump like this a “particle” or simply a resonance. When there is a very sharp resonance, it corresponds to a very definite energy, just as though there were a particle of that energy present in nature. When the resonance gets wider, then we do not know whether to say there is a particle which does not last very long, or simply a resonance in the reaction probability. In the second chapter, this point is made about the particles, but when the second chapter was written this resonance was not known, so our chart should now have still another particle in it!