40 The Principles of Statistical Mechanics

40–1 The exponential atmosphere

We have discussed some of the properties of large numbers of intercolliding atoms. The subject is called kinetic theory, a description of matter from the point of view of collisions between the atoms. Fundamentally, we assert that the gross properties of matter should be explainable in terms of the motion of its parts.

We limit ourselves for the present to conditions of thermal equilibrium, that is, to a subclass of all the phenomena of nature. The laws of mechanics which apply just to thermal equilibrium are called statistical mechanics, and in this section we want to become acquainted with some of the central theorems of this subject.

We already have one of the theorems of statistical mechanics, namely, the mean value of the kinetic energy for any motion at the absolute temperature $T$ is $\tfrac{1}{2}kT$ for each independent motion, i.e., for each degree of freedom. That tells us something about the mean square velocities of the atoms. Our objective now is to learn more about the positions of the atoms, to discover how many of them are going to be in different places at thermal equilibrium, and also to go into a little more detail on the distribution of the velocities. Although we have the mean square velocity, we do not know how to answer a question such as how many of them are going three times faster than the root mean square, or how many of them are going one-quarter of the root mean square speed. Or have they all the same speed exactly?

So, these are the two questions that we shall try to answer: How are the molecules distributed in space when there are forces acting on them, and how are they distributed in velocity?

It turns out that the two questions are completely independent, and that the distribution of velocities is always the same. We already received a hint of the latter fact when we found that the average kinetic energy is the same, $\tfrac{1}{2}kT$ per degree of freedom, no matter what forces are acting on the molecules. The distribution of the velocities of the molecules is independent of the forces, because the collision rates do not depend upon the forces. (See: Kinetic energy of gas.)

Let us begin with an example: the distribution of the molecules in an atmosphere like our own, but without the winds and other kinds of disturbance. Suppose that we have a column of gas extending to a great height, and at thermal equilibrium—unlike our atmosphere, which as we know gets colder as we go up. We could remark that if the temperature differed at different heights, we could demonstrate lack of equilibrium by connecting a rod to some balls at the bottom (Fig. 40–1), where they would pick up $\tfrac{1}{2}kT$ from the molecules there and would shake, via the rod, the balls at the top and those would shake the molecules at the top. So, ultimately, of course, the temperature becomes the same at all heights in a gravitational field.

If the temperature is the same at all heights, the problem is to discover by what law the atmosphere becomes tenuous as we go up. If $N$ is the total number of molecules in a volume $V$ of gas at pressure $P$, then we know $PV = NkT$, or $P = nkT$, where $n = N/V$ is the number of molecules per unit volume. In other words, if we know the number of molecules per unit volume, we know the pressure, and vice versa: they are proportional to each other, since the temperature is constant in this problem. But the pressure is not constant, it must increase as the altitude is reduced, because it has to hold, so to speak, the weight of all the gas above it. That is the clue by which we may determine how the pressure changes with height. If we take a unit area at height $h$, then the vertical force from below, on this unit area, is the pressure $P$. The vertical force per unit area pushing down at a height $h + dh$ would be the same, in the absence of gravity, but here it is not, because the force from below must exceed the force from above by the weight of gas in the section between $h$ and $h + dh$. Now $mg$ is the force of gravity on each molecule, where $g$ is the acceleration due to gravity, and $n\,dh$ is the total number of molecules in the unit section. So this gives us the differential equation $P_{h + dh} - P_h =$ $\underline{dP = -mgn\,dh}$. Since $P = nkT$, and $T$ is constant, we can eliminate either $P$ or $n$, say $P$, and get \begin{equation*} \ddt{n}{h} = -\frac{mg}{kT}\,n \end{equation*} for the differential equation, which tells us how the density goes down as we go up in energy.

We thus have an equation for the particle density $n$, which varies with height, but which has a derivative which is proportional to itself. Now a function which has a derivative proportional to itself is an exponential, and the solution of this differential equation is \begin{equation} \label{Eq:I:40:1} n = n_0e^{-mgh/kT}. \end{equation} Here the constant of integration, $n_0$, is obviously the density at $h = 0$ (which can be chosen anywhere), and the density goes down exponentially with height.
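As a rough numerical check of Eq. (40.1), the sketch below integrates the differential equation step by step and compares the result with the exponential. The choice of nitrogen (taken as 28 atomic mass units), the temperature of 273 K, the height of 8 km, and the helper names are illustrative assumptions, not values from the text.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
G = 9.81                  # acceleration due to gravity, m/s^2 (assumed uniform)
AMU = 1.66053906660e-27   # atomic mass unit, kg

def density_ratio_exact(m, h, T):
    """n(h)/n_0 from Eq. (40.1)."""
    return math.exp(-m * G * h / (K_B * T))

def density_ratio_numeric(m, h, T, steps=100_000):
    """Integrate dn/dh = -(m g / kT) n with small forward-Euler steps."""
    dh = h / steps
    n = 1.0  # n_0 normalized to 1 at h = 0
    for _ in range(steps):
        n += -(m * G / (K_B * T)) * n * dh
    return n

m_n2 = 28.0 * AMU   # illustrative molecule: nitrogen (assumed mass)
T = 273.0           # illustrative temperature, K
h = 8000.0          # illustrative height, m

exact = density_ratio_exact(m_n2, h, T)
numeric = density_ratio_numeric(m_n2, h, T)
print(f"exact n/n0 = {exact:.4f}, stepwise n/n0 = {numeric:.4f}")
```

With these assumed numbers the two results should agree closely, which is just the statement that a function whose derivative is proportional to itself is an exponential.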

Note that if we have different kinds of molecules with different masses, they go down with different exponentials. The ones which were heavier would decrease with altitude faster than the light ones. Therefore we would expect that because oxygen is heavier than nitrogen, as we go higher and higher in an atmosphere with nitrogen and oxygen the proportion of nitrogen would increase.
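To make the mass dependence concrete, here is a minimal sketch comparing two species in an idealized isothermal column. The masses (28 and 32 atomic mass units), the temperature, and the function names are assumptions chosen for illustration. The quantity $kT/mg$ is the height over which the density falls by a factor of $e$.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
G = 9.81                  # acceleration due to gravity, m/s^2
AMU = 1.66053906660e-27   # atomic mass unit, kg

M_N2 = 28.0 * AMU  # assumed mass of a nitrogen molecule
M_O2 = 32.0 * AMU  # assumed mass of an oxygen molecule

def scale_height(m, T):
    """Height kT/(mg) over which the density drops by a factor e."""
    return K_B * T / (m * G)

def relative_density(m, h, T):
    """n(h)/n_0 from Eq. (40.1)."""
    return math.exp(-h / scale_height(m, T))

def n2_to_o2_enhancement(h, T):
    """Factor by which the N2/O2 number ratio at height h exceeds its value at h = 0."""
    return relative_density(M_N2, h, T) / relative_density(M_O2, h, T)

T = 273.0  # illustrative temperature, K
for h in (0.0, 10_000.0, 50_000.0):
    print(f"h = {h:8.0f} m: N2/O2 ratio enhanced by {n2_to_o2_enhancement(h, T):.3f}")
```

The enhancement grows with height because the heavier species has the shorter scale height. This applies only to the undisturbed isothermal column assumed here.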

2023.9.9: Everything is linear, so... although it is not stated explicitly, the superposition principle is being applied to substances of different mass.

This does not really happen in our own atmosphere, at least at reasonable heights, because there is so much agitation which mixes the gases back together again. It is not an isothermal atmosphere. Nevertheless, there is a tendency for lighter materials, like hydrogen, to dominate at very great heights in the atmosphere, because the lowest masses continue to exist, while the other exponentials have all died out (Fig. 40–2).

40–2 The Boltzmann law

Here we note the interesting fact that the numerator in the exponent of Eq. (40.1) is the potential energy of an atom. Therefore we can also state this particular law as: the density at any point is proportional to \begin{equation*} e^{-\text{the potential energy of each atom}/kT}. \end{equation*}

That may be an accident, i.e., may be true only for this particular case of a uniform gravitational field. However, we can show that it is a more general proposition. Suppose that there were some kind of force other than gravity acting on the molecules in a gas. For example, the molecules may be charged electrically, and may be acted on by an electric field or another charge that attracts them. Or, because of the mutual attractions of the atoms for each other, or for the wall, or for a solid, or something, there is some force of attraction which varies with position and which acts on all the molecules. Now suppose, for simplicity, that the molecules are all the same, and that the force acts on each individual one, so that the total force on a piece of gas would be simply the number of molecules times the force on each one. To avoid unnecessary complication, let us choose a coordinate system with the $x$-axis in the direction of the force, $\FLPF$.

In the same manner as before, if we take two parallel planes in the gas, separated by a distance $dx$, then the force on each atom, times the $n$ atoms per cm³ (the generalization of the previous $nmg$), times $dx$, must be balanced by the pressure change: $Fn\,dx = dP = kT\,dn$. Or, to put this law in a form which will be useful to us later, \begin{equation} \label{Eq:I:40:2} F = kT\,\ddt{}{x}\,(\ln n). \end{equation} For the present, observe that $-F\,dx$ is the work we would do in taking a molecule from $x$ to $x + dx$, and if $F$ comes from a potential, i.e., if the work done can be represented by a potential energy at all, then this would also be the difference in the potential energy (P.E.). The negative differential of potential energy is the work done, $F\,dx$, and we find that $d(\ln n) = -d(\text{P.E.})/kT$, or, after integrating, \begin{equation} \label{Eq:I:40:3} n = (\text{constant})e^{-\text{P.E.}/kT}. \end{equation} Therefore what we noticed in a special case turns out to be true in general. (What if $F$ does not come from a potential? Then (40.2) has no solution at all. Energy can be generated, or lost by the atoms running around in cyclic paths for which the work done is not zero, and no equilibrium can be maintained at all. Thermal equilibrium cannot exist if the external forces on the atoms are not conservative.)
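The step from Eq. (40.2) to Eq. (40.3) can be checked numerically. The sketch below picks an arbitrary smooth potential (a hypothetical one, chosen only for the demonstration), builds the density from the Boltzmann law, and confirms that $kT\,d(\ln n)/dx$ reproduces the force. Units with $kT = 1$ and all function names are assumptions.

```python
import math

KT = 1.0  # work in units where kT = 1 (assumption for illustration)

def potential_energy(x):
    """A hypothetical smooth potential, chosen only for this demonstration."""
    return 0.5 * x**2 + 0.1 * x**4

def force(x):
    """F = -d(P.E.)/dx for the potential above."""
    return -(x + 0.4 * x**3)

def density(x):
    """Boltzmann law, Eq. (40.3), with the constant set to 1."""
    return math.exp(-potential_energy(x) / KT)

def force_from_density(x, dx=1e-5):
    """Right-hand side of Eq. (40.2): kT d(ln n)/dx by central difference."""
    return KT * (math.log(density(x + dx)) - math.log(density(x - dx))) / (2 * dx)

checks = [(x, force(x), force_from_density(x)) for x in (-1.5, -0.3, 0.0, 0.7, 2.0)]
for x, f_direct, f_density in checks:
    print(f"x = {x:5.2f}: F = {f_direct:9.5f}, kT d(ln n)/dx = {f_density:9.5f}")
```

The two columns should agree to within the finite-difference error for any potential substituted here, since the argument never used the particular form of the force.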

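2025.5.29: To summarize,

1. The amount of ki (기) at each position is the potential, coming and going through the surroundings or through higher dimensions

2. The amount of ki that is ceaselessly passed back and forth at equilibrium is defined as the temperature

(1) 5.24: Equilibrium means that, with everything jostling around any given point, no place is an easier target than another: thermal equilibrium

There must be no difference in energy/ki after going all the way around and coming back; that is what "cyclic path" expresses. $F\,dx$

(2) Temperature expresses the degree of jostling at each point in that equilibrium state

3. The motion of ki. 5.6: a measure of the amount of ki that piles up as it burrows in, $\frac{E}{kT}= E / \hbar$

(1) The Boltzmann law expresses how, among the ki jostling about in three dimensions, some keeps piling up in one direction and moves to a place of higher potential, having been supplied with $kT$ a net total of $\frac{\Delta E}{kT}$ times, where $\Delta E$ = potential difference

The probability of ki piling up is a product, and the exponential is what appears once the accompanying change in density/number is factored in

(2) Advancing into higher dimensions corresponds, in mathematical terms, to attaching an $i$; $E$ is a kind of size of the structure being built, and the unit is $\hbar$; once a unit amount is filled, it returns to three dimensions

2021.5.30: A thought: it probably depends on topology rather than on simple distance, since what matters is the bundle of ki, the energy, that it took to reach that position.

See also: quantum numbers. The periodic table, the distribution of stars, and perhaps even limits on the growth of living organisms?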

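$\ddt{n}{h} = -\frac{mg}{kT}\,n$ holds locally. The general expression is $\ddt{f(x)}{x}= -\frac{1}{kT(x)}\,\ddt{P(x)}{x}\,f(x)$, where $f(x)$ = density, $P(x)$ = potential energy, and $T(x)$ = temperature at $x$; equivalently, $\ddt{\ln f(x)}{x}=-\frac{1}{kT(x)}\,\ddt{P(x)}{x}$. (For gravity, $P = mgh$, so $\ddt{P}{h} = mg$.)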

potential, kinetic energy <=> what one is born with and effort

This, then, could tell us the distribution of molecules: Suppose that we had a positive ion in a liquid, attracting negative ions around it, how many of them would be at different distances? If the potential energy is known as a function of distance, then the proportion of them at different distances is given by this law, and so on, through many applications.

40–3 Evaporation of a liquid

In more advanced statistical mechanics one tries to solve the following important problem. Consider an assembly of molecules which attract each other, and suppose that the force between any two, say $i$ and $j$, depends only on their separation $r_{ij}$, and can be represented as the derivative of a potential function $V(r_{ij})$. Figure 40–3 shows a form such a function might have. For $r > r_0$, the energy decreases as the molecules come together, because they attract, and then the energy increases very sharply as they come still closer together, because they repel strongly, which is characteristic of the way molecules behave, roughly speaking.

Now suppose we have a whole box full of such molecules, and we would like to know how they arrange themselves on the average. The answer is $e^{-\text{P.E.}/kT}$. The total potential energy in this case would be the sum over all the pairs, supposing that the forces are all in pairs (there may be three-body forces in more complicated things, but in electricity, for example, the potential energy is all in pairs). Then the probability for finding molecules in any particular combination of $r_{ij}$’s will be proportional to \begin{equation*} \exp\Bigl[-\sum_{i,j}V(r_{ij})/kT\Bigr]. \end{equation*}
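A minimal sketch of how such a weight could be evaluated for a given arrangement follows. The Lennard-Jones form is used purely as a stand-in for the curve of Fig. 40–3 (the text does not specify a formula), and the units, parameter values, and function names are assumptions. Each pair is counted once in the sum.

```python
import math
from itertools import combinations

EPSILON = 1.0               # depth of the potential well (assumed energy unit)
SIGMA = 1.0                 # length scale of the potential (assumed)
R0 = 2 ** (1 / 6) * SIGMA   # separation at the minimum of this stand-in potential

def pair_potential(r):
    """Lennard-Jones form, used here only as a stand-in for Fig. 40-3."""
    s6 = (SIGMA / r) ** 6
    return 4 * EPSILON * (s6 * s6 - s6)

def total_potential_energy(positions):
    """Sum V(r_ij) over all distinct pairs, each pair counted once."""
    return sum(pair_potential(math.dist(a, b)) for a, b in combinations(positions, 2))

def boltzmann_weight(positions, kT):
    """Unnormalized relative probability exp(-sum V(r_ij) / kT)."""
    return math.exp(-total_potential_energy(positions) / kT)

# Three molecules at the preferred spacing versus three far apart.
triangle = [(0.0, 0.0, 0.0), (R0, 0.0, 0.0), (R0 / 2, R0 * math.sqrt(3) / 2, 0.0)]
spread_out = [(0.0, 0.0, 0.0), (50.0, 0.0, 0.0), (0.0, 50.0, 0.0)]

relative = boltzmann_weight(triangle, kT=1.0) / boltzmann_weight(spread_out, kT=1.0)
print(f"compact triangle is favored by a factor of about {relative:.1f} at kT = epsilon")
```

Only ratios of such weights are meaningful here, since the overall normalization would require summing over every possible arrangement.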

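2025.5.25: The ki (기) interaction between electrons is over all pairs... that is, superposition... Since each pair is an event independent of the other pairs, its mathematical, probabilistic expression is the product of the individual probabilities. Naturally, the exponent then has to appear as a sum.

What follows is nonsense I wrote a few years ago, but I am leaving it here for the time being.

* The relation derived above, $\ddt{f(x)}{x}= -\frac{P(x)}{kT(x)}\,f(x)$

$\Rightarrow \frac{df(x)}{f(x)}=d\ln f(x)= -\frac{P(x)}{kT(x)}\,dx \Rightarrow \ln f(x) = -\int\frac{P(x)}{kT(x)}\,dx \Rightarrow f(x)=\exp\Bigl[-\int\frac{P(x)}{kT(x)}\,dx\Bigr]$

Considering this relation as a sum over a bounded region,

we get $f(x)=\exp\Bigl[-\int_{V}\frac{P(x)}{kT(x)}\,dV\Bigr]$; but the expression above considers only one direction, namely particles moving away from the earth, and when summing (integrating) the opposite direction is also counted, so one must divide by 2; and since these are point particles, the integral must be expressed with a Dirac measure, so

$\Rightarrow f(x)=\frac12 \exp\Bigl[-\int\frac{P(x)}{kT(x)}\,dx\Bigr]= \exp\Bigl[-\sum_{(i,j)}V(r_{ij})/kT\Bigr]$ (21.6.16)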

Now, if the temperature is very high, so that $kT \gg \abs{V(r_0)}$, the exponent is relatively small almost everywhere, and the probability of finding a molecule is almost independent of position. Let us take the case of just two molecules: the $e^{-\text{P.E.}/kT}$ would be the probability of finding them at various mutual distances $r$. Clearly, where the potential goes most negative, the probability is largest, and where the potential goes toward infinity, the probability is almost zero, which occurs for very small distances. That means that for such atoms in a gas, there is no chance that they are on top of each other, since they repel so strongly. But there is a greater chance of finding them per unit volume at the point $r_0$ than at any other point. How much greater, depends on the temperature. If the temperature is very large compared with the difference in energy between $r = r_0$ and $r = \infty$, the exponential is always nearly unity. In this case, where the mean kinetic energy (about $kT$) greatly exceeds the potential energy, the forces do not make much difference. But as the temperature falls, the probability of finding the molecules at the preferred distance $r_0$ gradually increases relative to the probability of finding them elsewhere and, in fact, if $kT$ is much less than $\abs{V(r_0)}$, we have a relatively large positive exponent in that neighborhood. In other words, in a given volume they are much more likely to be at the distance of minimum energy than far apart. As the temperature falls, the atoms fall together, clump in lumps, and reduce to liquids, and solids, and molecules, and as you heat them up they evaporate.
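The temperature dependence described above can be illustrated with a short calculation for two molecules. As before, the Lennard-Jones form is only an assumed stand-in for the curve of Fig. 40–3, and the units and names are hypothetical. The function returns the probability per unit volume at separation $r$ relative to that at very large separation, where the potential is zero.

```python
import math

EPSILON = 1.0               # well depth |V(r0)| in assumed energy units
SIGMA = 1.0                 # length scale (assumed)
R0 = 2 ** (1 / 6) * SIGMA   # position of the minimum of this stand-in potential

def pair_potential(r):
    """Lennard-Jones form, an assumed stand-in for the curve of Fig. 40-3."""
    s6 = (SIGMA / r) ** 6
    return 4 * EPSILON * (s6 * s6 - s6)

def relative_probability(r, kT):
    """Probability per unit volume at separation r, relative to r -> infinity."""
    return math.exp(-pair_potential(r) / kT)

hot = relative_probability(R0, kT=100 * EPSILON)        # kT >> |V(r0)|
cold = relative_probability(R0, kT=0.1 * EPSILON)       # kT << |V(r0)|
overlap = relative_probability(0.8 * SIGMA, kT=EPSILON) # deep in the repulsive core

print(f"hot gas, at r0:        {hot:.4f}")
print(f"cold gas, at r0:       {cold:.3e}")
print(f"strongly overlapping:  {overlap:.3e}")
```

In the hot case the factor is close to unity, so the forces hardly matter; in the cold case the preferred distance is overwhelmingly favored; and in the repulsive core the factor is essentially zero at any of these temperatures.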

The requirements for the determination of exactly how things evaporate, exactly how things should happen in a given circumstance, involve the following. First, to discover the correct molecular-force law $V(r)$, which must come from something else, quantum mechanics, say, or experiment. But, given the law of force between the molecules, to discover what a billion molecules are going to do merely consists of studying the function $e^{-\sum V_{ij}/kT}$. Surprisingly enough, since it is such a simple function and such an easy idea, given the potential, the labor is enormously complicated; the difficulty is the tremendous number of variables.

In spite of such difficulties, the subject is quite exciting and interesting. It is often called an example of a “many-body problem,” and it really has been a very interesting thing. In that single formula must be contained all the details, for example, about the solidification of gas, or the forms of the crystals that the solid can take, and people have been trying to squeeze it out, but the mathematical difficulties are very great, not in writing the law, but in dealing with so enormous a number of variables.

That then, is the distribution of particles in space. That is the end of classical statistical mechanics, practically speaking, because if we know the forces, we can, in principle, find the distribution in space, and the distribution of velocities is something that we can work out once and for all, and is not something that is different for the different cases. The great problems are in getting particular information out of our formal solution, and that is the main subject of classical statistical mechanics.

40–4 The distribution of molecular speeds

Now we go on to discuss the distribution of velocities, because sometimes it is interesting or useful to know how many of them are moving at different speeds. In order to do that, we may make use of the facts which we discovered with regard to the gas in the atmosphere. We take it to be a perfect gas, as we have already assumed in writing the potential energy, disregarding the energy of mutual attraction of the atoms. The only potential energy that we included in our first example was gravity. We would, of course, have something more complicated if there were forces between the atoms. Thus we assume that there are no forces between the atoms and, for a moment, disregard collisions also, returning later to the justification of this. Now we saw that there are fewer molecules at the height $h$ than there are at the height $0$; according to formula (40.1), they decrease exponentially with height. How can there be fewer at greater heights? After all, do not all the molecules which are moving up at height $0$ arrive at $h$? No!, because some of those which are moving up at $0$ are going too slowly, and cannot climb the potential hill to $h$. With that clue, we can calculate how many must be moving at various speeds, because from (40.1) we know how many are moving with less than enough speed to climb a given distance $h$. Those are just the ones that account for the fact that the density at $h$ is lower than at $0$.

Now let us put that idea a little more precisely: let us count how many molecules are passing from below to above the plane $h = 0$ (by calling it height${} = 0$, we do not mean that there is a floor there; it is just a convenient label, and there is gas at negative $h$). These gas molecules are moving around in every direction, but some of them are moving through the plane, and at any moment a certain number per second of them are passing through the plane from below to above with different velocities. Now we note the following: if we call $u$ the velocity which is just needed to get up to the height $h$ (kinetic energy $mu^2/2 = mgh$), then the number of molecules per second which are passing upward through the lower plane in a vertical direction with velocity component greater than $u$ is exactly the same as the number which pass through the upper plane with any upward velocity. Those molecules whose vertical velocity does not exceed $u$ cannot get through the upper plane. So therefore we see that \begin{equation*} \text{Number passing }h = 0 \text{ with }v_z > u = \text{number passing }h = h \text{ with }v_z > 0. \end{equation*} But the number which pass through $h$ with any velocity greater than $0$ is less than the number which pass through the lower height with any velocity greater than $0$, because the number of atoms is greater; that is all we need. We know already that the distribution of velocities is the same, after the argument we made earlier about the temperature being constant all the way through the atmosphere.

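2023.9.12: In an equilibrium state at uniform temperature, what is given equals what is received. If molecules above a certain speed go up, there are also some that come down.

10.18: This is the state of equal temperature put into physical terms... What does it mean to connect the bottom and the top in order to make the temperature the same? Is it not simply making the velocity distribution of the molecules spread out evenly?

To give a definition suitable for mathematical simulation... Definition of thermal equilibrium: the velocity distribution of the vibrating/fluctuating molecules, or more precisely the distribution of the directions and magnitudes of their motions, is the same everywhere.

Do not forget that mass is used only because it is tangible; the essential thing is motion = ki (기).

2023.9.12: Once it is established that the velocity distribution is the same, the result follows from the fact that the number of molecules decreases in the ratio $e^{-mg\,(\text{height difference})/kT}$.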

Write $n_{> u}(h)$ for the number of molecules per unit area per second passing upward through the plane at height $h$ with a $z$-component of velocity greater than $u$. Since the velocity distribution is the same at both heights and only the density differs, the upward fluxes are in the same ratio as the densities: $n_{> 0}(h)/n_{> 0}(0) = e^{-mgh/kT}$. But $n_{> 0}(h) = n_{> u}(0)$, and therefore we find that \begin{equation*} \frac{n_{> u}(0)}{n_{> 0}(0)} = e^{-mgh/kT} = e^{-mu^2/2kT}, \end{equation*} since $\tfrac{1}{2}mu^2 = mgh$. Thus, in words, the number of molecules per unit area per second passing the height $0$ with a $z$-component of velocity greater than $u$ is $e^{-mu^2/2kT}$ times the total number that are passing through the plane with velocity greater than zero. Now this is not only true at the arbitrarily chosen height $0$, but of course it is true at any other height (* thanks to the property $\exp(x+y)=\exp x\cdot\exp y$), and thus the distributions of velocities are all the same! (The final statement does not involve the height $h$, which appeared only in the intermediate argument.) The result is a general proposition that gives us the distribution of velocities. It tells us that if we drill a little hole in the side of a gas pipe, a very tiny hole, so that the collisions are few and far between, i.e., are farther apart than the diameter of the hole, then the particles which are coming out will have different velocities, but the fraction of particles which come out at a velocity greater than $u$ is $e^{-mu^2/2kT}$.
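For a feeling of the numbers, the sketch below evaluates the fraction $e^{-mu^2/2kT}$ for a few threshold velocities. The gas (nitrogen, taken as 28 atomic mass units), the temperature of 300 K, the height of 5 km, and the names are illustrative assumptions. The last lines check that the threshold velocity needed to climb a height $h$ gives back the density ratio of Eq. (40.1).

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
G = 9.81                  # acceleration due to gravity, m/s^2
AMU = 1.66053906660e-27   # atomic mass unit, kg

def fraction_faster_than(u, m, T):
    """Fraction of the molecules crossing a plane whose normal velocity exceeds u."""
    return math.exp(-m * u * u / (2 * K_B * T))

m = 28.0 * AMU   # assumed molecular mass (nitrogen)
T = 300.0        # assumed temperature, K

# Root-mean-square value of one velocity component, from (1/2) m <v_z^2> = (1/2) kT.
u_component_rms = math.sqrt(K_B * T / m)

for multiple in (0.0, 1.0, 2.0, 3.0):
    u = multiple * u_component_rms
    print(f"u = {u:7.1f} m/s: fraction = {fraction_faster_than(u, m, T):.4f}")

# Velocity just sufficient to climb to height h: (1/2) m u^2 = m g h.
h = 5000.0
u_needed = math.sqrt(2 * G * h)
print(fraction_faster_than(u_needed, m, T), math.exp(-m * G * h / (K_B * T)))
```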

Now we return to the question about the neglect of collisions: Why does it not make any difference? We could have pursued the same argument, not with a finite height $h$, but with an infinitesimal height $h$, which is so small that there would be no room for collisions between $0$ and $h$. But that was not necessary: the argument is evidently based on an analysis of the energies involved, the conservation of energy, and in the collisions that occur there is an exchange of energies among the molecules. However, we do not really care whether we follow the same molecule if energy is merely exchanged with another molecule. So it turns out that even if the problem is analyzed more carefully (and it is more difficult, naturally, to do a rigorous job), it still makes no difference in the result. (* Propagation of energy or propagation of velocity, it comes to the same thing. Equilibrium here means temperature, which is an equilibrium of kinetic energy, and a collision is simply a transfer of "motion" => simply exchange velocities)

It is interesting that the velocity distribution we have found is just \begin{equation} \label{Eq:I:40:4} n_{> u} \propto e^{-\text{kinetic energy}/kT}. \end{equation}

This way of describing the distribution of velocities, by giving the number of molecules that pass a given area with a certain minimum $z$-component, is not the most convenient way of giving the velocity distribution. For instance, inside the gas, one more often wants to know how many molecules are moving with a $z$-component of velocity between two given values, and that, of course, is not directly given by Eq. (40.4). We would like to state our result in the more conventional form, even though what we already have written is quite general. Note that it is not possible to say that any molecule has exactly some stated velocity; none of them has a velocity exactly equal to $1.7962899173$ meters per second. So in order to make a meaningful statement, we have to ask how many are to be found in some range of velocities. We have to say how many have velocities between $1.796$ and $1.797$, and so on. In mathematical terms, let $f(u)\,du$ be the fraction of all the molecules which have velocities between $u$ and $u + du$ or, what is the same thing (if $du$ is infinitesimal), all that have a velocity $u$ with a range $du$. Figure 40–5 shows a possible form for the function $f(u)$, and the shaded part, of width $du$ and mean height $f(u)$, represents this fraction $f(u)\,du$. That is, the ratio of the shaded area to the total area of the curve is the relative proportion of molecules with velocity $u$ within $du$. If we define $f(u)$ so that the fraction having a velocity in this range is given directly by the shaded area, then the total area must be $100$ percent of them, that is, \begin{equation} \label{Eq:I:40:5} \int_{-\infty}^\infty f(u)\,du = 1. \end{equation}

Now we have only to get this distribution by comparing it with the theorem we derived before. First we ask, what is the number of molecules passing through an area per second with a velocity greater than $u$, expressed in terms of $f(u)$? At first we might think it is merely the integral $\int_u^\infty f(u)\,du$, but it is not, because we want the number that are passing the area per second. The faster ones pass more often, so to speak, than the slower ones, and in order to express how many pass, you have to multiply by the velocity. (We discussed that in the previous chapter when we talked about the number of collisions.) In a given time $t$ the total number which pass through the surface is all of those which have been able to arrive at the surface, and the number which arrive come from a distance $ut$. So the number of molecules which arrive is not simply the number which are there, but the number that are there per unit volume, multiplied by the distance that they sweep through in racing for the area through which they are supposed to go, and that distance is proportional to $u$. Thus we need the integral of $u$ times $f(u)\,du$, an infinite integral with a lower limit $u$, and this must be the same as we found before, namely $e^{-mu^2/2kT}$, with a proportionality constant which we will get later: \begin{equation} \label{Eq:I:40:6} \int_u^\infty uf(u)\,du = \text{const}\cdot e^{-mu^2/2kT}. \end{equation}

Now if we differentiate the integral with respect to $u$, we get the thing that is inside the integral, i.e., the integrand (with a minus sign, since $u$ is the lower limit), and if we differentiate the other side, we get $u$ times the same exponential (and some constants). The $u$’s cancel and we find \begin{equation} \label{Eq:I:40:7} f(u)\,du = Ce^{-mu^2/2kT}\,du. \end{equation} We retain the $du$ on both sides as a reminder that it is a distribution, and it tells what the proportion is for velocity between $u$ and $u + du$.
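The connection between Eq. (40.6) and Eq. (40.7) can be checked by a small sampling experiment: draw velocities from the Gaussian $f(u)$, weight each by its velocity to represent the rate of crossing a plane, and compare the share of the flux above $u$ with $e^{-mu^2/2kT}$. The units (chosen so that $kT/m = 1$), the sample size, the seed, and the names are assumptions for illustration.

```python
import math
import random

def flux_fraction_above(u, samples):
    """Share of the upward flux carried by molecules with v_z greater than u.

    Each molecule contributes in proportion to its velocity, because the
    faster ones cross the plane more often.
    """
    upward = [v for v in samples if v > 0.0]
    return sum(v for v in upward if v > u) / sum(upward)

rng = random.Random(0)  # fixed seed so the run is repeatable
# Units where kT/m = 1, so that f(v_z) is proportional to exp(-v_z**2 / 2).
samples = [rng.gauss(0.0, 1.0) for _ in range(200_000)]

results = {u: (flux_fraction_above(u, samples), math.exp(-u * u / 2))
           for u in (0.5, 1.0, 2.0)}
for u, (estimated, expected) in results.items():
    print(f"u = {u}: sampled flux fraction = {estimated:.4f}, exp(-u^2/2) = {expected:.4f}")
```

Without the velocity weighting, the fraction of molecules above $u$ would instead follow the tail of the Gaussian itself, which is a different function; that is the distinction the argument above turns on.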

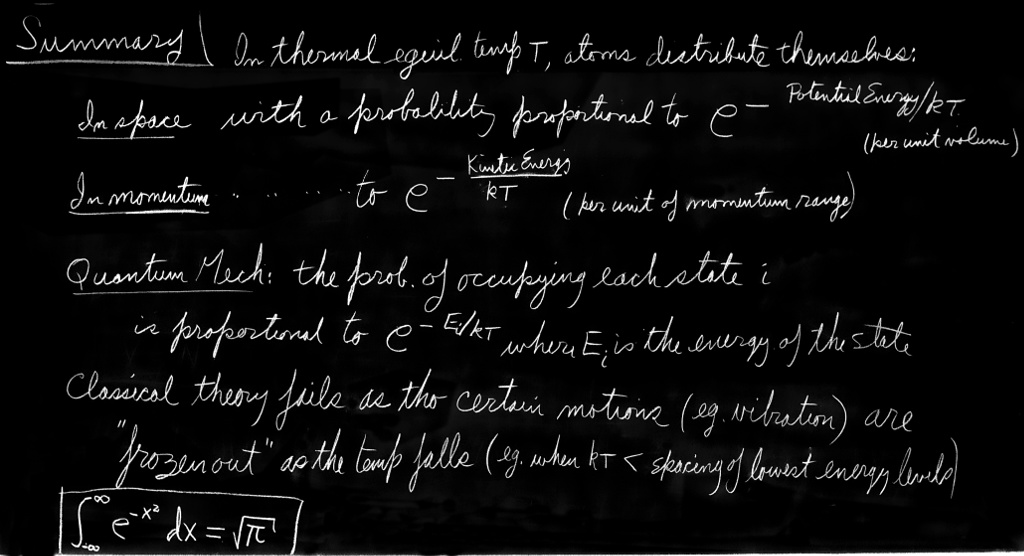

The constant $C$ must be so determined that the integral is unity, according to Eq. (40.5). Now we can prove that \begin{equation*} \int_{-\infty}^\infty e^{-x^2}\,dx = \sqrt{\pi}. \end{equation*} Using this fact, it is easy to find that $C = \sqrt{m/2\pi kT}$.
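A quick numerical confirmation of this normalization is sketched below, using a simple midpoint rule. The gas (nitrogen, taken as 28 atomic mass units), the temperature, the integration range of ten standard deviations, and the names are illustrative assumptions.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg

def normalization_constant(m, T):
    """C = sqrt(m / (2 pi k T)), the value quoted in the text."""
    return math.sqrt(m / (2 * math.pi * K_B * T))

def f(u, m, T):
    """Distribution of one velocity component, Eq. (40.7)."""
    return normalization_constant(m, T) * math.exp(-m * u * u / (2 * K_B * T))

def integrate_midpoint(func, a, b, steps=20_000):
    """Midpoint-rule estimate of the integral of func from a to b."""
    width = (b - a) / steps
    return width * sum(func(a + (i + 0.5) * width) for i in range(steps))

m = 28.0 * AMU   # assumed molecular mass (nitrogen)
T = 300.0        # assumed temperature, K
spread = math.sqrt(K_B * T / m)  # standard deviation of one velocity component

total = integrate_midpoint(lambda u: f(u, m, T), -10 * spread, 10 * spread)
print(f"integral of f(u) du = {total:.9f}")
```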

Since velocity and momentum are proportional, we may say that the distribution of momenta is also proportional to $e^{-\text{K.E.}/kT}$ per unit momentum range. It turns out that this theorem is true in relativity too, if it is in terms of momentum, while if it is in velocity it is not, so it is best to learn it in momentum instead of in velocity: \begin{equation} \label{Eq:I:40:8} f(p)\,dp = Ce^{-\text{K.E.}/kT}\,dp. \end{equation} So we find that the probabilities of different conditions of energy, kinetic and potential, are both given by $e^{-\text{energy}/kT}$, a very easy thing to remember and a rather beautiful proposition.

So far we have, of course, only the distribution of the velocities “vertically.” We might want to ask, what is the probability that a molecule is moving in another direction? Of course these distributions are connected, and one can obtain the complete distribution from the one we have, because the complete distribution depends only on the square of the magnitude of the velocity, not upon the $z$-component. It must be something that is independent of direction, and there is only one function involved, the probability of different magnitudes. We have the distribution of the $z$-component, and therefore we can get the distribution of the other components from it. The result is that the probability is still proportional to $e^{-\text{K.E.}/kT}$, but now the kinetic energy involves three parts, $mv_x^2/2$, $mv_y^2/2$, and $mv_z^2/2$, summed in the exponent. Or we can write it as a product: \begin{equation} \begin{aligned} f(v_x,v_y,v_z)&\;dv_x dv_y dv_z\\[.5ex] &\kern{-3.5em}\propto e^{-mv_x^2/2kT}\!\!\cdot e^{-mv_y^2/2kT}\!\!\cdot e^{-mv_z^2/2kT}\,dv_x dv_y dv_z. \end{aligned} \label{Eq:I:40:9} \end{equation} You can see that this formula must be right because, first, it is a function only of $v^2$, as required, and second, the probabilities of various values of $v_z$ obtained by integrating over all $v_x$ and $v_y$ is just (40.7). But this one function (40.9) can do both those things!
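Because Eq. (40.9) is a product of three independent factors, one can sample it by drawing each component separately. The sketch below does so and checks two consequences stated earlier in the chapter: each direction carries an average kinetic energy of $\tfrac{1}{2}kT$, and the total is $\tfrac{3}{2}kT$. The units ($kT = 1$, $m = 1$), the sample size, the seed, and the names are assumptions.

```python
import random

KT = 1.0   # units where kT = 1 (assumption)
M = 1.0    # units where the molecular mass is 1 (assumption)
SIGMA_V = (KT / M) ** 0.5  # standard deviation of each velocity component

rng = random.Random(1)  # fixed seed so the run is repeatable

def sample_velocity():
    """Draw (v_x, v_y, v_z) from the product form of Eq. (40.9)."""
    return tuple(rng.gauss(0.0, SIGMA_V) for _ in range(3))

velocities = [sample_velocity() for _ in range(100_000)]
count = len(velocities)

mean_ke = sum(0.5 * M * (vx * vx + vy * vy + vz * vz)
              for vx, vy, vz in velocities) / count
mean_ke_per_axis = [sum(0.5 * M * v[axis] ** 2 for v in velocities) / count
                    for axis in range(3)]

print(f"mean kinetic energy / kT = {mean_ke / KT:.3f}")
print("per axis:", [round(value / KT, 3) for value in mean_ke_per_axis])
```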

40–5 The specific heats of gases

Now we shall look at some ways to test the theory, and to see how successful is the classical theory of gases. We saw earlier that if $U$ is the internal energy of $N$ molecules, then $PV =$ $NkT =$ $(\gamma - 1)U$ holds, sometimes, for some gases, maybe. If it is a monatomic gas, we know this is also equal to $\tfrac{2}{3}$ of the kinetic energy of the center-of-mass motion of the atoms. If it is a monatomic gas, then the kinetic energy is equal to the internal energy, and therefore $\gamma - 1 = \tfrac{2}{3}$. But suppose it is, say, a more complicated molecule, that can spin and vibrate, and let us suppose (it turns out to be true according to classical mechanics) that the energies of the internal motions are also proportional to $kT$. Then at a given temperature, in addition to kinetic energy $\tfrac{3}{2}kT$, it has internal vibrational and rotational energies. So the total $U$ includes not just the kinetic energy, but also the rotational and vibrational energies, and we get a different value of $\gamma$. Technically, the best way to measure $\gamma$ is by measuring the specific heat, which is the change in energy with temperature. We will return to that approach later. For our present purposes, we may suppose $\gamma$ is found experimentally from the $PV^\gamma$ curve for adiabatic compression.

Let us make a calculation of $\gamma$ for some cases. First, for a monatomic gas $U$ is the total energy, the same as the kinetic energy, and we know already that $\gamma$ should be $\tfrac{5}{3}$. For a diatomic gas, we may take, as an example, oxygen, hydrogen iodide, hydrogen, etc., and suppose that the diatomic gas can be represented as two atoms held together by some kind of force like the one of Fig. 40–3. We may also suppose, and it turns out to be quite true, that at the temperatures that are of interest for the diatomic gas, the pairs of atoms tend strongly to be separated by $r_0$, the distance of potential minimum. If this were not true, if the probability were not strongly varying enough to make the great majority sit near the bottom, we would have to remember that oxygen gas is a mixture of O$_2$ and single oxygen atoms in a nontrivial ratio. We know that there are, in fact, very few single oxygen atoms, which means that the potential energy minimum is very much greater in magnitude than $kT$, as we have seen. Since they are clustered strongly around $r_0$, the only part of the curve that is needed is the part near the minimum, which may be approximated by a parabola. A parabolic potential implies a harmonic oscillator, and in fact, to an excellent approximation, the oxygen molecule can be represented as two atoms connected by a spring.

Now what is the total energy of this molecule at temperature $T$? We know that for each of the two atoms, each of the kinetic energies should be $\tfrac{3}{2}kT$, so the kinetic energy of both of them is $\tfrac{3}{2}kT + \tfrac{3}{2}kT$. We can also put this in a different way: the same $\tfrac{3}{2}$ plus $\tfrac{3}{2}$ can also be looked at as kinetic energy of the center of mass ($\tfrac{3}{2}$), kinetic energy of rotation ($\tfrac{2}{2}$), and kinetic energy of vibration ($\tfrac{1}{2}$). We know that the kinetic energy of vibration is $\tfrac{1}{2}$, since there is just one dimension involved and each degree of freedom has $\tfrac{1}{2}kT$. Regarding the rotation, it can turn about either of two axes, so there are two independent motions. We assume that the atoms are some kind of points, and cannot spin about the line joining them; this is something to bear in mind, because if we get a disagreement, maybe that is where the trouble is. But we have one more thing, which is the potential energy of vibration; how much is that? In a harmonic oscillator the average kinetic energy and average potential energy are equal, and therefore the potential energy of vibration is $\tfrac{1}{2}kT$, also. The grand total of energy is $U = \tfrac{7}{2}kT$, or $kT$ is $\tfrac{2}{7}U$ per molecule. That means, then, that $\gamma$ is $\tfrac{9}{7}$ instead of $\tfrac{5}{3}$, i.e., $\gamma = 1.286$.
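The bookkeeping above can be written out in a few lines. The sketch uses exact fractions and the relation $NkT = (\gamma - 1)U$ quoted earlier; the function and variable names are hypothetical.

```python
from fractions import Fraction

def gamma_from_energy(energy_per_molecule_over_kT):
    """gamma from NkT = (gamma - 1) U, where U/N is given in units of kT."""
    return 1 + 1 / Fraction(energy_per_molecule_over_kT)

# Monatomic gas: center-of-mass kinetic energy only.
monatomic = Fraction(3, 2)

# Classical diatomic molecule, following the counting in the text.
diatomic = (Fraction(3, 2)    # kinetic energy of the center of mass
            + Fraction(2, 2)  # rotation about two axes
            + Fraction(1, 2)  # kinetic energy of vibration
            + Fraction(1, 2)) # potential energy of vibration

print("monatomic:", gamma_from_energy(monatomic))
print("diatomic: ", gamma_from_energy(diatomic), "=", round(float(gamma_from_energy(diatomic)), 3))
```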

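2023.1.30: Traces of the effort to fit the experimental results

The kinetic energies of the two atoms add up to $3kT$... and after saying that this can be expressed as the energy of moving as a whole (center of mass) plus the finer-grained rotational and vibrational energies, suddenly there is a potential energy of vibration?

This can only be seen as showing how classical physics developed its reasoning in order to fit the experimental results, and it does not fit well.

The answer lies in geometric structure => the geometric structure of color

1. Note dated 2021.7.1

2. 1.31 addendum: a diatomic gas particle, as a vibrating + fluctuating geometric quadric surface, collides with the surfaces of other particles

(1) They momentarily merge (connected sum), vibrational interference occurs, and as they disconnect the energy reservoirs (zero points) shift

(2) Equilibrium is reached when the vibration frequency and the number of zero points become equal. Equilibrium here refers to equilibrium between particles, not within a particle itself.

3. Specific heat is the amount of heat needed to raise the temperature by one degree, so it is a kind of daily wage, or the size of a vessel => relation to diffusion

The size of the vessel was estimated from $PV=(\gamma -1)U$, and to estimate it in finer detail an explanation in terms of vibration and rotation was attempted, but however it is done it does not match the numbers.

That is because structure, beyond mere motion, was not taken into account; the storage of energy was neglected. Storage ultimately means confinement, and that is structure... confined waves

* The number of degrees of freedom is the number of linearly independent paths along which free, unobstructed motion in all directions is possible at equilibrium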

We may compare these numbers with the relevant measured values shown in Table 40–1. Looking first at helium, which is a monatomic gas, we find very nearly $\tfrac{5}{3}$, and the error is probably experimental, although at such a low temperature there may be some forces between the atoms. Krypton and argon, both monatomic, agree also within the accuracy of the experiment.

| Gas | $T$ (°C) | $\gamma$ |
| --- | --- | --- |
| $\text{He}$ | $-180$ | $1.660$ |
| $\text{Kr}$ | $19$ | $1.68$ |
| $\text{Ar}$ | $15$ | $1.668$ |
| $\text{H}_2$ | $100$ | $1.404$ |
| $\text{O}_2$ | $100$ | $1.399$ |
| $\text{HI}$ | $100$ | $1.40$ |
| $\text{Br}_2$ | $300$ | $1.32$ |
| $\text{I}_2$ | $185$ | $1.30$ |
| $\text{NH}_3$ | $15$ | $1.310$ |
| $\text{C}_2\text{H}_6$ | $15$ | $1.22$ |

We turn to the diatomic gases and find hydrogen with $1.404$, which does not agree with the theory, $1.286$. Oxygen, $1.399$, is very similar, but again not in agreement. Hydrogen iodide again is similar at $1.40$. It begins to look as though the right answer is $1.40$, but it is not, because if we look further at bromine we see $1.32$, and at iodine we see $1.30$. Since $1.30$ is reasonably close to $1.286$, iodine may be said to agree rather well, but oxygen is far off. So here we have a dilemma. We have it right for one molecule, we do not have it right for another molecule, and we may need to be pretty ingenious in order to explain both.
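The size of the disagreement can be made concrete with a short Python sketch. It simply copies the diatomic entries of Table 40–1 and compares them with the classical prediction $\tfrac{9}{7}$ for a vibrating molecule; the names `measured_gamma` and `relative_deviation` are illustrative choices, not part of the text.

```python
# Measured gamma for the diatomic gases, copied from Table 40-1.
measured_gamma = {"H2": 1.404, "O2": 1.399, "HI": 1.40, "Br2": 1.32, "I2": 1.30}

# Classical prediction for a vibrating diatomic molecule, U = (7/2) kT.
GAMMA_VIBRATING = 9 / 7

def relative_deviation(gamma_measured, gamma_predicted=GAMMA_VIBRATING):
    """Fractional difference between a measured gamma and the prediction."""
    return (gamma_measured - gamma_predicted) / gamma_predicted

for gas, g in measured_gamma.items():
    print(f"{gas:>3}: measured {g:.3f}, deviation from 9/7 = {relative_deviation(g):+.1%}")
```

Under these assumptions iodine comes out within about one percent of $\tfrac{9}{7}$, while hydrogen and oxygen are off by roughly nine percent, which is the dilemma described above.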

* 2021.7.1 Looking at how things got to this point: on the strength of the empirical curve in Fig. 40–3, the argument was pushed through on the grounds that it is 'approximated by a parabola. A parabolic potential implies a harmonic oscillator, and in fact, to an excellent approximation, the oxygen molecule can be represented as two atoms connected by a spring', yet it does not agree well with experiment (that is, with reality).

Here I propose a topological energy (a nonconservative energy): the energy carried by the 2-dimensional topology that diatomic molecules form.

Let us look further at a still more complicated molecule with large numbers of parts, for example, C$_2$H$_6$, which is ethane. It has eight different atoms, and they are all vibrating and rotating in various combinations, so the total amount of internal energy must be an enormous number of $kT$’s, at least $12kT$ for kinetic energy alone, and $\gamma - 1$ must be very close to zero, or $\gamma$ almost exactly $1$. In fact, it is lower, but $1.22$ is not so much lower, and is higher than the $1\tfrac{1}{12}$ calculated from the kinetic energy alone, and it is just not understandable!
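The ethane estimate can be written out explicitly. This is a sketch that assumes the relation $\gamma - 1 = kT/U$ (from $PV = (\gamma - 1)U$ and $PV = NkT$, with $U$ taken per molecule) and counts only the kinetic energy of the eight atoms; the symbol $U_{\text{kin}}$ is introduced here purely for illustration: \begin{equation*} U_{\text{kin}} = 8\times\tfrac{3}{2}kT = 12kT, \qquad \gamma = 1 + \frac{kT}{U_{\text{kin}}} = 1 + \tfrac{1}{12} \approx 1.083. \end{equation*} Turning the relation around, the measured $\gamma = 1.22$ would correspond to \begin{equation*} U = \frac{kT}{\gamma - 1} = \frac{kT}{0.22} \approx 4.5\,kT \end{equation*} per molecule, far below the $12kT$ that the classical counting requires for the kinetic energy alone.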

Furthermore, the whole mystery is deep, because the diatomic molecule cannot be made rigid by a limit. Even if we made the couplings stiffer indefinitely, although it might not vibrate much, it would nevertheless keep vibrating. The vibrational energy inside is still $kT$, since it does not depend on the strength of the coupling. But if we could imagine absolute rigidity, stopping all vibration to eliminate a variable, then we would get $U = \tfrac{5}{2}kT$ and $\gamma = 1.40$ for the diatomic case. This looks good for H$_2$ or O$_2$. On the other hand, we would still have problems, because $\gamma$ for either hydrogen or oxygen varies with temperature! From the measured values shown in Fig. 40–6, we see that for H$_2$, $\gamma$ varies from about $1.6$ at $-185^\circ$C to $1.3$ at $2000^\circ$C. The variation is more substantial in the case of hydrogen than for oxygen, but nevertheless, even in oxygen, $\gamma$ tends definitely to go up as we go down in temperature.

40–6 The failure of classical physics

So, all in all, we might say that we have some difficulty. We might try some force law other than a spring, but it turns out that anything else will only make $U$ higher. If we include more forms of energy, $\gamma$ approaches unity more closely, contradicting the facts. All the classical theoretical things that one can think of will only make it worse. The fact is that there are electrons in each atom, and we know from their spectra that there are internal motions; each of the electrons should have at least $\tfrac{1}{2}kT$ of kinetic energy, and something for the potential energy, so when these are added in, $\gamma$ gets still smaller. It is ridiculous. It is wrong.

The first great paper on the dynamical theory of gases was by Maxwell in 1859. On the basis of ideas we have been discussing, he was able accurately to explain a great many known relations, such as Boyle’s law, the diffusion theory, the viscosity of gases, and things we shall talk about in the next chapter. He listed all these great successes in a final summary, and at the end he said, “Finally, by establishing a necessary relation between the motions of translation and rotation (he is talking about the $\tfrac{1}{2}kT$ theorem) of all particles not spherical, we proved that a system of such particles could not possibly satisfy the known relation between the two specific heats.” He is referring to $\gamma$ (which we shall see later is related to two ways of measuring specific heat), and he says we know we cannot get the right answer.

Ten years later, in a lecture, he said, “I have now put before you what I consider to be the greatest difficulty yet encountered by the molecular theory.” These words represent the first discovery that the laws of classical physics were wrong. This was the first indication that there was something fundamentally impossible, because a rigorously proved theorem did not agree with experiment. About 1905, Sir James Hopwood Jeans and Lord Rayleigh (John William Strutt) were to talk about this puzzle again. One often hears it said that physicists at the latter part of the nineteenth century thought they knew all the significant physical laws and that all they had to do was to calculate more decimal places. Someone may have said that once, and others copied it. But a thorough reading of the literature of the time shows they were all worrying about something. Jeans said about this puzzle that it is a very mysterious phenomenon, and it seems as though as the temperature falls, certain kinds of motions “freeze out.”

If we could assume that the vibrational motion, say, did not exist at low temperature and did exist at high temperature, then we could imagine that a gas might exist at a temperature sufficiently low that vibrational motion does not occur, so $\gamma = 1.40$, or at a higher temperature at which it begins to come in, so $\gamma$ falls. The same might be argued for the rotation. If we can eliminate the rotation, say it “freezes out” at sufficiently low temperature, then we can understand the fact that the $\gamma$ of hydrogen approaches $1.66$ as we go down in temperature. How can we understand such a phenomenon? Of course, the fact that these motions “freeze out” cannot be understood by classical mechanics. It was only understood when quantum mechanics was discovered.

Without proof, we may state the results for statistical mechanics of the quantum-mechanical theory. We recall that according to quantum mechanics, a system which is bound by a potential, for the vibrations, for example, will have a discrete set of energy levels, i.e., states of different energy. Now the question is: how is statistical mechanics to be modified according to quantum-mechanical theory? It turns out, interestingly enough, that although most problems are more difficult in quantum mechanics than in classical mechanics, problems in statistical mechanics are much easier in quantum theory! The simple result we have in classical mechanics, that $n = n_0e^{-\text{energy}/kT}$, becomes the following very important theorem: If the energies of the set of molecular states are called, say, $E_0$, $E_1$, $E_2$, …, $E_i$, …, then in thermal equilibrium the probability of finding a molecule in the particular state of having energy $E_i$ is proportional to $e^{-E_i/kT}$. That gives the probability of being in various states. In other words, the relative chance, the probability, of being in state $E_1$ relative to the chance of being in state $E_0$, is \begin{equation} \label{Eq:I:40:10} \frac{P_1}{P_0} = \frac{e^{-E_1/kT}}{e^{-E_0/kT}}, \end{equation} which, of course, is the same as \begin{equation} \label{Eq:I:40:11} n_1 = n_0e^{-(E_1 - E_0)/kT}, \end{equation} since $P_1 = n_1/N$ and $P_0 = n_0/N$. So it is less likely to be in a higher energy state than in a lower one. The ratio of the number of atoms in the upper state to the number in the lower state is $e$ raised to the power (minus the energy difference, over $kT$)—a very simple proposition.
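The theorem can be tried out numerically with a small Python sketch. Energies are measured in units of $kT$, and the function names `population_ratio` and `state_probabilities` are hypothetical helpers chosen for this illustration.

```python
import math

def population_ratio(gap_over_kT):
    """n1/n0 = exp(-(E1 - E0)/kT), with the energy gap given in units of kT."""
    return math.exp(-gap_over_kT)

def state_probabilities(energies_over_kT):
    """Normalized probabilities proportional to exp(-E_i/kT) for a list of states."""
    weights = [math.exp(-e) for e in energies_over_kT]
    total = sum(weights)
    return [w / total for w in weights]

# Three example states with energies 0, 1 and 2 in units of kT (assumed values).
probs = state_probabilities([0.0, 1.0, 2.0])
print(probs)

# The ratio of two probabilities depends only on the energy difference.
print(probs[1] / probs[0], population_ratio(1.0))
```

The normalization drops out of any ratio, which is why Eq. (40.11) needs only the energy difference $E_1 - E_0$.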

Now it turns out that for a harmonic oscillator the energy levels are evenly spaced. Calling the lowest energy $E_0 = 0$ (it actually is not zero, it is a little different, but it does not matter if we shift all energies by a constant), the first one is then $E_1 = \hbar\omega$, and the second one is $2\hbar\omega$, and the third one is $3\hbar\omega$, and so on.

Now let us see what happens. We suppose we are studying the vibrations of a diatomic molecule, which we approximate as a harmonic oscillator. Let us ask what is the relative chance of finding a molecule in state $E_1$ instead of in state $E_0$. The answer is that the chance of finding it in state $E_1$, relative to that of finding it in state $E_0$, goes down as $e^{-\hbar\omega/kT}$. Now suppose that $kT$ is much less than $\hbar\omega$, and we have a low-temperature circumstance. Then the probability of its being in state $E_1$ is extremely small. Practically all the atoms are in state $E_0$. If we change the temperature but still keep it very small, then the chance of its being in state $E_1 = \hbar\omega$ remains infinitesimal—the energy of the oscillator remains nearly zero; it does not change with temperature so long as the temperature is much less than $\hbar\omega$. All oscillators are in the bottom state, and their motion is effectively “frozen”; there is no contribution of it to the specific heat. We can judge, then, from Table 40–1, that at $100^\circ$C, which is $373$ degrees absolute, $kT$ is much less than the vibrational energy in the oxygen or hydrogen molecules, but not so in the iodine molecule. The reason for the difference is that an iodine atom is very heavy, compared with hydrogen, and although the forces may be comparable in iodine and hydrogen, the iodine molecule is so heavy that the natural frequency of vibration is very low compared with the natural frequency of hydrogen.
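The "freezing" of the oscillator can be illustrated by summing over the evenly spaced levels directly. The sketch below assumes levels $E_n = n\hbar\omega$ with the lowest level set to zero, as in the text, and weights each by $e^{-E_n/kT}$; the truncation at `n_levels` and the function name are assumptions made only for this example.

```python
import math

def mean_oscillator_energy(kT_over_hw, n_levels=2000):
    """Average energy, in units of hbar*omega, of an oscillator in thermal equilibrium.

    Levels are E_n = n * hbar*omega (ground level shifted to zero), each weighted
    by exp(-E_n / kT). The sum is truncated at n_levels, an illustrative cutoff.
    """
    x = 1.0 / kT_over_hw  # hbar*omega / kT
    weights = [math.exp(-n * x) for n in range(n_levels)]
    total = sum(weights)
    return sum(n * w for n, w in enumerate(weights)) / total

for kT_over_hw in (0.1, 0.5, 1.0, 10.0):
    energy = mean_oscillator_energy(kT_over_hw)
    print(f"kT/hw = {kT_over_hw:5.1f}: <E>/hw = {energy:.5f}, <E>/kT = {energy / kT_over_hw:.5f}")
```

With $kT$ much less than $\hbar\omega$ the average energy is practically zero and barely changes with temperature, so the vibration adds nothing to the specific heat; with $kT$ much greater than $\hbar\omega$ the average energy comes close to the classical $kT$ (kinetic plus potential).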

* The quantum-mechanical explanation is somehow unconvincing, except for the part 'so long as the temperature is much less than $\hbar\omega$'.

With $\hbar\omega$ higher than $kT$ at room temperature for hydrogen, but lower for iodine, only the latter, iodine, exhibits the classical vibrational energy. As we increase the temperature of a gas, starting from a very low value of $T$, with the molecules almost all in their lowest state, they gradually begin to have an appreciable probability to be in the second state, and then in the next state, and so on. When the probability is appreciable for many states, the behavior of the gas approaches that given by classical physics, because the quantized states become nearly indistinguishable from a continuum of energies, and the system can have almost any energy. Thus, as the temperature rises, we should again get the results of classical physics, as indeed seems to be the case in Fig. 40–6. It is possible to show in the same way that the rotational states of atoms are also quantized, but the states are so much closer together that in ordinary circumstances $kT$ is bigger than the spacing. Then many levels are excited, and the rotational kinetic energy in the system participates in the classical way. The one example where this is not quite true at room temperature is for hydrogen. This is the first time that we have really deduced, by comparison with experiment, that there was something wrong with classical physics.

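2023.2.2: Summary

The heat needed to raise the temperature by one degree was measured, and the theory was checked by adding up the heat theoretically assigned to rotation, vibration, and other motions inside the particle, with the result that

1. no matter how it is modified, it does not fit... and the bigger worry is that

2. as the temperature approaches absolute zero, almost no heat is consumed, that is, rotation and vibration all stop. So,

3. to explain this, the discreteness of energy was added to the particle-distribution formula obtained from the old physics. At least it explains why the energy does not rise at low temperature.

* Energy is not continuous because a structure must be in place for the motion to play in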

- To get the value of the integral, let \begin{equation*} I = \int_{-\infty}^\infty e^{-x^2}\,dx. \end{equation*} Then \begin{align*} I^2 &= \int_{-\infty}^\infty e^{-x^2}\,dx\cdot \int_{-\infty}^\infty e^{-y^2}\,dy\\[1.5ex] &= \int_{-\infty}^\infty\int_{-\infty}^\infty e^{-(x^2+y^2)}\,dy\,dx, \end{align*} which is a double integral over the whole $xy$-plane. But this can also be written in polar coordinates as \begin{align*} I^2 &= \int_0^\infty e^{-r^2}\cdot 2\pi r\,dr\\[1.5ex] &= \pi\int_0^\infty e^{-t}\,dt = \pi. \end{align*}