41 The Brownian Movement

41–1 Equipartition of energy

The Brownian movement was discovered in 1827 by Robert Brown, a botanist. While he was studying microscopic life, he noticed little particles of plant pollens jiggling around in the liquid he was looking at in the microscope, and he was wise enough to realize that these were not living, but were just little pieces of dirt moving around in the water. In fact he helped to demonstrate that this had nothing to do with life by getting from the ground an old piece of quartz in which there was some water trapped. It must have been trapped for millions and millions of years, but inside he could see the same motion. What one sees is that very tiny particles are jiggling all the time.

This was later proved to be one of the effects of molecular motion, and we can understand it qualitatively by thinking of a great push ball on a playing field, seen from a great distance, with a lot of people underneath, all pushing the ball in various directions. We cannot see the people because we imagine that we are too far away, but we can see the ball, and we notice that it moves around rather irregularly.

We also know, from the theorems that we have discussed in previous chapters, that the mean kinetic energy of a small particle suspended in a liquid or a gas will be $\tfrac{3}{2}kT$ even though it is very heavy compared with a molecule. If it is very heavy, that means that the speeds are relatively slow, but it turns out, actually, that the speed is not really so slow. In fact, we cannot see the speed of such a particle very easily because although the mean kinetic energy is $\tfrac{3}{2}kT$, which represents a speed of a millimeter or so per second for an object a micron or two in diameter, this is very hard to see even in a microscope, because the particle continuously reverses its direction and does not get anywhere. How far it does get we will discuss at the end of the present chapter. This problem was first solved by Einstein at the beginning of the 20th century.

Incidentally, when we say that the mean kinetic energy of this particle is $\tfrac{3}{2}kT$, we claim to have derived this result from the kinetic theory, that is, from Newton’s laws. We shall find that we can derive all kinds of things—marvelous things—from the kinetic theory, and it is most interesting that we can apparently get so much from so little. Of course we do not mean that Newton’s laws are “little”—they are enough to do it, really—what we mean is that we did not do very much. How do we get so much out? The answer is that we have been perpetually making a certain important assumption, which is that if a given system is in thermal equilibrium at some temperature, it will also be in thermal equilibrium with anything else at the same temperature. For instance, if we wanted to see how a particle would move if it was really colliding with water, we could imagine that there was a gas present, composed of another kind of particle, little fine pellets that (we suppose) do not interact with water, but only hit the particle with “hard” collisions. Suppose the particle has a prong sticking out of it; all our pellets have to do is hit the prong. We know all about this imaginary gas of pellets at temperature $T$—it is an ideal gas. Water is complicated, but an ideal gas is simple. Now, our particle has to be in equilibrium with the gas of pellets. Therefore, the mean motion of the particle must be what we get for gaseous collisions, because if it were not moving at the right speed relative to the water but, say, was moving faster, that would mean that the pellets would pick up energy from it and get hotter than the water. But we had started them at the same temperature, and we assume that if a thing is once in equilibrium, it stays in equilibrium—parts of it do not get hotter and other parts colder, spontaneously.
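As a rough check on the speed quoted earlier for a micron-sized particle, the sketch below computes the rms speed implied by a mean kinetic energy of $\tfrac{3}{2}kT$. The particle density (that of water) and the temperature of 300 K are assumptions chosen for illustration; neither value is given in the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def rms_speed(diameter_m, density_kg_m3=1000.0, temperature_k=300.0):
    """rms speed from (1/2) m <v^2> = (3/2) k T for a uniform sphere.

    density_kg_m3 and temperature_k are illustrative assumptions.
    """
    radius = diameter_m / 2.0
    mass = density_kg_m3 * (4.0 / 3.0) * math.pi * radius**3
    return math.sqrt(3.0 * K_B * temperature_k / mass)

for d in (1e-6, 2e-6):
    print(f"diameter {d * 1e6:.0f} micron: v_rms = {rms_speed(d) * 1e3:.1f} mm/s")
```

Under these assumptions the result is a few millimeters per second, the same order of magnitude as the figure quoted in the text.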

This proposition is true and can be proved from the laws of mechanics, but the proof is very complicated and can be established only by using advanced mechanics. It is much easier to prove in quantum mechanics than it is in classical mechanics. It was proved first by Boltzmann, but for now we simply take it to be true, and then we can argue that our particle has to have $\tfrac{3}{2}kT$ of energy if it is hit with artificial pellets, so it also must have $\tfrac{3}{2}kT$ when it is being hit with water at the same temperature and we take away the pellets; so it is $\tfrac{3}{2}kT$. It is a strange line of argument, but perfectly valid.

In addition to the motion of colloidal particles for which the Brownian movement was first discovered, there are a number of other phenomena, both in the laboratory and in other situations, where one can see Brownian movement. If we are trying to build the most delicate possible equipment, say a very small mirror on a thin quartz fiber for a very sensitive ballistic galvanometer (Fig. 41–1), the mirror does not stay put, but jiggles all the time—all the time—so that when we shine a light on it and look at the position of the spot, we do not have a perfect instrument because the mirror is always jiggling. Why? Because the average kinetic energy of rotation of this mirror has to be, on the average, $\tfrac{1}{2}kT$.

What is the mean-square angle over which the mirror will wobble? (* According to Surely You're Joking, Mr. Feynman!, when a food fight broke out in the student cafeteria he watched a plate flying through the air and worked out the relation between its wobble and its rotation period.) Suppose we find the natural vibration period of the mirror by tapping on one side and seeing how long it takes to oscillate back and forth, and we also know the moment of inertia, $I$. We know the formula for the kinetic energy of rotation—it is given by Eq. (19.8): $T = \tfrac{1}{2}I\omega^2$. That is the kinetic energy, and the potential energy that goes with it will be proportional to the square of the angle—it is $V = \tfrac{1}{2}\alpha\theta^2$. But, if we know the period $t_0$ and calculate from that the natural frequency $\omega_0 = 2\pi/t_0$, then the potential energy is $V = \tfrac{1}{2}I\omega_0^2\theta^2$.
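The question about the mean-square angle can be answered with equipartition. The step below is a sketch that assumes, as for any harmonic oscillator in thermal equilibrium, that the mean potential energy equals the mean kinetic energy $\tfrac{1}{2}kT$: \begin{equation} \tfrac{1}{2}I\omega_0^2\avg{\theta^2} = \tfrac{1}{2}kT \quad\Longrightarrow\quad \avg{\theta^2} = \frac{kT}{I\omega_0^2}. \end{equation} On this reading, a mirror with a smaller $I\omega_0^2$ (a lighter mirror or a softer fiber) wobbles through a larger angle.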

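* 2021.7.19: I keep forgetting this, so back to basics.

The pendulum equation is $l\frac{d^2\theta}{dt^2}=-g\sin{\theta}$. As with a spring, why does it oscillate? Because some force pulls it back toward its original position.

The simplest case is $F=-kx$, where the force is proportional to the distance from the original position; that is harmonic oscillation. The potential energy stored there is the work done in pushing it out to that point...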

Since $V=-\int F\,dx=\frac12 kx^2$, summing the potential over the infinitesimal masses at each point gives the total potential $V=\sum_i V_i=\frac12\sum_i k_i(r_i\theta)^2$.

The solution of $F=-k_ix \Rightarrow m_i\frac{d^2x}{dt^2}=-k_ix$ is $x=a\cos(\omega_0 t+\Delta)$, so $k_i=m_i\omega_0^2$.

$\Rightarrow V=\frac12\sum_i k_i r_i^2\theta^2=\frac12\sum_i m_i\omega_0^2 r_i^2\theta^2= \frac12 \theta^2 \omega_0^2\sum_i m_i r_i^2=\frac12 \theta^2 \omega_0^2 I$, where $I=\sum_i m_i r_i^2= \int r^2\,dm$

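~ A thought: a field formed on a 2-dimensional surface is a structure, and the zeros at its vortices are energy reservoirs; that is, free electrons are trapped in those vortices. When a trapped one receives energy from outside and pops out, is that a positron, or $\hbar\omega$? Think about the rifling of a gun barrel, a whirlwind, water going down a drain, and so on.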

2023.3.16: half of the natural-frequency energy

When $k=m\omega_0^2$, $\frac{1}{2}k\theta^2=\frac{1}{2}m\omega_0^2 \theta^2$; for a sum of masses, $\frac{1}{2} \sum_i m_i r_i^2\omega_0^2\theta^2=\frac{1}{2}I\omega_0^2\theta^2$.

2023.2.1: ratchet and pawl, magnetization

The same thing works, amazingly enough, in electrical circuits. Suppose that we are building a very sensitive, accurate amplifier for a definite frequency and have a resonant circuit (Fig. 41–2) in the input so as to make it very sensitive to this certain frequency, like a radio receiver, but a really good one. Suppose we wish to go down to the very lowest limit of things, so we take the voltage, say off the inductance, and send it into the rest of the amplifier. Of course, in any circuit like this, there is a certain amount of loss. It is not a perfect resonant circuit, but it is a very good one and there is a little resistance, say (we put the resistor in so we can see it, but it is supposed to be small). Now we would like to find out: How much does the voltage across the inductance fluctuate? Answer: We know that $\tfrac{1}{2}LI^2$ is the “kinetic energy” —the energy associated with a coil in a resonant circuit (Chapter 25 & see Induction). Therefore the mean value of $\tfrac{1}{2}LI^2$ is equal to $\tfrac{1}{2}kT$—that tells us what the rms current is and we can find out what the rms voltage is from the rms current. For if we want the voltage across the inductance the formula is $\hat{V}_L = i\omega L\hat{I}$, and the mean absolute square voltage on the inductance is $\avg{V_L^2} = L^2\omega_0^2\avg{I^2}$, and putting in $\tfrac{1}{2}L\avg{I^2} = \tfrac{1}{2}kT$, we obtain \begin{equation} \label{Eq:I:41:2} \avg{V_L^2} = L\omega_0^2 kT. \end{equation} So now we can design circuits and tell when we are going to get what is called Johnson noise, the noise associated with thermal fluctuations!
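To get a feel for the size of the effect, the following sketch evaluates Eq. (41.2) numerically. The inductance, resonant frequency, and temperature are hypothetical values picked only for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def rms_inductor_voltage(inductance_h, resonant_freq_hz, temperature_k=300.0):
    """rms thermal voltage across L from <V_L^2> = L * omega_0^2 * k * T."""
    omega_0 = 2.0 * math.pi * resonant_freq_hz
    return math.sqrt(inductance_h * omega_0**2 * K_B * temperature_k)

# Hypothetical component values, chosen only for illustration.
v_rms = rms_inductor_voltage(inductance_h=1e-3, resonant_freq_hz=1e6)
print(f"V_rms across the inductance: {v_rms * 1e6:.1f} microvolts")
```

For these assumed values the fluctuation is of the order of ten microvolts, which is why the effect matters only for very sensitive amplifiers.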

Where do the fluctuations come from this time? They come again from the resistor—they come from the fact that the electrons in the resistor are jiggling around because they are in thermal equilibrium with the matter in the resistor, and they make fluctuations in the density of electrons. They thus make tiny electric fields which drive the resonant circuit.

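2023.3.27: A thought: this resembles what happens in human society.

1. Moving as a lump or structure (an organization), as described by $m, L$, is different from moving as individuals.

2. Their kinetics are equal, so the equilibrium state is maintained.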

Electrical engineers represent the answer in another way. Physically, the resistor is effectively the source of noise. However, we may replace the real circuit having an honest, true physical resistor which is making noise, by an artificial circuit which contains a little generator that is going to represent the noise, and now the resistor is otherwise ideal—no noise comes from it. All the noise is in the artificial generator. And so if we knew the characteristics of the noise generated by a resistor, if we had the formula for that, then we could calculate what the circuit is going to do in response to that noise. So, we need a formula for the noise fluctuations. Now the noise that is generated by the resistor is at all frequencies, since the resistor by itself is not resonant. Of course the resonant circuit only “listens” to the part that is near the right frequency,

2023.5.29: "listen" means that it takes effect in the integration, just as Eq. 41.2 comes out when a Dirac delta function is applied.

but the resistor has many different frequencies in it. We may describe how strong the generator is, as follows: The mean power that the resistor would absorb if it were connected directly across the noise generator would be $\avg{E^2}/R$, if $E$ were the voltage from the generator.

2023.3.31: In equilibrium, $\frac{1}{2}L\avg{I^2} = \frac{1}{2}kT \Rightarrow \avg{I^2} = \frac{kT}{L}$.

Power in the resonant circuit: $\avg{P} = R \avg{I^2}=\frac{R}{L}kT=\gamma kT$ (from the table).

41–2 Thermal equilibrium of radiation

Now we go on to consider a still more advanced and interesting proposition that is as follows. Suppose we have a charged oscillator like those we were talking about when we were discussing light, let us say an electron oscillating up and down in an atom. If it oscillates up and down, it radiates light. Now suppose that this oscillator is in a very thin gas of other atoms, and that from time to time the atoms collide with it. Then in equilibrium, after a long time, this oscillator will pick up energy such that its kinetic energy of oscillation is $\tfrac{1}{2}kT$, and since it is a harmonic oscillator, its entire energy will become $kT$. That is, of course, a wrong description so far, because the oscillator carries electric charge, and if it has an energy $kT$ it is shaking up and down and radiating light. Therefore it is impossible to have equilibrium of real matter alone without the charges in it emitting light.

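* I keep making the same mistake...

1. Do not overinterpret; take the words literally. 2. If something still seems odd after that, only then think about the implication.

Feynman's point is that, without charges that emit light, matter cannot reach equilibrium.

2021.8.22: When a charge oscillates, a dynamic electromagnetic field is produced, and that radiated electromagnetic field carries energy.

A static electric field, in contrast, cannot propagate energy, because the energy flow vector is $S=\epsilon_0 c^2 E\times B$, so $E$ static $\Rightarrow B=0, S=0$.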

On the other hand, if we enclose the whole thing in a box so that the light does not go away to infinity, then we can eventually get thermal equilibrium. We may either put the gas in a box where we can say that there are other radiators in the box walls sending light back or, to take a nicer example, we may suppose the box has mirror walls. It is easier to think about that case. Thus we assume that all the radiation that goes out from the oscillator keeps running around in the box. Then, of course, it is true that the oscillator starts to radiate, but pretty soon it can maintain its $kT$ of energy in spite of the fact that it is radiating, because it is being illuminated, we may say, by its own light reflected from the walls of the box. That is, after a while there is a great deal of light rushing around in the box, and although the oscillator is radiating some, the light comes back and returns some of the energy that was radiated.

We shall now determine how much light there must be in such a box at temperature $T$ in order that the shining of the light on this oscillator will generate just enough energy to account for the light it radiated.

Let the gas atoms be very few and far between, so that we have an ideal oscillator with no resistance except radiation resistance. Then we consider that at thermal equilibrium the oscillator is doing two things at the same time. First, it has a mean energy $kT$, and we calculate how much radiation it emits. Second, this radiation should be exactly the amount that would result because of the fact that the light shining on the oscillator is scattered. Since there is nowhere else the energy can go, this effective radiation is really just scattered light from the light that is in there.

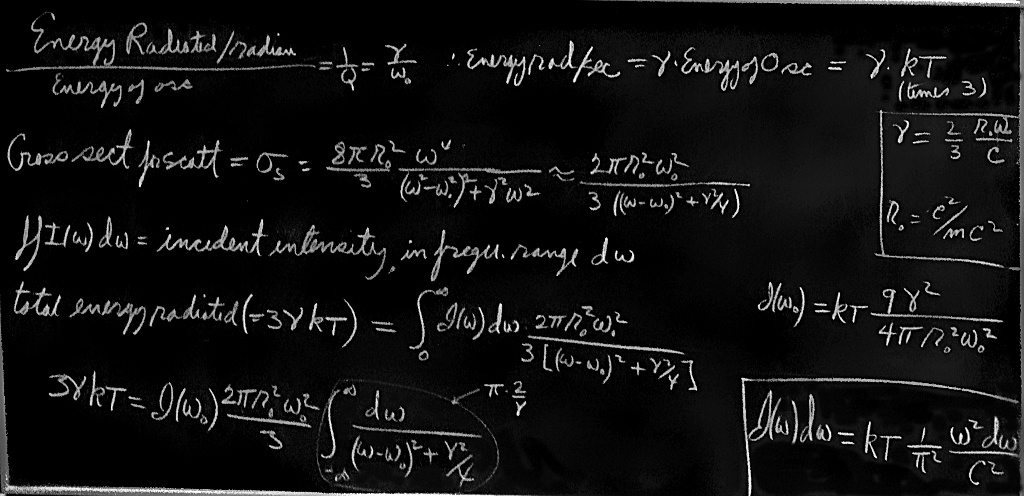

Thus we first calculate the energy that is radiated by the oscillator per second, if the oscillator has a certain energy. (We borrow from Chapter 32 on radiation resistance a number of equations without going back over their derivation.) The energy radiated per radian divided by the energy of the oscillator is called $1/Q$ (Eq. 32.8): $1/Q = (dW/dt)/\omega_0W$. Using the quantity $\gamma$, the damping constant, this can also be written as $1/Q = \gamma/\omega_0$, where $\omega_0$ is the natural frequency of the oscillator—if gamma is very small, $Q$ (definition) is very large. The energy radiated per second is then \begin{equation} \label{Eq:I:41:4} \ddt{W}{t} = \frac{\omega_0W}{Q} = \frac{\omega_0W\gamma}{\omega_0} = \gamma W. \end{equation} The energy radiated per second is thus simply gamma times the energy of the oscillator. Now the oscillator should have an average energy $kT$, so we see that gamma $kT$ is the average amount of energy radiated per second: \begin{equation} \label{Eq:I:41:5} \avg{dW/dt} = \gamma kT. \end{equation} Now we only have to know what gamma is. Gamma is easily found from Eq. (32.13). It is \begin{equation} \label{Eq:I:41:6} \gamma = \frac{\omega_0}{Q} = \frac{2}{3}\, \frac{r_0\omega_0^2}{c}, \end{equation} where $r_0 = e^2/mc^2$ is the classical electron radius, and we have set $\lambda = 2\pi c/\omega_0$.

Our final result for the average rate of radiation of light near the frequency $\omega_0$ is therefore \begin{equation} \label{Eq:I:41:7} \avg{dW/dt} = \frac{2}{3}\, \frac{r_0\omega_0^2kT}{c}. \end{equation}
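A quick numerical sketch of Eq. (41.6) shows how small the radiation damping is compared with the frequency itself. The 600 nm wavelength is an assumed example of visible light, not a value taken from the text.

```python
import math

C = 2.99792458e8     # speed of light, m/s
R_0 = 2.8179403e-15  # classical electron radius, m

def radiation_damping(omega_0):
    """gamma = (2/3) * r_0 * omega_0^2 / c, Eq. (41.6)."""
    return (2.0 / 3.0) * R_0 * omega_0**2 / C

wavelength = 600e-9  # assumed wavelength of visible light, m
omega_0 = 2.0 * math.pi * C / wavelength
gamma = radiation_damping(omega_0)
q_factor = omega_0 / gamma
print(f"gamma = {gamma:.2e} 1/s, Q = {q_factor:.2e}")
```

For this assumed wavelength $Q$ comes out within an order of magnitude of $10^8$, so the resonance is extremely narrow.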

Next we ask how much light must be shining on the oscillator. It must be enough that the energy absorbed from the light (and thereupon scattered) is just exactly this much. In other words, the emitted light is accounted for as scattered light from the light that is shining on the oscillator in the cavity. So we must now calculate how much light is scattered from the oscillator if there is a certain amount—unknown—of radiation incident on it. Let $I(\omega)\,d\omega$ be the amount of light energy there is at the frequency $\omega$, within a certain range $d\omega$ (because there is no light at exactly a certain frequency; it is spread all over the spectrum). So $I(\omega)$ is a certain spectral distribution which we are now going to find—it is the color of a furnace at temperature $T$ that we see when we open the door and look in the hole. Now how much light is absorbed? We worked out the amount of radiation absorbed from a given incident light beam, and we calculated it in terms of a cross section. It is just as though we said that all of the light that falls on a certain cross section is absorbed. So the total amount that is re-radiated (scattered) is the incident intensity $I(\omega)\,d\omega$ multiplied by the cross section $\sigma$.

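2021.10.16: the cross-section concept => it also applies to liquefaction and evaporation

2023.6.21: On 2023.3.3&5&19 I overcomplicated this;

I was talking without understanding the cross section. It is a notion devised, in typical physicist fashion, to make things fit.

1. Some waves pass by without colliding with the electron, but scattering has been confirmed experimentally, even though the exact position cannot be known (* the quadric surface on which the electron moves).

2. Starting from the formula for the scattering that occurs when an electromagnetic wave hits an electron, one imagines the wave striking a fictitious area (* formed by the quadric surface); only the waves that reach that area are scattered in all directions.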

The formula for the cross section that we derived (Eq. 32.19) did not have the damping included. It is not hard to go through the derivation again and put in the resistance term, which we neglected. If we do that, and calculate the cross section the same way, we get \begin{equation} \label{Eq:I:41:8} \sigma_s = \frac{8\pi r_0^2}{3}\biggl( \frac{\omega^4}{(\omega^2 - \omega_0^2)^2 + \gamma^2\omega^2} \biggr). \end{equation}

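2021.9.1: First, sort out what is confusing. Looking at the definition of $\hat{F}$, one is tempted to think that $\hat{x}$ has a definition of the same form, with $\Delta$ in it, but that is a misconception! The ^ merely marks the quantity as a complex number.

Never mind the $x_0$ in $P=\frac{q^2\omega^4x_0^2}{12\pi\epsilon_0 c^3}$; focus on the more basic Eq. 32.5, $P=\frac{q^2a'^2}{6\pi\epsilon_0 c^3}$. Here $a'$ is the acceleration at the earlier time, but we compute without the prime.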

$x=\hat x e^{i\omega t} \Rightarrow a=-\omega^2 \frac{q_eE_0 e^{i(\omega t+\Delta)}}{m(\omega_0^2-\omega^2+i\gamma\omega)} \Rightarrow$ the real part of the complex $a$ is $-\frac{\omega ^2 q_e E_0}{m((\omega_0^2-\omega^2)^2+\gamma^2\omega^2)} (\cos(\omega t+\Delta)(\omega_0^2-\omega^2)+\sin(\omega t+\Delta)\gamma \omega)$. Let $K=\frac{\omega ^2 q_e E_0}{m((\omega_0^2-\omega^2)^2+\gamma^2\omega^2)}$.

Then the acceleration is $a = - K ((\omega_0^2-\omega^2)\cos(\omega t+\Delta)+\gamma \omega\sin(\omega t+\Delta))$, and $\avg{a^2} = K^2\avg{((\omega_0^2-\omega^2)^2\cos^2(\omega t+\Delta)+\gamma^2 \omega^2\sin^2(\omega t+\Delta)+ \text{constant}\cdot\sin2(\omega t+\Delta) )}$.

The averages of $\cos^2$ and $\sin^2$ are $\frac12$ and the average of $\sin$ is $0$, so

$\avg{a^2}=K^2\frac12 ((\omega_0^2-\omega^2)^2+\gamma^2 \omega^2) =\frac{\omega^4 q_e^2 E_0^2}{2m^2((\omega_0^2-\omega^2)^2+\gamma^2 \omega^2)}$

$P=\frac{\omega^4 q_e^4 E_0^2}{12m^2\pi\epsilon_0 c^3((\omega_0^2-\omega^2)^2+\gamma^2 \omega^2)}=(\frac12 \epsilon_0 c E_0^2)\frac{8\pi r_0^2}{3}\frac{\omega^4}{(\omega_0^2-\omega^2)^2+\gamma^2\omega^2}$, where $r_0=\frac{q_e^2}{4\pi \epsilon_0 m_e c^2}$ (see 32.7, 32.11)

Now, as a function of frequency, $\sigma_s$ is of significant size only for $\omega$ very near to the natural frequency $\omega_0$. (Remember that the $Q$ for a radiating oscillator is about $10^8$.) The oscillator scatters very strongly when $\omega$ is equal to $\omega_0$, and very weakly for other values of $\omega$. Therefore we can replace $\omega$ by $\omega_0$ and $\omega^2 - \omega_0^2$ by $2\omega_0(\omega - \omega_0)$ (* $\omega^2 - \omega_0^2=(\omega + \omega_0)(\omega - \omega_0)\approx 2\omega_0(\omega - \omega_0)$; replacing $\omega$ by $\omega_0$ inside the sum $\omega + \omega_0$ makes no noticeable difference), and we get \begin{equation} \label{Eq:I:41:9} \sigma_s = \frac{2\pi r_0^2\omega_0^2} {3[(\omega - \omega_0)^2 + \gamma^2/4]}. \end{equation} Now the whole curve is localized near $\omega = \omega_0$. (We do not really have to make any approximations, but it is much easier to do the integrals if we simplify the equation a bit.) Now we multiply the intensity in a given frequency range by the cross section of scattering, to get the amount of energy scattered in the range $d\omega$. The total energy scattered is then the integral of this for all $\omega$. Thus \begin{equation} \begin{aligned} \ddt{W_s}{t} &= \int_0^\infty I(\omega)\sigma_s(\omega)\,d\omega\\[1ex] &= \int_0^\infty\frac{2\pi r_0^2\omega_0^2I(\omega)\,d\omega} {3[(\omega - \omega_0)^2 + \gamma^2/4]}. \end{aligned} \label{Eq:I:41:10} \end{equation}
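The quality of the near-resonance approximation can be checked numerically. The sketch below compares Eq. (41.8) with Eq. (41.9) in illustrative units ($\omega_0 = 1$, $r_0 = 1$) and with an assumed $\gamma = 10^{-3}$, which is far larger than the damping of a real radiating oscillator and therefore a pessimistic test.

```python
import math

def sigma_exact(omega, omega_0, gamma, r_0=1.0):
    """Scattering cross section with damping, Eq. (41.8)."""
    denom = (omega**2 - omega_0**2) ** 2 + gamma**2 * omega**2
    return (8.0 * math.pi * r_0**2 / 3.0) * omega**4 / denom

def sigma_near_resonance(omega, omega_0, gamma, r_0=1.0):
    """Lorentzian approximation valid near omega_0, Eq. (41.9)."""
    denom = 3.0 * ((omega - omega_0) ** 2 + gamma**2 / 4.0)
    return 2.0 * math.pi * r_0**2 * omega_0**2 / denom

omega_0, gamma = 1.0, 1e-3  # illustrative units; a real optical Q is far larger
for detuning in (0.0, 1.0, 5.0):  # detuning in units of gamma
    omega = omega_0 + detuning * gamma
    ratio = sigma_exact(omega, omega_0, gamma) / sigma_near_resonance(omega, omega_0, gamma)
    print(f"detuning = {detuning:.0f} gamma: exact/approx = {ratio:.4f}")
```

The two expressions coincide at resonance and differ by only a percent or two a few widths away; with a smaller $\gamma$ the agreement over the width of the peak is closer still.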

Now we set $dW_s/dt = 3\gamma kT$. Why three? Because when we made our analysis of the cross section in Chapter 32, we assumed that the polarization was such that the light could drive the oscillator. If we had used an oscillator which could move only in one direction, and the light, say, was polarized in the wrong way, it would not give any scattering. So we must either average the cross section of an oscillator which can go only in one direction, over all directions of incidence and polarization of the light or, more easily, we can imagine an oscillator which will follow the field no matter which way the field is pointing. Such an oscillator, which can oscillate equally in three directions, would have $3kT$ average energy because there are $3$ degrees of freedom in that oscillator. So we should use $3\gamma kT$ because of the $3$ degrees of freedom.

Now we have to do the integral. Let us suppose that the unknown spectral distribution $I(\omega)$ of the light is a smooth curve and does not vary very much across the very narrow frequency region where $\sigma_s$ is peaked (Fig. 41–3). Then the only significant contribution comes when $\omega$ is very close to $\omega_0$, within an amount gamma, which is very small. So therefore, although $I(\omega)$ may be an unknown and complicated function, the only place where it is important is near $\omega = \omega_0$, and there we may replace the smooth curve by a flat one—a “constant”—at the same height. In other words, we simply take $I(\omega)$ outside the integral sign and call it $I(\omega_0)$. We may also take the rest of the constants out in front of the integral, and what we have left is \begin{equation} \label{Eq:I:41:11} \tfrac{2}{3}\pi r_0^2\omega_0^2I(\omega_0) \int_0^\infty\frac{d\omega} {(\omega - \omega_0)^2 + \gamma^2/4} = 3\gamma kT. \end{equation} Now, the integral should go from $0$ to $\infty$, but $0$ is so far from $\omega_0$ that the curve is all finished by that time, so we go instead to minus $\infty$—it makes no difference and it is much easier to do the integral. The integral is an inverse tangent function of the form $\int dx/(x^2 + a^2)$. If we look it up in a book we see that it is equal to $\pi/a$. So what it comes to for our case is $2\pi/\gamma$. Therefore we get, with some rearranging, \begin{equation} \label{Eq:I:41:12} I(\omega_0) = \frac{9\gamma^2kT}{4\pi^2r_0^2\omega_0^2}. \end{equation} Then we substitute the formula (41.6) for gamma (do not worry about writing $\omega_0$; since it is true of any $\omega_0$, we may just call it $\omega$

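2023.5.23: So in the end, illuminating or shaking it at any other frequency does very little; only the natural frequency is effective. Photoelectrons, too, come out only at particular frequencies, and the same goes for enzymes.

A trick often used in mathematics: for any $\omega_0$, $I(\omega_0)=\dots$. 21.9.14: The catch, though, is that $\omega_0$ is related to the oscillator's mass and damping constant. It is like a snake biting its own tail => iteration, i.e. finding a fixed point)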
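The substitution can be carried through explicitly. What follows is a reconstruction from Eqs. (41.6), (41.11), and (41.12) as they appear above, offered as a check and not as a quotation of the original equation. The integral gives $\int_{-\infty}^{\infty} d\omega/[(\omega - \omega_0)^2 + \gamma^2/4] = 2\pi/\gamma$, which leads to (41.12). Squaring (41.6) gives $\gamma^2 = \tfrac{4}{9}\,r_0^2\omega^4/c^2$, and therefore \begin{equation} I(\omega) = \frac{9kT}{4\pi^2r_0^2\omega^2}\cdot \frac{4r_0^2\omega^4}{9c^2} = \frac{\omega^2kT}{\pi^2c^2}. \end{equation} The factors of $r_0$ cancel, so nothing specific to the oscillator survives in the result.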

Inside a closed box at temperature $T$, (41.13) is the distribution of energy of the radiation, according to classical theory. First, let us notice a remarkable feature of that expression. The charge of the oscillator, the mass of the oscillator, all properties specific to the oscillator, cancel out, because once we have reached equilibrium with one oscillator, we must be at equilibrium with any other oscillator of a different mass, or we will be in trouble. So this is an important kind of check on the proposition that equilibrium does not depend on what we are in equilibrium with, but only on the temperature.

2021.9.8: The formula above looks as though it came out naturally, but in fact the derivation was carried out with this result in mind.

Now let us draw a picture of the $I(\omega)$ curve (Fig. 41–4). It tells us how much light we have at different frequencies. The amount of intensity that there is in our box, per unit frequency range, goes, as we see, as the square of the frequency, which means that if we have a box at any temperature at all, and if we look at the x-rays that are coming out, there will be a lot of them!

Of course we know this is false. When we open the furnace and take a look at it, we do not burn our eyes out from x-rays at all. It is completely false. Furthermore, the total energy in the box, the total of all this intensity summed over all frequencies, would be the area under this infinite curve. Therefore, something is fundamentally, powerfully, and absolutely wrong.

Thus was the classical theory absolutely incapable of correctly describing the distribution of light from a blackbody, just as it was incapable of correctly describing the specific heats of gases. Physicists went back and forth over this derivation from many different points of view, and there is no escape. This is the prediction of classical physics. Equation (41.13) is called Rayleigh’s law, and it is the prediction of classical physics, and is obviously absurd.

41–3 Equipartition and the quantum oscillator

The difficulty above was another part of the continual problem of classical physics, which started with the difficulty of the specific heat of gases, and now has been focused on the distribution of light in a blackbody. Now, of course, at the time that theoreticians studied this thing, there were also many measurements of the actual curve. And it turned out that the correct curve looked like the dashed curves in Fig. 41–4. That is, the x-rays were not there. If we lower the temperature, the whole curve goes down in proportion to $T$, according to the classical theory, but the observed curve also cuts off sooner at a lower temperature. Thus the low-frequency end of the curve is right, but the high-frequency end is wrong. Why? When Sir James Jeans was worrying about the specific heats of gases, he noted that motions which have high frequency are “frozen out” as the temperature goes too low. That is, if the temperature is too low, if the frequency is too high, the oscillators do not have $kT$ of energy on the average. Now recall how our derivation of (41.13) worked: It all depends on the energy of an oscillator at thermal equilibrium. What the $kT$ of (41.5) was, and what the same $kT$ in (41.13) is, is the mean energy of a harmonic oscillator of frequency $\omega$ at temperature $T$. Classically, this is $kT$, but experimentally, no!—not when the temperature is too low or the oscillator frequency is too high. And so the reason that the curve falls off is the same reason that the specific heats of gases fail. It is easier to study the blackbody curve than it is the specific heats of gases, which are so complicated, therefore our attention is focused on determining the true blackbody curve, because this curve is a curve which correctly tells us, at every frequency, what the average energy of harmonic oscillators actually is as a function of temperature.

Planck studied this curve. He first determined the answer empirically (* just like Lorentz and Einstein), by fitting the observed curve with a nice function that fitted very well. Thus he had an empirical formula for the average energy of a harmonic oscillator as a function of frequency. In other words, he had the right formula instead of $kT$, and then by fiddling around he found a simple derivation for it which involved a very peculiar assumption. That assumption was that the harmonic oscillator can take up energies only $\hbar\omega$ at a time. The idea that they can have any energy at all is false. Of course, that was the beginning of the end of classical mechanics.

The very first correctly determined quantum-mechanical formula will now be derived. Suppose that the permitted energy levels of a harmonic oscillator were equally spaced at $\hbar\omega_0$ apart, so that the oscillator could take on only these different energies (Fig. 41–5). Planck made a somewhat more complicated argument than the one that is being given here, because that was the very beginning of quantum mechanics and he had to prove some things. But we are going to take it as a fact (which he demonstrated in this case) that the probability of occupying a level of energy $E$ is $P(E) = \alpha e^{-E/kT}$. If we go along with that, we will obtain the right result.

Suppose now that we have a lot of oscillators, and each is a vibrator of frequency $\omega$ (originally $\omega_0$). Some of these vibrators will be in the bottom quantum state, some will be in the next one, and so forth. What we would like to know is the average energy of all these oscillators. To find out, let us calculate the total energy of all the oscillators and divide by the number of oscillators. That will be the average energy per oscillator in thermal equilibrium, and will also be the energy that is in equilibrium with the blackbody radiation and that should go in Eq. (41.13) in place of $kT$. Thus we let $N_0$ be the number of oscillators that are in the ground state (the lowest energy state); $N_1$ the number of oscillators in the state $E_1$; $N_2$ the number that are in state $E_2$; and so on. According to the hypothesis (which we have not proved) that in quantum mechanics the law that replaced the probability $e^{-\text{P.E.}/kT}$ or $e^{-\text{K.E.}/kT}$ in classical mechanics is that the probability goes down as $e^{-\Delta E/kT}$, where $\Delta E$ is the excess energy,

21.9.24: the relation between a particle's position and its energy of activity: you get out as much as you put in

2023.2.9: intensity, cumulative

we shall assume that the number $N_1$ that are in the first state will be the number $N_0$ that are in the ground state, times $e^{-\hbar\omega/kT}$. Similarly, $N_2$, the number of oscillators in the second state, is $N_2 = N_0e^{-2\hbar\omega/kT}$. To simplify the algebra, let us call $e^{-\hbar\omega/kT} = x$. Then we simply have $N_1 = N_0x$, $N_2 = N_0x^2$, …, $N_n = N_0x^n$.

The total energy of all the oscillators must first be worked out. If an oscillator is in the ground state, there is no energy. If it is in the first state, the energy is $\hbar\omega$, and there are $N_1$ of them. So $N_1\hbar\omega$, or $\hbar\omega N_0x$ is how much energy we get from those. Those that are in the second state have $2\hbar\omega$, and there are $N_2$ of them, so $N_2\cdot 2\hbar\omega = 2\hbar\omega N_0x^2$ is how much energy we get, and so on. Then we add it all together to get $E_{\text{tot}} = N_0\hbar\omega(0 + x +2x^2 + 3x^3 + \dotsb)$.

And now, how many oscillators are there? Of course, $N_0$ is the number that are in the ground state, $N_1$ in the first state, and so on, and we add them together: $N_{\text{tot}} = N_0(1 + x + x^2 + x^3 + \dotsb)$. Thus the average energy is \begin{equation} \label{Eq:I:41:14} \avg{E} = \frac{E_{\text{tot}}}{N_{\text{tot}}} = \frac{N_0\hbar\omega(0 + x +2x^2 + 3x^3 + \dotsb)} {N_0(1 + x + x^2 + x^3 + \dotsb)}. \end{equation} Now the two sums which appear here we shall leave for the reader to play with and have some fun with. When we are all finished summing and substituting for $x$ in the sum, we should get—if we make no mistakes in the sum— \begin{equation} \label{Eq:I:41:15} \avg{E} = \frac{\hbar\omega}{e^{\hbar\omega/kT} - 1}. \end{equation} This, then, was the first quantum-mechanical formula ever known, or ever discussed, and it was the beautiful culmination of decades of puzzlement. Maxwell knew that there was something wrong, and the problem was, what was right? Here is the quantitative answer of what is right instead of $kT$. This expression should, of course, approach $kT$ as $\omega \to 0$ or as $T \to \infty$. See if you can prove that it does—learn how to do the mathematics.
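One way to carry out the two sums, sketched here for readers who want to compare their own work, uses the geometric series, which converges because $0 < x < 1$: \begin{equation} 1 + x + x^2 + \dotsb = \frac{1}{1 - x}, \qquad x + 2x^2 + 3x^3 + \dotsb = x\,\frac{d}{dx}\,\frac{1}{1 - x} = \frac{x}{(1 - x)^2}. \end{equation} Dividing the second sum by the first gives \begin{equation} \avg{E} = \hbar\omega\,\frac{x}{1 - x} = \frac{\hbar\omega}{x^{-1} - 1} = \frac{\hbar\omega}{e^{\hbar\omega/kT} - 1}. \end{equation} For $\hbar\omega \ll kT$ the exponential is approximately $1 + \hbar\omega/kT$, so the denominator is approximately $\hbar\omega/kT$ and $\avg{E} \approx kT$, the classical value.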

This is the famous cutoff factor that Jeans was looking for, and if we use it instead of $kT$ in (41.13), we obtain for the distribution of light in a black box \begin{equation} \label{Eq:I:41:16} I(\omega)\,d\omega = \frac{\hbar\omega^3\,d\omega} {\pi^2c^2(e^{\hbar\omega/kT} - 1)}. \end{equation} We see that for a large $\omega$, even though we have $\omega^3$ in the numerator, there is an $e$ raised to a tremendous power in the denominator, so the curve comes down again and does not “blow up”—we do not get ultraviolet light and x-rays where we do not expect them!
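The effect of the cutoff can be seen numerically. The sketch below compares Eq. (41.16) with the classical form obtained by putting $kT$ back in place of the quantum mean energy, that is, $\omega^2kT/\pi^2c^2$. The furnace temperature and the sample frequencies are assumed values for illustration.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 2.99792458e8        # speed of light, m/s

def classical_intensity(omega, temperature):
    """Classical spectrum: Eq. (41.16) with the mean energy replaced by kT."""
    return omega**2 * K_B * temperature / (math.pi**2 * C**2)

def planck_intensity(omega, temperature):
    """Eq. (41.16): hbar omega^3 / (pi^2 c^2 (exp(hbar omega / kT) - 1))."""
    x = HBAR * omega / (K_B * temperature)
    return HBAR * omega**3 / (math.pi**2 * C**2 * math.expm1(x))

T = 1500.0  # hypothetical furnace temperature, K
for omega in (1e12, 1e14, 1e15, 1e16):
    ratio = planck_intensity(omega, T) / classical_intensity(omega, T)
    print(f"omega = {omega:.0e} rad/s: Planck/classical = {ratio:.3e}")
```

At low frequency the ratio is close to one, and at high frequency it collapses toward zero, which is the behavior described in the text. The use of `math.expm1` keeps the low-frequency end accurate but would overflow for extremely large $\hbar\omega/kT$, well beyond the values sampled here.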

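2021.9.20: A thought I had yesterday or the day before:

Assuming a geometric model, a gain or loss of energy is a 2-dimensional surface attaching to or detaching from another 2-dimensional surface (connected sum, split). So the energy gained or lost = frequency (dynamism) $\times$ index.

9.24: Max Planck, who had constructed an empirical function, must have searched for a cutoff function that would fit it, much like the Lorentz transformation introduced to explain the Michelson–Morley experiment.

1. The reciprocal relationship between temperature $T$ and frequency $\omega$...

2. The relation between expressions of the type (41.15) above, the particle distributions, and the energy of their motion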

3. Compare $\lim_{n \rightarrow\infty}\sum_{i=0}^n e^{-\frac{x_i\hbar\omega}{kT}}$ with $\lim_{n \rightarrow\infty} \lim_{m \rightarrow\infty} \sum_{i=1}^m e^{-\frac{x_i\hbar\omega}{kT}}\Delta x$, where $\Delta x =\frac{n}{m}$ and $x_i=i\Delta x$. The difference between the two is that the first fixes $m=n$.

The second is $\int_0^\infty e^{-\frac{x\hbar\omega}{kT}}\, dx=\frac{kT}{\hbar\omega}$ ...①.

Likewise,

$\lim_{n \rightarrow\infty} \lim_{m \rightarrow\infty}\sum_{i=1}^m x_i\hbar\omega\, e^{-\frac{x_i\hbar\omega}{kT}}\Delta x= \int_0^\infty x\hbar\omega\, e^{-\frac{x\hbar\omega}{kT}}\, dx=\frac{(kT)^2}{\hbar\omega}$ ...②.

This is fascinating. If the energy is continuous, then $kT=$ ②/①, and as $\hbar \rightarrow 0$ the $\avg{E}$ of (41.15) $\rightarrow kT$, so everything is consistent.

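4. Considering 1, 2, and 3 above, Planck came to claim that energy is discrete.

Another example of a discovery based on a mathematical expression like this => the geometric structure of color

5. The constant $\sigma$, whose value is obtained by comparing the conclusions of kinetic theory and thermodynamics

2024.2.6: If we assume a model in which a particle of light is a 4-manifold with a toral action, the energy of light has to be discrete.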

One might complain that in our derivation of (41.16) we used the quantum theory for the energy levels of the harmonic oscillator, but the classical theory in determining the cross section $\sigma_s$. But the quantum theory of light interacting with a harmonic oscillator gives exactly the same result as that given by the classical theory. That, in fact, is why we were justified in spending so much time on our analysis of the index of refraction and the scattering of light, using a model of atoms like little oscillators—the quantum formulas are substantially the same.

Now let us return to the Johnson noise in a resistor. We have already remarked that the theory of this noise power is really the same theory as that of the classical blackbody distribution. In fact, rather amusingly, we have already said that if the resistance in a circuit were not a real resistance, but were an antenna (an antenna acts like a resistance because it radiates energy), a radiation resistance, it would be easy for us to calculate what the power would be. It would be just the power that runs into the antenna from the light that is all around, and we would get the same distribution, changed by only one or two factors. We can suppose that the resistor is a generator with an unknown power spectrum $P(\omega)$. The spectrum is determined by the fact that this same generator, connected to a resonant circuit of any frequency, as in Fig. 41–2(b), generates in the inductance a voltage of the magnitude given in Eq. (41.2). One is thus led to the same integral as in (41.10), and the same method works to give Eq. (41.3).

2023.4.15: Starting from the assumption of an oscillating voltage

1. In light of $\hat{V}_L = i\omega L\hat{I}$, take $V=V_0(\omega) e^{i\omega t}$ and $q=\frac{V_0 e^{i\omega t}}{L(\omega_0^2-\omega^2+i\gamma\omega)}$, so that
\begin{equation*} I(\omega, t)=\frac{dq}{dt} =\frac{i \omega V_0 e^{i\omega t}}{L(\omega_0^2-\omega^2+i\gamma\omega)} =\frac{\omega V_0}{L}\,\frac{-\sin{\omega t}+ i \cos{\omega t}}{\omega_0^2-\omega^2+i\gamma\omega}, \end{equation*}
whose real part is
\begin{equation*} \text{Re}\,I(\omega, t) = \frac{\omega V_0}{L}\, \frac{-(\omega_0^2-\omega^2)\sin{\omega t}+\gamma\omega \cos{\omega t}}{(\omega_0^2-\omega^2)^2+ \gamma^2\omega ^2}. \end{equation*}

2. From (41.2), with $b=\frac{2\pi}{\omega}$ and $l=(\omega_0^2-\omega^2)^2+ \gamma^2\omega ^2$,
\begin{align*}
\avg{V_L ^2} &= L \omega_0^2 kT = L^2 \omega_0 ^2 \avg{I^2} = L^2 \omega_0 ^2 \int_0^\infty \biggl(\frac1b \int_0^b [\text{Re}\,I(\omega, t)]^2\, dt\biggr)\, d\omega \\
&= L^2 \omega_0 ^2\, \frac{1}{L^2} \int_0^\infty \biggl(\frac1b \int_0^b V_0^2 \omega ^2\, \frac{(\omega_0^2-\omega^2)^2\sin^2{\omega t}-(\omega_0^2-\omega^2)\gamma\omega\sin{2\omega t} + \gamma^2\omega^2 \cos^2{\omega t}}{l^2}\,dt\biggr)\, d\omega \\
&= \omega_0^2 \int_0^\infty \frac{V_0^2\omega^2}{l^2}\, \frac{l}{2}\, d\omega = \frac{\omega_0^2}{2} \int_0^\infty \frac{V_0^2\omega^2}{(\omega_0^2-\omega^2)^2+ \gamma^2\omega ^2}\, d\omega,
\end{align*}
using $\int_0^b \sin^2{\omega t}\,dt= \int_0^b \cos^2{\omega t}\,dt=\frac{b}{2}$ and $\int_0^b \sin{2\omega t}\,dt=0$.

3. Since $P(\omega)=R\,\avg{[\text{Re}\,I(\omega)]^2}= R\, \frac1b \int_0^b [\text{Re}\,I(\omega, t)]^2\, dt$,
\begin{align*}
L^2 \omega_0 ^2 \avg{I^2} &= L^2 \omega_0 ^2 \int_0^\infty \frac{P(\omega)}{R}\, d\omega = L^2 \omega_0 ^2 \int_0^\infty \frac{P(\omega)\, l}{R\,l}\, d\omega \\
&\stackrel{*}{\approx} L^2 \omega_0 ^2 \int_0^\infty \frac{P(\omega_0)\, l(\omega_0)}{R\,(4(\omega-\omega_0)^2 \omega_0^2+\gamma^2 \omega_0^2)}\, d\omega = L^2 \omega_0 ^2\, \frac{P(\omega_0)\, l(\omega_0)}{R} \int_0^\infty \frac{1}{(4(\omega-\omega_0)^2+\gamma^2)\,\omega_0^2}\, d\omega \\
&= L^2 \omega_0 ^2\, \frac{P(\omega_0)\gamma^2} {4R} \int_0^\infty \frac{1}{(\omega-\omega_0)^2+\frac{\gamma^2}{4}}\, d\omega \quad (\Leftarrow\; l(\omega_0)=\gamma^2 \omega_0^2) \\
&\approx L^2 \omega_0 ^2\, \frac{P(\omega_0) \gamma^2} {4R}\, \frac{2\pi}{\gamma} = L \omega_0 ^2 P(\omega_0)\, \frac{\pi}{2} \quad \text{by } \gamma=\frac{R}{L}.
\end{align*}
Equating this with $L\omega_0^2 kT$, that is, $L\omega_0^2 kT= L \omega_0 ^2 P(\omega_0) \frac{\pi}{2}$, we get the desired (41.3).

* As is done here.
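The narrow-resonance replacement used in step 3 of the note can be tested numerically. The sketch below integrates $1/l(\omega)$, with $l = (\omega_0^2-\omega^2)^2+\gamma^2\omega^2$, by a simple midpoint rule and compares it with the value that the Lorentzian replacement and the integral $2\pi/\gamma$ give, namely $\pi/(2\gamma\omega_0^2)$. The upper cutoff, the number of grid points, and the function names are assumptions chosen for illustration.

```python
import math

def resonance_integral(omega0, gamma, upper=50.0, n=200000):
    """Midpoint-rule estimate of the integral of 1/l(omega) from 0 to `upper`,
    where l(omega) = (omega0^2 - omega^2)^2 + gamma^2 * omega^2.

    `upper` and `n` are assumed numerical settings; the integrand falls off
    like omega^-4, so the neglected tail is small for upper >> omega0.
    """
    h = upper / n
    total = 0.0
    for i in range(n):
        w = (i + 0.5) * h
        total += 1.0 / ((omega0**2 - w**2)**2 + gamma**2 * w**2)
    return total * h

def lorentzian_estimate(omega0, gamma):
    """Value from replacing l by omega0^2 * (4(omega - omega0)^2 + gamma^2)
    and using the integral of 1/((omega - omega0)^2 + gamma^2/4) = 2*pi/gamma."""
    return (1.0 / (4.0 * omega0**2)) * (2.0 * math.pi / gamma)

if __name__ == "__main__":
    print(resonance_integral(1.0, 0.05), lorentzian_estimate(1.0, 0.05))
```

For a sharp resonance such as $\gamma/\omega_0 = 0.05$ the two numbers agree closely, which supports treating $P(\omega)$ as constant across the resonance.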

2023.5.20: First, the symbol for the current is $j$ instead of $I$.

By the calculation of 4.15 above,

$\avg{j^2} = \frac{\omega^2 V_0^2}{2L^2}\, \frac{1}{(\omega_0^2-\omega^2)^2+ \gamma^2\omega ^2}$, and since $P(\omega)=R \avg{j^2}$ by the radiation resistance,

$P(\omega)=R\, \frac{\omega^2 V_0^2}{2L^2}\, \frac{1}{(\omega_0^2-\omega^2)^2+ \gamma^2\omega ^2}= R\, \frac{\omega^2 V_0^2}{2L^2\, l}$

And $P(\omega)= I(\omega) \sigma_s N$, where $N_1$ is the number of free electrons per unit length of wire and $N=N_1 l$ is the total => nonsense; the correct answer is below.

2023.6.18 - 7.27: A thought I had in the bathroom: just as in the radiation resistance calculation, we have only to calculate for each electron.

To emphasize once more: physical laws describe limiting situations. The situation assumed here is equilibrium, that is, the state to which the system converges a sufficiently long time after it starts.

In mathematical terms this is a fixed-point situation. Looked at closely, the process of finding $I$ in the black box is also an iteration!

1. Since the wire is one-dimensional, $P(\omega)=\frac13 I(\omega)\sigma_s$

2. Here $\gamma$ is not the $\frac{2}{3}\,\frac{r_0\omega_0^2}{c}$ of Eq. (41.6) but the $\frac{R}{L}$ of Table 23–1.

Therefore, substituting the $I$ of (41.12),

$P(\omega)=\frac13 I(\omega)\sigma_s= \frac13\, \frac{9\gamma^2kT}{4\pi^2r_0^2\omega_0^2} \times \frac{8\pi r_0^2}{3}\biggl( \frac{\omega^4}{(\omega^2 - \omega_0^2)^2 + \gamma^2\omega^2}\biggr) = \frac{2\gamma^2 kT}{\pi \omega_0^2} \biggl(\frac{\omega^4}{(\omega^2 - \omega_0^2)^2 + \gamma^2\omega^2}\biggr)$

As mentioned above, the calculations are in effect carried out at the natural frequency $\omega_0$, at the fixed point, that is, at equilibrium.

Substituting $\omega_0$ for $\omega$ gives the desired $\frac{2}{\pi} kT$, consistent with 32-13 and Fig. 23-2. Presumably $\Delta \omega$ and $Q$ were defined the way they were in order to keep things consistent.
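The last substitution is simple arithmetic, and it can be confirmed with a few lines of code. This is a small sketch of the note's expression for $P(\omega)/kT$; the function name and the sample values of $\omega_0$ and $\gamma$ are arbitrary assumptions.

```python
import math

def noise_power_over_kT(omega, omega0, gamma):
    """P(omega)/kT from the note's expression
    (2 gamma^2 / (pi omega0^2)) * omega^4 / ((omega^2 - omega0^2)^2 + gamma^2 omega^2).
    """
    lorentz = omega**4 / ((omega**2 - omega0**2)**2 + gamma**2 * omega**2)
    return 2.0 * gamma**2 / (math.pi * omega0**2) * lorentz

if __name__ == "__main__":
    # At omega = omega0 the result should not depend on omega0 or gamma.
    print(noise_power_over_kT(3.0, 3.0, 0.2), 2.0 / math.pi)
```

At $\omega = \omega_0$ the factors of $\gamma$ and $\omega_0$ cancel, leaving $2/\pi$ whatever sample values are used.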

All the things we have been talking about—the so-called Johnson noise and Planck’s distribution, and the correct theory of the Brownian movement which we are about to describe—are developments of the first decade or so of the 20th century. Now with those points and that history in mind, we return to the Brownian movement.

41–4 The random walk

Let us consider how the position of a jiggling particle should change with time, for very long times compared with the time between “kicks.” Consider a little Brownian movement particle which is jiggling about because it is bombarded on all sides by irregularly jiggling water molecules. Query: After a given length of time, how far away is it likely to be from where it began? This problem was solved by Einstein and Smoluchowski. If we imagine that we divide the time into little intervals, let us say a hundredth of a second or so, then after the first hundredth of a second it moves here, and in the next hundredth it moves some more, in the next hundredth of a second it moves somewhere else, and so on. In terms of the rate of bombardment, a hundredth of a second is a very long time. The reader may easily verify that the number of collisions a single molecule of water receives in a second is about $10^{14}$, so in a hundredth of a second it has $10^{12}$ collisions, which is a lot! Therefore, after a hundredth of a second it is not going to remember what happened before. In other words, the collisions are all random, so that one “step” is not related to the previous “step.” It is like the famous drunken sailor problem: the sailor comes out of the bar and takes a sequence of steps, but each step is chosen at an arbitrary angle, at random (Fig. 41–6). The question is: After a long time, where is the sailor? Of course we do not know! It is impossible to say. What do we mean—he is just somewhere more or less random. Well then, on the average, where is he? On the average, how far away from the bar has he gone? We have already answered this question, because once we were discussing the superposition of light from a whole lot of different sources at different phases, and that meant adding a lot of arrows at different angles (Chapter 30, 1 & 2). 
There we discovered that the mean square of the distance from one end to the other of the chain of random steps, which was the intensity of the light, is the sum of the intensities of the separate pieces. And so, by the same kind of mathematics, we can prove immediately that if $\FLPR_N$ is the vector distance from the origin after $N$ steps, the mean square of the distance from the origin is proportional to the number $N$ of steps. That is, $\avg{R_N^2} = NL^2$, where $L$ is the length of each step. Since the number of steps is proportional to the time in our present problem, the mean square distance is proportional to the time: \begin{equation} \label{Eq:I:41:17} \avg{R^2} = \alpha t. \end{equation} This does not mean that the mean distance is proportional to the time. If the mean distance were proportional to the time it would mean that the drifting is at a nice uniform velocity. The sailor is making some relatively sensible headway, but only such that his mean square distance is proportional to time. That is the characteristic of a random walk.

We may show very easily that in each successive step the square of the distance increases, on the average, by $L^2$. For if we write $\FLPR_N = \FLPR_{N - 1} + \FLPL$, we find that $\FLPR_N^2$ is \begin{equation*} \FLPR_N\!\cdot\!\FLPR_N = R_N^2 = R_{N - 1}^2 + 2\FLPR_{N - 1}\!\cdot\!\FLPL + L^2, \end{equation*} and averaging over many trials, we have $\avg{R_N^2} = \avg{R_{N - 1}^2} + L^2$, since $\avg{\FLPR_{N - 1}\cdot\FLPL} = 0$.
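The result $\avg{R_N^2} = NL^2$ is easy to try out with a small simulation of the drunken sailor. The following is a minimal sketch, assuming a walk in two dimensions with every step of the same length $L$ and a uniformly random direction; the number of trials, the seed, and the function name are illustrative choices.

```python
import math
import random

def mean_square_distance(n_steps, step_length=1.0, trials=4000, seed=0):
    """Average of R_N^2 over many independent 2D walks.

    Each step has length `step_length` and a direction drawn uniformly
    from [0, 2*pi), so successive steps are unrelated to one another.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        x = y = 0.0
        for _ in range(n_steps):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            x += step_length * math.cos(angle)
            y += step_length * math.sin(angle)
        total += x * x + y * y
    return total / trials

if __name__ == "__main__":
    for n in (10, 100, 400):
        print(n, mean_square_distance(n))
```

With a finite number of trials the averages scatter around $N L^2$ rather than matching it exactly; the scatter shrinks as the number of trials grows.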

2023.7.12: An exquisite mathematical description of the statement that one “step” is not related to the previous “step.”

2023.10.2: drag

Now we would like to calculate the coefficient $\alpha$ in Eq. (41.17), and to do so we must add a feature. We are going to suppose that if we were to put a force on this particle (having nothing to do with the Brownian movement—we are taking a side issue for the moment), then it would react in the following way against the force. First, there would be inertia. Let $m$ be the coefficient of inertia, the effective mass of the object (not necessarily the same as the real mass of the real particle, because the water has to move around the particle if we pull on it). Thus if we talk about motion in one direction, there is a term like $m(d^2x/dt^2)$ on one side. And next, we want also to assume that if we kept a steady pull on the object, there would be a drag on it from the fluid, proportional to its velocity. Besides the inertia of the fluid, there is a resistance to flow due to the viscosity and the complexity of the fluid. It is absolutely essential that there be some irreversible losses, something like resistance, in order that there be fluctuations. There is no way to produce the $kT$ unless there are also losses. The source of the fluctuations is very closely related to these losses. What the mechanism of this drag is, we will discuss soon—we shall talk about forces that are proportional to the velocity and where they come from.

2023.8.31: Drag, hindrance, obstruction, resistance is

collisions with the surrounding masses, or interference from other waves

Now let us use the same formula in the case where the force is not external, but is equal to the irregular forces of the Brownian movement. We shall then try to determine the mean square distance that the object goes. Instead of taking the distances in three dimensions, let us take just one dimension, and find the mean of $x^2$, just to prepare ourselves. (Obviously the mean of $x^2$ is the same as the mean of $y^2$ is the same as the mean of $z^2$, and therefore the mean square of the distance is just $3$ times what we are going to calculate.) The $x$-component of the irregular forces is, of course, just as irregular as any other component. What is the rate of change of $x^2$? It is $d(x^2)/dt = 2x(dx/dt)$, so what we have to find is the average of the position times the velocity. We shall show that this is a constant, and that therefore the mean square radius will increase proportionally to the time, and at what rate. Now if we multiply Eq. (41.19) by $x$, $mx(d^2x/dt^2) + \mu x(dx/dt) = xF_x$. We want the time average of $x(dx/dt)$, so let us take the average of the whole equation, and study the three terms. Now what about $x$ times the force? If the particle happens to have gone a certain distance $x$, then, since the irregular force is completely irregular and does not know where the particle started from, the next impulse can be in any direction relative to $x$. If $x$ is positive, there is no reason why the average force should also be in that direction. It is just as likely to be one way as the other. The bombardment forces are not driving it in a definite direction. So the average value of $x$ times $F$ is zero. On the other hand, for the term $mx(d^2x/dt^2)$ we will have to be a little fancy, and write this as \begin{equation*} mx\,\frac{d^2x}{dt^2} = m\,\frac{d[x(dx/dt)]}{dt} - m\biggl(\ddt{x}{t}\biggr)^2. \end{equation*} Thus we put in these two terms and take the average of both. So let us see how much the first term should be. 
Now $x$ times the velocity has a mean that does not change with time, because when it gets to some position it has no remembrance of where it was before, so things are no longer changing with time. So this quantity, on the average, is zero.
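The argument can be illustrated by integrating the equation of motion numerically for many particles. The sketch below uses the form implied by the text, $m(d^2x/dt^2) + \mu(dx/dt) = F_x$, and makes one loud assumption that the passage does not state: the irregular force is modeled as uncorrelated Gaussian kicks whose impulse per time step has variance $2\mu kT\,dt$, the strength that keeps $\avg{mv^2} = kT$. With that assumption the mean of $x^2$ should grow at the rate $2kT/\mu$ once the time is long compared with $m/\mu$. All parameter values and names are illustrative.

```python
import math
import random

def mean_square_displacement(n_particles=2000, n_steps=1000, dt=0.02,
                             m=1.0, mu=1.0, kT=1.0, seed=1):
    """Integrate m dv/dt = -mu*v + F(t) for many particles (Euler-Maruyama).

    Returns (<x^2> at half the total time, <x^2> at the total time, total time).
    """
    rng = random.Random(seed)
    # ASSUMPTION: random impulse per step is Gaussian with variance 2*mu*kT*dt.
    kick = math.sqrt(2.0 * mu * kT * dt)
    half = n_steps // 2
    sum_half = 0.0
    sum_full = 0.0
    for _ in range(n_particles):
        x = 0.0
        v = 0.0
        for step in range(1, n_steps + 1):
            # Drag removes momentum; the random kick adds it back irregularly.
            v += (-mu * v * dt + kick * rng.gauss(0.0, 1.0)) / m
            x += v * dt
            if step == half:
                sum_half += x * x
        sum_full += x * x
    return sum_half / n_particles, sum_full / n_particles, n_steps * dt
```

Calling `mean_square_displacement()` and dividing the increase in $\avg{x^2}$ between the half time and the final time by the elapsed time gives an estimate of the growth rate, to be compared with $2kT/\mu$ (equal to $2$ for the default values). The estimate carries statistical scatter of several percent at this ensemble size.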

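21.10.4: On the vanishing of $\avg{xF_x}$ and of $d\avg{x\,dx/dt}/dt$

A mathematical explanation is easier here, at least for me. For each $x$, the force is haphazard and random, so its sum over many trials is $\sum F_x=0$, and therefore $\avg{xF_x}=0$ for all $x$. For $x\frac{dx}{dt}$, what vanishes is the time derivative of the mean, because the mean itself no longer changes with time.

10.8: Something that occurred to me while running => the mean kinetic energy

21.9.29 & 10.10: What if the walk has a direction? $R_N=cR_{N-1}+L$, where $R_i$, unlike in the text, is a vector with the target as the origin, and $c$ is a constant. It is a central force, $v_n=v_{n-1}+a$ (acceleration), although the deviation is rather haphazard. The particle is drawn toward some point and, depending on how much it wanders, it may get closer or farther away, something like that.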
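The directed walk $R_N = cR_{N-1} + L$ of the last note can be explored with the same averaging argument as in the text. Assuming, as there, that each new step is uncorrelated with the current position, so that $\avg{\FLPR_{N-1}\cdot\FLPL} = 0$, the mean square obeys $\avg{R_N^2} = c^2\avg{R_{N-1}^2} + L^2$. The sketch below simply iterates this recurrence; the function name and starting values are illustrative assumptions.

```python
def mean_square_with_pull(c, step_length=1.0, r0_squared=0.0, n_steps=200):
    """Iterate <R_N^2> = c^2 <R_{N-1}^2> + L^2 and return the whole history.

    ASSUMPTION: steps are uncorrelated with the current position, so the
    cross term 2c <R_{N-1} . L> averages to zero, as in the text's argument.
    """
    r2 = r0_squared
    history = [r2]
    for _ in range(n_steps):
        r2 = c * c * r2 + step_length**2
        history.append(r2)
    return history

if __name__ == "__main__":
    print(mean_square_with_pull(1.0)[100])    # ordinary random walk: N * L^2
    print(mean_square_with_pull(0.9)[-1])     # pulled walk: settles down
    print(1.0 / (1.0 - 0.9**2))               # fixed point L^2 / (1 - c^2)
```

For $c = 1$ the recurrence reproduces $NL^2$. For $|c| < 1$ the mean square distance from the target settles at the fixed point $L^2/(1-c^2)$, so the walker neither reaches the target nor escapes, which matches the note's picture of wandering while being drawn toward a point.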