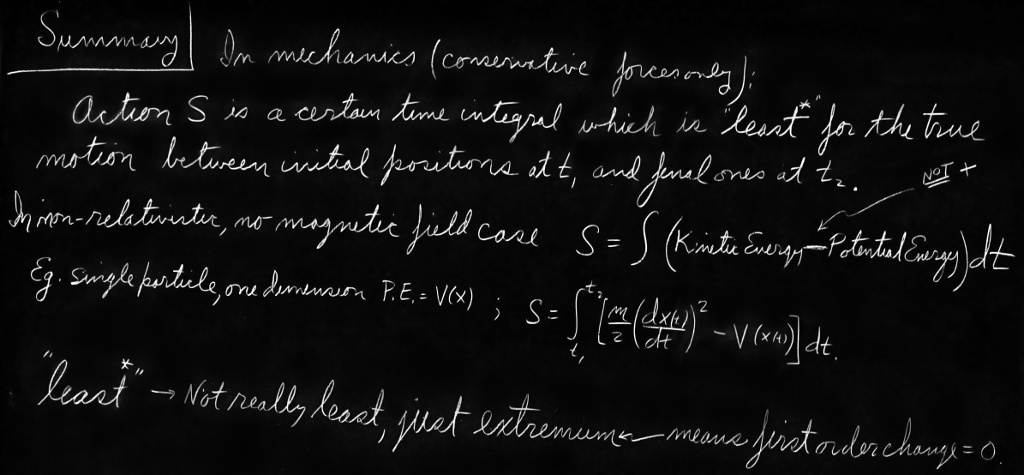

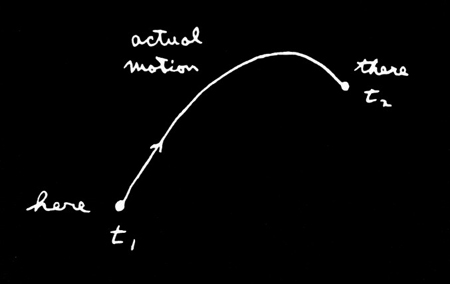

19 The Principle of Least Action


19–1 A special lecture: almost verbatim

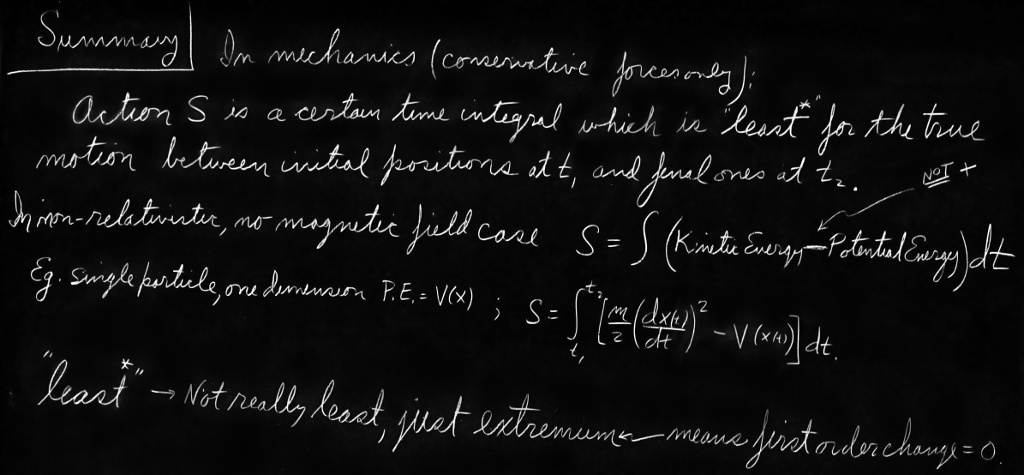

“When I was in high school, my physics teacher—whose name was Mr. Bader—called me down one day after physics class and said, ‘You look bored; I want to tell you something interesting.’ Then he told me something which I found absolutely fascinating, and have, since then, always found fascinating. Every time the subject comes up, I work on it. In fact, when I began to prepare this lecture I found myself making more analyses on the thing. Instead of worrying about the lecture, I got involved in a new problem. The subject is this—the principle of least action.

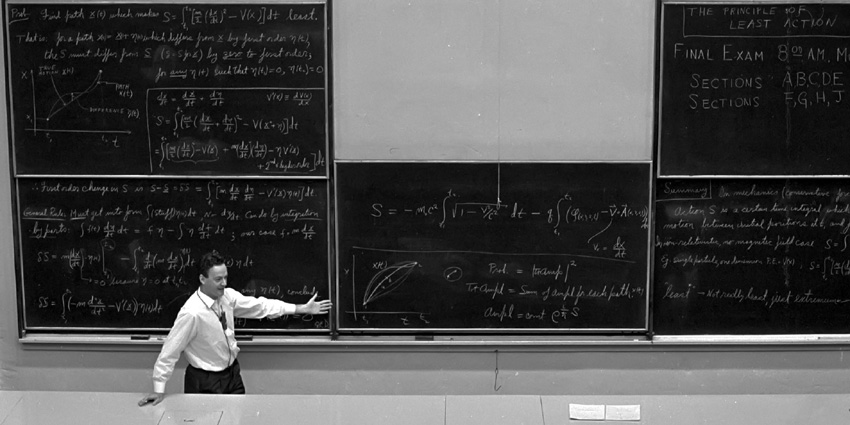

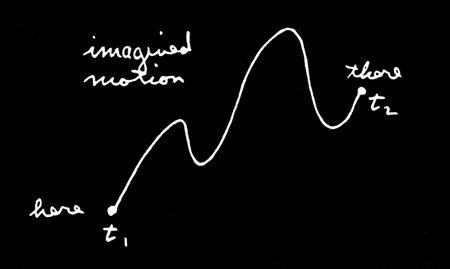

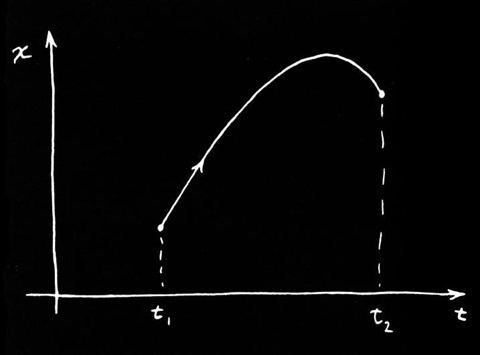

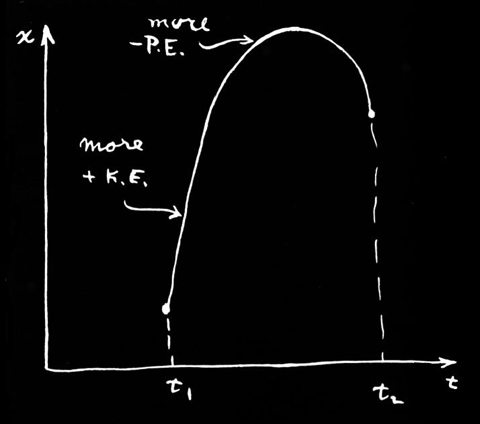

“Mr. Bader told me the following: Suppose you have a particle (in a gravitational field, for instance) which starts somewhere and moves to some other point by free motion—you throw it, and it goes up and comes down (Fig. 19–1). It goes from the original place to the final place in a certain amount of time. Now, you try a different motion. Suppose that to get from here to there, it went as shown in Fig. 19–2 but got there in just the same amount of time. Then he said this: If you calculate the kinetic energy at every moment on the path, take away the potential energy, and integrate it over the time during the whole path, you’ll find that the number you’ll get is bigger than that for the actual motion.

“In other words, the laws of Newton could be stated not in the form $F=ma$ but in the form: the average kinetic energy less the average potential energy is as little as possible for the path of an object going from one point to another.

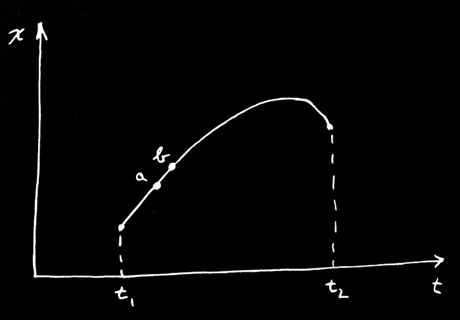

“Let me illustrate a little bit better what it means. If you take the case of the gravitational field, then if the particle has the path $x(t)$ (let’s just take one dimension for a moment; we take a trajectory that goes up and down and not sideways), where $x$ is the height above the ground, the kinetic energy is $\tfrac{1}{2}m\,(dx/dt)^2$, and the potential energy at any time is $mgx$. Now I take the kinetic energy minus the potential energy at every moment along the path and integrate that with respect to time from the initial time to the final time. Let’s suppose that at the original time $t_1$ we started at some height and at the end of the time $t_2$ we are definitely ending at some other place (Fig. 19–3).

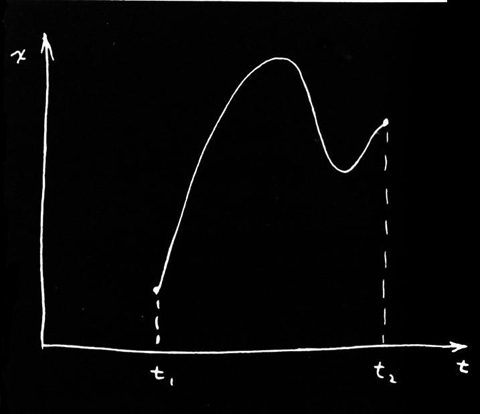

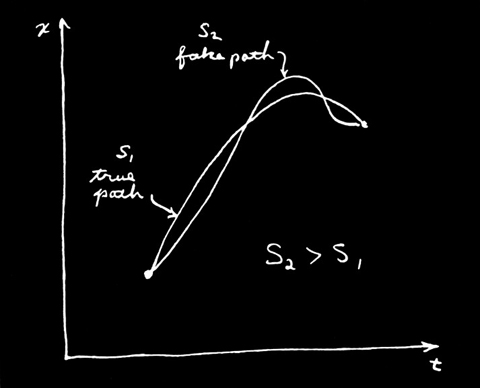

“Then the integral is \begin{equation*} \int_{t_1}^{t_2}\biggl[ \frac{1}{2}m\biggl(\frac{dx}{dt}\biggr)^2-mgx\biggr]dt. \end{equation*} The actual motion is some kind of a curve—it’s a parabola if we plot against the time—and gives a certain value for the integral. But we could imagine some other motion that went very high and came up and down in some peculiar way (Fig. 19–4). We can calculate the kinetic energy minus the potential energy and integrate for such a path … or for any other path we want. The miracle is that the true path is the one for which that integral is least.
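To make the “miracle” concrete, here is a minimal numerical sketch that is not part of the lecture. It assumes a ball of mass $m=1$ with $g=9.8$, thrown so that $x=0$ at $t_1=0$ and again at $t_2=2$; the helper names `action` and `wiggled` and the midpoint discretization are choices made only for this illustration. It compares the true parabola $x(t)=\tfrac{g}{2}t(t_2-t)$ with the same parabola plus a wiggle $a\sin(\pi t/t_2)$, which keeps both endpoints and the travel time fixed.

```python
import math

def action(path, t1, t2, m=1.0, g=9.8):
    """Discretized S = ∫ [(m/2)(dx/dt)^2 - m g x] dt for a path sampled at equal time steps."""
    n = len(path) - 1
    h = (t2 - t1) / n
    s = 0.0
    for k in range(n):
        v = (path[k + 1] - path[k]) / h          # average velocity over the k-th step
        x_mid = 0.5 * (path[k] + path[k + 1])    # height halfway through the step
        s += (0.5 * m * v * v - m * g * x_mid) * h
    return s

t1, t2, n, g = 0.0, 2.0, 200, 9.8
ts = [t1 + (t2 - t1) * k / n for k in range(n + 1)]
true_path = [0.5 * g * t * (t2 - t) for t in ts]    # parabola solving x'' = -g with x(0) = x(2) = 0

def wiggled(a):
    """Same endpoints and same travel time, but a different motion in between."""
    return [x + a * math.sin(math.pi * t / t2) for x, t in zip(true_path, ts)]

for a in (-1.0, -0.1, 0.1, 1.0):
    print(a, action(wiggled(a), t1, t2) - action(true_path, t1, t2))  # positive for every a
```

Whichever sign or size of wiggle is chosen, the false path has the larger action.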

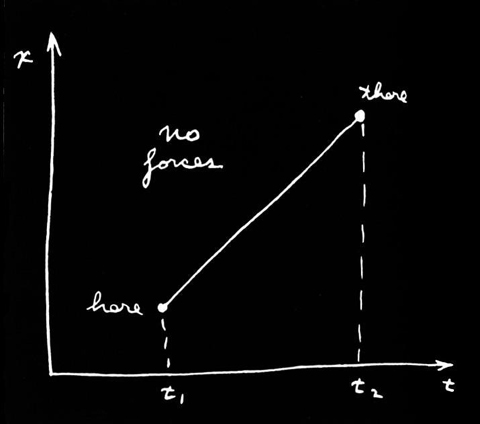

“Let’s try it out. First, suppose we take the case of a free particle for which there is no potential energy at all. Then the rule says that in going from one point to another in a given amount of time, the kinetic energy integral is least, so it must go at a uniform speed. (We know that’s the right answer—to go at a uniform speed.) Why is that? Because if the particle were to go any other way, the velocities would be sometimes higher and sometimes lower than the average. The average velocity is the same for every case because it has to get from ‘here’ to ‘there’ in a given amount of time.

“As an example, say your job is to start from home and get to school in a given length of time with the car. You can do it several ways: You can accelerate like mad at the beginning and slow down with the brakes near the end, or you can go at a uniform speed, or you can go backwards for a while and then go forward, and so on. The thing is that the average speed has got to be, of course, the total distance that you have gone over the time. But if you do anything but go at a uniform speed, then sometimes you are going too fast and sometimes you are going too slow. Now the mean square of something that deviates around an average, as you know, is always greater than the square of the mean; so the kinetic energy integral would always be higher if you wobbled your velocity than if you went at a uniform velocity. So we see that the integral is a minimum if the velocity is a constant (when there are no forces). The correct path is shown in Fig. 19–5.
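A quick check of the mean-square argument, as a sketch with made-up numbers (a 10 m trip in 1 s, unit mass, ten equal time steps). Every schedule covers the same distance in the same time, but the uniform one gives the smallest kinetic-energy integral.

```python
def kinetic_integral(speeds, dt, m=1.0):
    """Sum of (m/2) v^2 dt over equal time steps: the kinetic-energy integral of the trip."""
    return sum(0.5 * m * v * v * dt for v in speeds)

dt = 0.1                                   # ten steps of 0.1 s: a one-second trip
uniform = [10.0] * 10                      # steady speed
wobbly = [15.0, 5.0] * 5                   # too fast, too slow, too fast, ...
backwards = [-5.0] * 2 + [13.75] * 8       # reverse for a while, then catch up

for name, speeds in (("uniform", uniform), ("wobbly", wobbly), ("backwards", backwards)):
    distance = sum(v * dt for v in speeds)  # 10 m in every case
    print(name, round(distance, 9), kinetic_integral(speeds, dt))
```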

“Now, an object thrown up in a gravitational field does rise faster first and then slow down. That is because there is also the potential energy, and we must have the least difference of kinetic and potential energy on the average. Because the potential energy rises as we go up in space, we will get a lower difference if we can get as soon as possible up to where there is a high potential energy. Then we can take that potential away from the kinetic energy and get a lower average. So it is better to take a path which goes up and gets a lot of negative stuff from the potential energy (Fig. 19–6).

“On the other hand, you can’t go up too fast, or too far, because you will then have too much kinetic energy involved—you have to go very fast to get way up and come down again in the fixed amount of time available. So you don’t want to go too far up, but you want to go up some. So it turns out that the solution is some kind of balance between trying to get more potential energy with the least amount of extra kinetic energy—trying to get the difference, kinetic minus the potential, as small as possible.

“That is all my teacher told me, because he was a very good teacher and knew when to stop talking. But I don’t know when to stop talking. So instead of leaving it as an interesting remark, I am going to horrify and disgust you with the complexities of life by proving that it is so. The kind of mathematical problem we will have is very difficult and a new kind. We have a certain quantity which is called the action, $S$. It is the kinetic energy, minus the potential energy, integrated over time. \begin{equation*} \text{Action}=S=\int_{t_1}^{t_2} (\text{KE}-\text{PE})\,dt. \end{equation*} Remember that the PE and KE are both functions of time. For each different possible path you get a different number for this action. Our mathematical problem is to find out for what curve that number is the least.

“You say—Oh, that’s just the ordinary calculus of maxima and minima. You calculate the action and just differentiate to find the minimum.

“But watch out. Ordinarily we just have a function of some variable, and we have to find the value of that variable where the function is least or most. For instance, we have a rod which has been heated in the middle and the heat is spread around. For each point on the rod we have a temperature, and we must find the point at which that temperature is largest. But now for each path in space we have a number—quite a different thing—and we have to find the path in space for which the number is the minimum. That is a completely different branch of mathematics. It is not the ordinary calculus. In fact, it is called the calculus of variations.

“There are many problems in this kind of mathematics. For example, the circle is usually defined as the locus of all points at a constant distance from a fixed point, but another way of defining a circle is this: a circle is that curve of given length which encloses the biggest area. Any other curve encloses less area for a given perimeter than the circle does. So if we give the problem: find that curve which encloses the greatest area for a given perimeter, we would have a problem of the calculus of variations—a different kind of calculus than you’re used to.
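A small numerical illustration of the circle property, restricted to regular polygons, so it is a check rather than a proof: for a fixed perimeter $P$, the enclosed area grows with the number of sides and always stays below the circle’s $P^2/(4\pi)$. The function name is an illustrative choice.

```python
import math

def regular_polygon_area(perimeter, sides):
    """Area enclosed by a regular polygon with the given perimeter: P^2 / (4 n tan(pi/n))."""
    return perimeter ** 2 / (4 * sides * math.tan(math.pi / sides))

P = 1.0
circle_area = P ** 2 / (4 * math.pi)   # circle whose circumference is P
for sides in (3, 4, 6, 12, 100):
    print(sides, regular_polygon_area(P, sides), circle_area)
```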

“So we make the calculation for the path of an object. Here is the way we are going to do it. The idea is that we imagine that there is a true path and that any other curve we draw is a false path, so that if we calculate the action for the false path we will get a value that is bigger than if we calculate the action for the true path (Fig. 19–7).

“Problem: Find the true path. Where is it? One way, of course, is to calculate the action for millions and millions of paths and look at which one is lowest. When you find the lowest one, that’s the true path.
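Instead of literally trying millions of paths, a computer can walk “downhill” through the space of paths. This sketch is an illustration, not the lecture’s method: it samples the path at $n+1$ equally spaced times, keeps the endpoints fixed, and repeatedly nudges each interior point against the gradient of the discretized action for a ball in uniform gravity. The function names, the straight-line starting guess, and the step size are all assumptions of the sketch.

```python
def action_gradient(path, h, m=1.0, g=9.8):
    """dS/dx_j of S = sum over steps of [(m/2)((x_{k+1}-x_k)/h)^2 - m g (x_k + x_{k+1})/2] h."""
    grad = [0.0] * len(path)                     # endpoints keep zero gradient: they stay fixed
    for j in range(1, len(path) - 1):
        grad[j] = m * (2 * path[j] - path[j - 1] - path[j + 1]) / h - m * g * h
    return grad

def find_true_path(x_start, x_end, t1, t2, n=20, steps=5000, m=1.0, g=9.8):
    """Start from a straight line and slide downhill in action until the path stops changing."""
    h = (t2 - t1) / n
    path = [x_start + (x_end - x_start) * k / n for k in range(n + 1)]
    rate = h / (2 * m)                           # keeps every mode of this quadratic action shrinking
    for _ in range(steps):
        grad = action_gradient(path, h, m, g)
        path = [x - rate * dx for x, dx in zip(path, grad)]
    return path

t1, t2, g = 0.0, 2.0, 9.8
path = find_true_path(0.0, 0.0, t1, t2)
n = len(path) - 1
exact = [0.5 * g * t * (t2 - t) for t in (t1 + (t2 - t1) * k / n for k in range(n + 1))]
print(max(abs(a - b) for a, b in zip(path, exact)))   # essentially zero: descent lands on the parabola
```

The gradient vanishes exactly when $m(x_{j+1}-2x_j+x_{j-1})/h^2=-mg$ at every interior point, which is the sampled parabola; that is the same condition the lecture derives next by a cleverer route.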

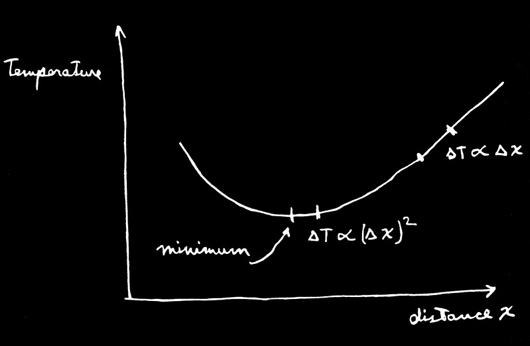

“That’s a possible way. But we can do it better than that. When we have a quantity which has a minimum—for instance, in an ordinary function like the temperature—one of the properties of the minimum is that if we go away from the minimum in the first order, the deviation of the function from its minimum value is only second order. At any place else on the curve, if we move a small distance the value of the function changes also in the first order. But at a minimum, a tiny motion away makes, in the first approximation, no difference (Fig. 19–8).

“That is what we are going to use to calculate the true path. If we have the true path, a curve which differs only a little bit from it will, in the first approximation, make no difference in the action. Any difference will be in the second approximation, if we really have a minimum.

“That is easy to prove. If there is a change in the first order when I deviate the curve a certain way, there is a change in the action that is proportional to the deviation. The change presumably makes the action greater; otherwise we haven’t got a minimum. But then if the change is proportional to the deviation, reversing the sign of the deviation will make the action less. We would get the action to increase one way and to decrease the other way. The only way that it could really be a minimum is that in the first approximation it doesn’t make any change, that the changes are proportional to the square of the deviations from the true path.
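The argument can be watched numerically. In this sketch (a toy setup: unit mass, $g=9.8$, $x=0$ at $t=0$ and $t=2$, and a hypothetical helper `change`), a path is shifted by $\epsilon\,\eta(t)$ with $\eta$ vanishing at both ends. For the true parabola the change in action is second order: positive for both signs of $\epsilon$, and four times larger when $\epsilon$ doubles. For a false path that just stays on the ground, the change is first order and flips sign with $\epsilon$, so that path cannot be a minimum.

```python
import math

def action(path, h, m=1.0, g=9.8):
    """Discretized S = sum over steps of [(m/2)((x_{k+1}-x_k)/h)^2 - m g (x_k + x_{k+1})/2] h."""
    return sum((0.5 * m * ((b - a) / h) ** 2 - m * g * 0.5 * (a + b)) * h
               for a, b in zip(path, path[1:]))

t2, n, g = 2.0, 200, 9.8
h = t2 / n
ts = [k * h for k in range(n + 1)]
eta = [math.sin(math.pi * t / t2) for t in ts]          # the shift; zero at both ends
true_path = [0.5 * g * t * (t2 - t) for t in ts]        # solves x'' = -g
false_path = [0.0] * (n + 1)                            # same ends, but never leaves the ground

def change(path, eps):
    """S(path + eps * eta) - S(path)."""
    shifted = [x + eps * e for x, e in zip(path, eta)]
    return action(shifted, h) - action(path, h)

for eps in (0.01, -0.01, 0.02):
    print(eps, change(true_path, eps), change(false_path, eps))
```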

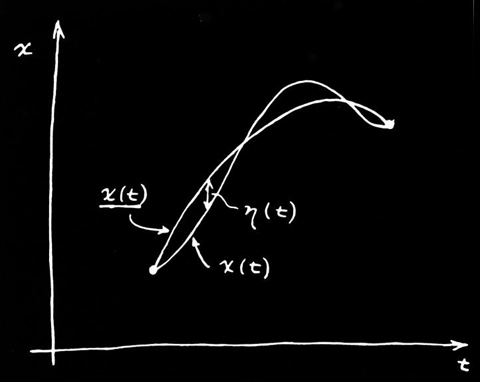

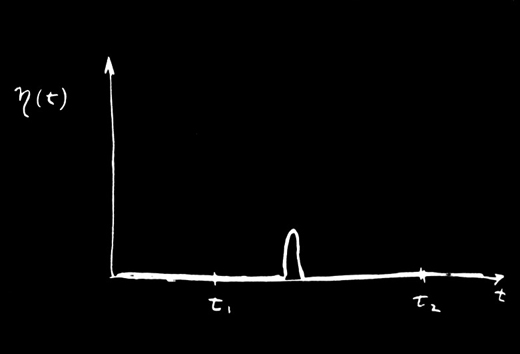

“So we work it this way: We call $\underline{x(t)}$ (with an underline) the true path—the one we are trying to find. We take some trial path $x(t)$ that differs from the true path by a small amount which we will call $\eta(t)$ (eta of $t$; Fig. 19–9).

“Now the idea is that if we calculate the action $S$ for the path $x(t)$, then the difference between that $S$ and the action that we calculated for the path $\underline{x(t)}$—to simplify the writing we can call it $\underline{S}$—the difference of $\underline{S}$ and $S$ must be zero in the first-order approximation of small $\eta$. It can differ in the second order, but in the first order the difference must be zero.

“And that must be true for any $\eta$ at all. Well, not quite. The method doesn’t mean anything unless you consider paths which all begin and end at the same two points—each path begins at a certain point at $t_1$ and ends at a certain other point at $t_2$, and those points and times are kept fixed. So the deviations in our $\eta$ have to be zero at each end, $\eta(t_1)=0$ and $\eta(t_2)=0$. With that condition, we have specified our mathematical problem.

“If you didn’t know any calculus, you might do the same kind of thing to find the minimum of an ordinary function $f(x)$. You could discuss what happens if you take $f(x)$ and add a small amount $h$ to $x$ and argue that the correction to $f(x)$ in the first order in $h$ must be zero at the minimum. You would substitute $x+h$ for $x$ and expand out to the first order in $h$ … just as we are going to do with $\eta$.

“The idea is then that we substitute $x(t)=\underline{x(t)}+\eta(t)$ in the formula for the action: \begin{equation*} S=\int\biggl[ \frac{m}{2}\biggl(\frac{dx}{dt}\biggr)^2-V(x) \biggr]dt, \end{equation*} where I call the potential energy $V(x)$. The derivative $dx/dt$ is, of course, the derivative of $\underline{x(t)}$ plus the derivative of $\eta(t)$, so for the action I get this expression: \begin{equation*} S=\int_{t_1}^{t_2}\biggl[ \frac{m}{2}\biggl( \frac{d\underline{x}}{dt}+\frac{d\eta}{dt} \biggr)^2-V(\underline{x}+\eta) \biggr]dt. \end{equation*}

“Now I must write this out in more detail. For the squared term I get \begin{equation*} \biggl(\frac{d\underline{x}}{dt}\biggr)^2+ 2\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}+ \biggl(\frac{d\eta}{dt}\biggr)^2. \end{equation*} But wait. I’m not worrying about higher than the first order, so I will take all the terms which involve $\eta^2$ and higher powers and put them in a little box called ‘second and higher order.’ From this term I get only second order, but there will be more from something else. So the kinetic energy part is \begin{equation*} \frac{m}{2}\biggl(\frac{d\underline{x}}{dt}\biggr)^2+ m\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}+ (\text{second and higher order}). \end{equation*}

“Now we need the potential $V$ at $\underline{x}+\eta$. I consider $\eta$ small, so I can write $V(x)$ as a Taylor series. It is approximately $V(\underline{x})$; in the next approximation (from the ordinary nature of derivatives) the correction is $\eta$ times the rate of change of $V$ with respect to $x$, and so on: \begin{equation*} V(\underline{x}+\eta)=V(\underline{x})+ \eta V'(\underline{x})+\frac{\eta^2}{2}\,V''(\underline{x})+\dotsb \end{equation*} I have written $V'$ for the derivative of $V$ with respect to $x$ in order to save writing. The term in $\eta^2$ and the ones beyond fall into the ‘second and higher order’ category and we don’t have to worry about them. Putting it all together, \begin{align*} S=\int_{t_1}^{t_2}\biggl[ &\frac{m}{2}\biggl(\frac{d\underline{x}}{dt}\biggr)^2-V(\underline{x})+ m\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}\\ &-\eta V'(\underline{x})+(\text{second and higher order})\biggr]dt. \end{align*} Now if we look carefully at the thing, we see that the first two terms which I have arranged here correspond to the action $\underline{S}$ that I would have calculated with the true path $\underline{x}$. The thing I want to concentrate on is the change in $S$—the difference between the $S$ and the $\underline{S}$ that we would get for the right path. This difference we will write as $\delta S$, called the variation in $S$. Leaving out the ‘second and higher order’ terms, I have for $\delta S$ \begin{equation*} \delta S=\int_{t_1}^{t_2}\biggl[ m\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}-\eta V'(\underline{x}) \biggr]dt. \end{equation*}

“Now the problem is this: Here is a certain integral. I don’t know what the $\underline{x}$ is yet, but I do know that no matter what $\eta$ is, this integral must be zero. Well, you think, the only way that that can happen is that what multiplies $\eta$ must be zero. But what about the first term with $d\eta/dt$? Well, after all, if $\eta$ can be anything at all, its derivative is anything also, so you conclude that the coefficient of $d\eta/dt$ must also be zero. That isn’t quite right. It isn’t quite right because there is a connection between $\eta$ and its derivative; they are not absolutely independent, because $\eta(t)$ must be zero at both $t_1$ and $t_2$.

“The method of solving all problems in the calculus of variations always uses the same general principle. You make the shift in the thing you want to vary (as we did by adding $\eta$); you look at the first-order terms; then you always arrange things in such a form that you get an integral of the form ‘some kind of stuff times the shift $(\eta)$,’ but with no other derivatives (no $d\eta/dt$). It must be rearranged so it is always ‘something’ times $\eta$. You will see the great value of that in a minute. (There are formulas that tell you how to do this in some cases without actually calculating, but they are not general enough to be worth bothering about; the best way is to calculate it out this way.)

“How can I rearrange the term in $d\eta/dt$ to make it have an $\eta$? I can do that by integrating by parts. It turns out that the whole trick of the calculus of variations consists of writing down the variation of $S$ and then integrating by parts so that the derivatives of $\eta$ disappear. It is always the same in every problem in which derivatives appear.

“You remember the general principle for integrating by parts. If you have any function $f$ times $d\eta/dt$ integrated with respect to $t$, you write down the derivative of $\eta f$: \begin{equation*} \frac{d}{dt}(\eta f)=\eta\,\frac{df}{dt}+f\,\frac{d\eta}{dt}. \end{equation*} The integral you want is over the last term, so \begin{equation*} \int f\,\frac{d\eta}{dt}\,dt=\eta f-\int\eta\,\frac{df}{dt}\,dt. \end{equation*}

“In our formula for $\delta S$, the function $f$ is $m$ times $d\underline{x}/dt$; therefore, I have the following formula for $\delta S$: \begin{equation*} \delta S=\left.m\,\frac{d\underline{x}}{dt}\,\eta(t)\right|_{t_1}^{t_2}- \int_{t_1}^{t_2}\frac{d}{dt}\biggl(m\,\frac{d\underline{x}}{dt}\biggr)\eta(t)\,dt- \int_{t_1}^{t_2}V'(\underline{x})\,\eta(t)\,dt. \end{equation*} The first term must be evaluated at the two limits $t_1$ and $t_2$. Then I must have the integral from the rest of the integration by parts. The last term is brought down without change.

“Now comes something which always happens—the integrated part disappears. (In fact, if the integrated part does not disappear, you restate the principle, adding conditions to make sure it does!)
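Written out as a worked step: because $\eta(t_1)=\eta(t_2)=0$, the integrated part $m\,(d\underline{x}/dt)\,\eta\big|_{t_1}^{t_2}$ is zero, and the two remaining integrals combine into the single form “something times $\eta$”:
\begin{equation*} \delta S=\int_{t_1}^{t_2}\biggl[-m\,\frac{d^2\underline{x}}{dt^2}-V'(\underline{x})\biggr]\eta(t)\,dt. \end{equation*}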

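2024.6.22: Within the limits of keeping the skeleton of the flow intact, one may assume or neglect things; e.g., assumptions, choice of gauge.

In the sense of following the context... when attempting a new change, it must be consistent with everything else we know; e.g., Lorentz contraction, the discovery of Planck’s constant.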

“We have that an integral of something or other times $\eta(t)$ is always zero: \begin{equation*} \int F(t)\,\eta(t)\,dt=0. \end{equation*} I have some function of $t$; I multiply it by $\eta(t)$; and I integrate it from one end to the other. And no matter what the $\eta$ is, I get zero. That means that the function $F(t)$ is zero. That’s obvious, but anyway I’ll show you one kind of proof.

“Suppose that for $\eta(t)$ I took something which was zero for all $t$ except right near one particular value. It stays zero until it gets to this $t$, then it blips up for a moment and blips right back down (Fig. 19–10). When we do the integral of this $\eta$ times any function $F$, the only place that you get anything other than zero was where $\eta(t)$ was blipping, and then you get the value of $F$ at that place times the integral over the blip. The integral over the blip alone isn’t zero, but when multiplied by $F$ it has to be; so the function $F$ has to be zero where the blip was. But the blip was anywhere I wanted to put it, so $F$ must be zero everywhere.
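The blip argument as a numerical sketch: a narrow bump $\eta$ of unit area placed at $t_0$ picks out $F(t_0)$ as the bump shrinks. So if the integral were zero for every placement of the blip, $F$ would have to vanish at every $t_0$. The triangular bump shape, the test function `F`, and the midpoint-rule integration are illustrative assumptions; any narrow bump would do.

```python
import math

def integral_with_blip(F, t0, width, t1=0.0, t2=1.0, n=100_000):
    """Midpoint-rule estimate of ∫ F(t) η(t) dt, where η is a triangular blip of unit area at t0."""
    h = (t2 - t1) / n
    total = 0.0
    for k in range(n):
        t = t1 + (k + 0.5) * h
        eta = max(0.0, 1.0 - abs(t - t0) / width) / width   # zero unless |t - t0| < width
        total += F(t) * eta * h
    return total

def F(t):
    return math.cos(3 * t) + t ** 2          # an arbitrary smooth function for the demonstration

for width in (0.1, 0.01, 0.001):
    print(width, integral_with_blip(F, 0.4, width), F(0.4))   # the integral approaches F(0.4)
```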

“We see that if our integral is zero for any $\eta$, then the coefficient of $\eta$ must be zero. The action integral will be a minimum for the path that satisfies this complicated differential equation: \begin{equation*} \biggl[-m\,\frac{d^2\underline{x}}{dt^2}-V'(\underline{x})\biggr]=0. \end{equation*} It’s not really so complicated; you have seen it before. It is just $F=ma$. The first term is the mass times acceleration, and the second is the derivative of the potential energy, which is the force.
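Setting the first-order change to zero also works on a sampled path, and it gives a practical way to compute motion. In this sketch (an illustration, not from the lecture) the action is discretized with the potential evaluated at the sample points; making it stationary with respect to each interior sample $x_j$ gives $m\,(x_{j+1}-2x_j+x_{j-1})/h^2=-V'(x_j)$, a discrete form of $F=ma$. Marching that equation forward step by step is the Störmer–Verlet method. The spring potential $V=\tfrac{1}{2}kx^2$, the function name, and all parameter values are assumptions chosen for the example.

```python
import math

def discrete_newton(x0, v0, h, steps, force, m=1.0):
    """March m (x_{j+1} - 2 x_j + x_{j-1}) / h^2 = F(x_j) forward in time (Stormer-Verlet)."""
    xs = [x0, x0 + h * v0 + 0.5 * h * h * force(x0) / m]   # first step from a Taylor expansion
    for j in range(1, steps):
        xs.append(2 * xs[j] - xs[j - 1] + h * h * force(xs[j]) / m)
    return xs

k = 1.0

def spring(x):
    return -k * x                               # F = -dV/dx for V = k x^2 / 2

h, steps = 0.001, 1000
xs = discrete_newton(1.0, 0.0, h, steps, spring)
print(xs[-1], math.cos(1.0))                    # position at t = 1 vs the exact cos(t)
```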

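2024.5.28: Least action means moving only as much as the qi moves, so as to reach equilibrium.

Gravity arises from the flow of qi; it is different from a person deliberately swinging a hammer or carrying an object. Einstein’s correction of the distance exponent in universal gravitation!

12.14: In space-time, least action = maximum elapsed (proper) time.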

“So, for a conservative system at least, we have demonstrated that the principle of least action gives the right answer; it says that the path that has the minimum action is the one satisfying Newton’s law.

“One remark: I did not prove it was a minimum—maybe it’s a maximum. In fact, it doesn’t really have to be a minimum. It is quite analogous to what we found for the ‘principle of least time’ which we discussed in optics. There also, we said at first it was ‘least’ time. It turned out, however, that there were situations in which it wasn’t the least time. The fundamental principle was that for any first-order variation away from the optical path, the change in time was zero; it is the same story. What we really mean by ‘least’ is that the first-order change in the value of $S$, when you change the path, is zero. It is not necessarily a ‘minimum.’
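A sketch of a case where the true path is stationary but not a minimum (a standard example, not worked in the lecture): a mass on a spring, $V=\tfrac{1}{2}kx^2$ with $m=k=1$, held at $x=0$ at both ends. The path $x(t)=0$ satisfies Newton’s law for any duration $T$. Adding the wiggle $a\sin(\pi t/T)$ changes the action by about $a^2\bigl(\pi^2/(4T)-T/4\bigr)$, which is positive for $T=2$ but negative for $T=4$. Once $T$ exceeds half a period ($\pi$ here), the first-order change is still zero but the action is no longer least. The function names and discretization are assumptions of the sketch.

```python
import math

def spring_action(path, h, m=1.0, k=1.0):
    """Discretized S = sum over steps of [(m/2)((x_{j+1}-x_j)/h)^2 - (k/2)(x_j^2 + x_{j+1}^2)/2] h."""
    return sum((0.5 * m * ((b - a) / h) ** 2 - 0.25 * k * (a * a + b * b)) * h
               for a, b in zip(path, path[1:]))

def wiggle_change(T, a=0.1, n=1000):
    """Action of x = a sin(pi t / T) minus the action of the true path x = 0 (which is 0)."""
    h = T / n
    path = [a * math.sin(math.pi * j * h / T) for j in range(n + 1)]
    return spring_action(path, h)

for T in (2.0, 4.0):
    print(T, wiggle_change(T), 0.1 ** 2 * (math.pi ** 2 / (4 * T) - T / 4))
```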

“Next, I remark on some generalizations. In the first place, the thing can be done in three dimensions. Instead of just $x$, I would have $x$, $y$, and $z$ as functions of $t$; the action is more complicated. For three-dimensional motion, you have to use the complete kinetic energy—$(m/2)$ times the whole velocity squared. That is, \begin{equation*} \text{KE}=\frac{m}{2}\biggl[ \biggl(\frac{dx}{dt}\biggr)^2\!\!+\biggl(\frac{dy}{dt}\biggr)^2\!\!+ \biggl(\frac{dz}{dt}\biggr)^2\,\biggr]. \end{equation*} Also, the potential energy is a function of $x$, $y$, and $z$. And what about the path? The path is some general curve in space, which is not so easily drawn, but the idea is the same. And what about the $\eta$? Well, $\eta$ can have three components. You could shift the paths in $x$, or in $y$, or in $z$—or you could shift in all three directions simultaneously. So $\eta$ would be a vector. This doesn’t really complicate things too much, though. Since only the first-order variation has to be zero, we can do the calculation by three successive shifts. We can shift $\eta$ only in the $x$-direction and say that coefficient must be zero. We get one equation. Then we shift it in the $y$-direction and get another. And in the $z$-direction and get another. Or, of course, in any order that you want. Anyway, you get three equations. And, of course, Newton’s law is really three equations in the three dimensions—one for each component. I think that you can practically see that it is bound to work, but we will leave you to show for yourself that it will work for three dimensions. Incidentally, you could use any coordinate system you want, polar or otherwise, and get Newton’s laws appropriate to that system right off by seeing what happens if you have the shift $\eta$ in radius, or in angle, etc.
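As a worked illustration of that last remark (a standard example, not worked in the lecture), take a particle moving in a plane under a potential $V(r)$ that depends only on the distance from the origin, and write dots for time derivatives. In polar coordinates the action is
\begin{equation*} S=\int_{t_1}^{t_2}\Bigl[\frac{m}{2}\bigl(\dot r^2+r^2\dot\theta^2\bigr)-V(r)\Bigr]dt. \end{equation*}
Shifting $r\to r+\eta$ and integrating by parts gives
\begin{equation*} \delta S=\int_{t_1}^{t_2}\bigl[-m\ddot r+mr\dot\theta^2-V'(r)\bigr]\eta\,dt, \quad\text{so}\quad m\ddot r=mr\dot\theta^2-V'(r), \end{equation*}
which includes the centrifugal term $mr\dot\theta^2$. Shifting $\theta\to\theta+\eta$ instead gives
\begin{equation*} \delta S=-\int_{t_1}^{t_2}\frac{d}{dt}\bigl(mr^2\dot\theta\bigr)\,\eta\,dt, \quad\text{so}\quad \frac{d}{dt}\bigl(mr^2\dot\theta\bigr)=0, \end{equation*}
which is the conservation of angular momentum.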

“Similarly, the method can be generalized to any number of particles. If you have, say, two particles with a force between them, so that there is a mutual potential energy, then you just add the kinetic energy of both particles and take the potential energy of the mutual interaction. And what do you vary? You vary the paths of both particles. Then, for two particles moving in three dimensions, there are six equations. You can vary the position of particle $1$ in the $x$-direction, in the $y$-direction, and in the $z$-direction, and similarly for particle $2$; so there are six equations. And that’s as it should be. There are the three equations that determine the acceleration of particle $1$ in terms of the force on it and three for the acceleration of particle $2$, from the force on it. You follow the same game through, and you get Newton’s law in three dimensions for any number of particles.
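In symbols, as a sketch for two particles whose mutual potential energy $V$ depends only on their separation (with $\nabla V$ meaning the gradient of $V$ evaluated at $\mathbf{r}_1-\mathbf{r}_2$):
\begin{equation*} S=\int_{t_1}^{t_2}\Bigl[\frac{m_1}{2}\Bigl|\frac{d\mathbf{r}_1}{dt}\Bigr|^2+\frac{m_2}{2}\Bigl|\frac{d\mathbf{r}_2}{dt}\Bigr|^2-V(\mathbf{r}_1-\mathbf{r}_2)\Bigr]dt. \end{equation*}
Varying each of the six coordinates in turn gives
\begin{equation*} m_1\frac{d^2\mathbf{r}_1}{dt^2}=-\nabla V,\qquad m_2\frac{d^2\mathbf{r}_2}{dt^2}=+\nabla V, \end{equation*}
so the two forces come out equal and opposite, as Newton’s third law requires.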

“I have been saying that we get Newton’s law. That is not quite true, because Newton’s law includes nonconservative forces like friction. Newton said that $ma$ is equal to any $F$. But the principle of least action only works for conservative systems—where all forces can be gotten from a potential function. You know, however, that on a microscopic level—on the deepest level of physics—there are no nonconservative forces. Nonconservative forces, like friction, appear only because we neglect microscopic complications—there are just too many particles to analyze. But the fundamental laws can be put in the form of a principle of least action.

2024.6.16: Feynman’s point that all the fundamental laws of physics can be put in the form of a principle of least action; the differential statement only involves the derivatives of the potential.

“Let me generalize still further. Suppose we ask what happens if the particle moves relativistically. We did not get the right relativistic equation of motion; $F=ma$ is only right nonrelativistically. The question is: Is there a corresponding principle of least action for the relativistic case? There is. The formula in the case of relativity is the following: \begin{equation*} S=-m_0c^2\int_{t_1}^{t_2}\sqrt{1-v^2/c^2}\,dt- q\int_{t_1}^{t_2}[\phi(x,y,z,t)-\mathbf{v}\cdot \mathbf{A}(x,y,z,t)]\,dt. \end{equation*} The first part of the action integral is the rest mass $m_0$ times $c^2$ times the integral of a function of velocity, $\sqrt{1-v^2/c^2}$. Then instead of just the potential energy, we have an integral over the scalar potential $\phi$ and over $\mathbf{v}$ times the vector potential $\mathbf{A}$. Of course, we are then including only electromagnetic forces. All electric and magnetic fields are given in terms of $\phi$ and $\mathbf{A}$. This action function gives the complete theory of relativistic motion of a single particle in an electromagnetic field.

“Of course, wherever I have written $\mathbf{v}$, you understand that before you try to figure anything out, you must substitute $dx/dt$ for $v_x$ and so on for the other components. Also, you put the point along the path at time $t$, $x(t)$, $y(t)$, $z(t)$ where I wrote simply $x$, $y$, $z$. Properly, it is only after you have made those replacements for the $\mathbf{v}$’s that you have the formula for the action for a relativistic particle. I will leave to the more ingenious of you the problem to demonstrate that this action formula does, in fact, give the correct equations of motion for relativity. May I suggest you do it first without the $\mathbf{A}$, that is, for no magnetic field? Then you should get the components of the equation of motion, $d\mathbf{p}/dt=-q\nabla\phi$, where, you remember, $\mathbf{p}=m_0\mathbf{v}/\sqrt{1-v^2/c^2}$.
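A quick numerical check of the key step in that exercise, as a sketch assuming units with $c=1$ and $m_0=1$ and no fields: the derivative of the free-particle Lagrangian $-m_0c^2\sqrt{1-v^2/c^2}$ with respect to $v_x$ should equal $p_x=m_0v_x/\sqrt{1-v^2/c^2}$, the quantity that appears after integrating by parts. The function names and the sample velocity are illustrative.

```python
import math

C, M0 = 1.0, 1.0   # assumed units: c = 1, rest mass = 1

def free_lagrangian(vx, vy, vz):
    """L = -m0 c^2 sqrt(1 - v^2/c^2) for a free relativistic particle."""
    v2 = vx * vx + vy * vy + vz * vz
    return -M0 * C * C * math.sqrt(1.0 - v2 / (C * C))

def momentum_x(vx, vy, vz):
    """p_x = m0 v_x / sqrt(1 - v^2/c^2)."""
    v2 = vx * vx + vy * vy + vz * vz
    return M0 * vx / math.sqrt(1.0 - v2 / (C * C))

vx, vy, vz, d = 0.6, 0.3, 0.2, 1e-6
dL_dvx = (free_lagrangian(vx + d, vy, vz) - free_lagrangian(vx - d, vy, vz)) / (2 * d)  # central difference
print(dL_dvx, momentum_x(vx, vy, vz))
```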

“It is much more difficult to include also the case with a vector potential. The variations get much more complicated. But in the end, the force term does come out equal to $q(\mathbf{E}+\mathbf{v}\times\mathbf{B})$, as it should. But I will leave that for you to play with.

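2024.6.3: Write the path as $X(t)=(x(t), y(t), z(t))$ and vary it to $X(t)+\eta(t)$; “s.o.” below stands for second- and higher-order terms in $\eta$.

1. Since $\frac{d}{du}\sqrt{1-u/c^2}=-\frac{1}{2c^2\sqrt{1-u/c^2}}$ and $|X'+\eta'|^2-|X'|^2=2X'\cdot\eta'+|\eta'|^2$,

$-m_0c^2\Bigl(\sqrt{1-|X'(t)+\eta'(t)|^2/c^2}-\sqrt{1-|X'(t)|^2/c^2}\Bigr)$

$=-m_0c^2\cdot\frac{-1}{2c^2\sqrt{1-v^2/c^2}}\,\bigl(|X'(t)+\eta'(t)|^2-|X'(t)|^2\bigr)+\text{s.o.}$

$=\frac{m_0}{2\sqrt{1-v^2/c^2}}\,2\,X'(t)\cdot\eta'(t)+\text{s.o.}=\frac{m_0\mathbf{v}}{\sqrt{1-v^2/c^2}}\cdot\eta'(t)+\text{s.o.}$

$=\mathbf{p}\cdot\eta'(t)+\text{s.o.}=\frac{d}{dt}\bigl(\mathbf{p}\cdot\eta(t)\bigr)-\frac{d\mathbf{p}}{dt}\cdot\eta(t)+\text{s.o.}$

2. $\phi(X(t)+\eta(t))-\phi(X(t))=\nabla\phi\cdot\eta(t)+\text{s.o.}$

3. In $\mathbf{v}\cdot\mathbf{A}$ both the position and the velocity change ($\mathbf{v}\to\mathbf{v}+\eta'$), so

$(\mathbf{v}+\eta'(t))\cdot\mathbf{A}(X(t)+\eta(t))-\mathbf{v}\cdot\mathbf{A}(X(t))=\nabla(\mathbf{v}\cdot\mathbf{A})\cdot\eta(t)+\mathbf{A}\cdot\eta'(t)+\text{s.o.},$

where $\nabla$ acts on $\mathbf{A}$ only: position and velocity are independent variables of the Lagrangian, so no $\nabla\cdot\mathbf{v}$ term arises. Integrating by parts, $\mathbf{A}\cdot\eta'\to-\frac{d\mathbf{A}}{dt}\cdot\eta$, where along the path $\frac{d\mathbf{A}}{dt}=\frac{\partial\mathbf{A}}{\partial t}+(\mathbf{v}\cdot\nabla)\mathbf{A}$.

Formula (d): substituting $a=\mathbf{v}$, $b=\nabla$, $c=\mathbf{A}$ into $a\times(b\times c)=b(a\cdot c)-c(a\cdot b)$, with $\nabla$ acting on $\mathbf{A}$ only, gives $\mathbf{v}\times(\nabla\times\mathbf{A})=\nabla(\mathbf{v}\cdot\mathbf{A})-(\mathbf{v}\cdot\nabla)\mathbf{A}$.

$\Rightarrow$ up to a total derivative, the first-order part of item 3 is $\bigl(\mathbf{v}\times(\nabla\times\mathbf{A})-\frac{\partial\mathbf{A}}{\partial t}\bigr)\cdot\eta(t)$.

Collecting items 1 to 3 with the signs they carry in $S$: $\delta S=\int\bigl[-\frac{d\mathbf{p}}{dt}+q\bigl(-\nabla\phi-\frac{\partial\mathbf{A}}{\partial t}\bigr)+q\,\mathbf{v}\times(\nabla\times\mathbf{A})\bigr]\cdot\eta\,dt$, so $\frac{d\mathbf{p}}{dt}=q(\mathbf{E}+\mathbf{v}\times\mathbf{B})$ with $\mathbf{E}=-\nabla\phi-\partial\mathbf{A}/\partial t$ and $\mathbf{B}=\nabla\times\mathbf{A}$.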

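2024.6.16 & 17: Putting together the pieces of information gathered like the blind men feeling the elephant:

1. the process by which Lorentz contraction and Einstein’s energy formula were obtained, by resolving the contradiction between the Michelson–Morley experiment and existing ideas;

2. keeping in mind that the ultimate goal of Newton’s approach, and of mathematical descriptions of physics, is an approximate simulation of nature, and summarizing:

(1) according to Raymond, $CP^2$ has a $T^2$ action whose orbit space is a weighted disk $D^2$;

(2) $CP^2$ embedded into $R^7$ ... these mathematical arguments, and

(3) the physically obtained

① $F=q(E+v \times B)$,

② the Dirac operator, derived on the basis of established physical conventions,

③ Feynman’s principle of least action.

For all of these to fit together harmoniously, the pullback of $F=q(E+v \times B)$ under the composite of the projections below must be the Dirac operator. Otherwise it has to be modified, just as Einstein modified the exponent in the denominator of Newton’s law of universal gravitation.

4. $\begin{array}{ccc} CP^2 & \hookrightarrow & R^7 \\ \pi\downarrow\;\uparrow\chi & & \\ D^2 & \hookrightarrow & R^3 \end{array}$ ($\chi$: cross section)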

“I would like to emphasize that in the general case, for instance in the relativistic formula, the action integrand no longer has the form of the kinetic energy minus the potential energy. That’s only true in the nonrelativistic approximation. For example, the term $m_0c^2\sqrt{1-v^2/c^2}$ is not what we have called the kinetic energy. The question of what the action should be for any particular case must be determined by some kind of trial and error. It is just the same problem as determining what are the laws of motion in the first place. You just have to fiddle around with the equations that you know and see if you can get them into the form of the principle of least action.
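The nonrelativistic limit can be checked numerically. As a sketch assuming $c=1$, $m_0=1$ and no fields, the gap between $-m_0c^2\sqrt{1-v^2/c^2}$ and $-m_0c^2+\tfrac{1}{2}m_0v^2$ shrinks like $m_0v^4/(8c^2)$ for small $v$. The constant $-m_0c^2$ does not change which path is stationary, so at low speed the integrand is again the kinetic energy minus the potential energy. The function names are illustrative.

```python
import math

def relativistic_term(v, m0=1.0, c=1.0):
    """-m0 c^2 sqrt(1 - v^2/c^2), the velocity-dependent part of the relativistic Lagrangian."""
    return -m0 * c * c * math.sqrt(1.0 - (v / c) ** 2)

def newtonian_approx(v, m0=1.0, c=1.0):
    return -m0 * c * c + 0.5 * m0 * v * v          # rest-energy constant plus kinetic energy

for v in (0.1, 0.01):
    gap = relativistic_term(v) - newtonian_approx(v)
    print(v, gap, v ** 4 / 8)                     # next term of the expansion is m0 v^4 / (8 c^2)
```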

“One other point on terminology. The function that is integrated over time to get the action $S$ is called the Lagrangian, $\mathcal{L}$, which is a function only of the velocities and positions of particles. So the principle of least action is also written \begin{equation*} S=\int_{t_1}^{t_2}\mathcal{L}(x_i,v_i)\,dt, \end{equation*} where by $x_i$ and $v_i$ are meant all the components of the positions and velocities. So if you hear someone talking about the ‘Lagrangian,’ you know they are talking about the function that is used to find $S$. For relativistic motion in an electromagnetic field \begin{equation*} \mathcal{L}=-m_0c^2\sqrt{1-v^2/c^2}-q(\phi-\mathbf{v}\cdot\mathbf{A}). \end{equation*}

“Also, I should say that $S$ is not really called the ‘action’ by the most precise and pedantic people. It is called ‘Hamilton’s first principal function.’ Now I hate to give a lecture on ‘the-principle-of-least-Hamilton’s-first-principal-function.’ So I call it ‘the action.’ Also, more and more people are calling it the action. You see, historically something else which is not quite as useful was called the action, but I think it’s more sensible to change to a newer definition. So now you too will call the new function the action, and pretty soon everybody will call it by that simple name.

“Now I want to say some things on this subject which are similar to the discussions I gave about the principle of least time. There is quite a difference in the characteristic of a law which says a certain integral from one place to another is a minimum—which tells something about the whole path—and of a law which says that as you go along, there is a force that makes it accelerate. The second way tells how you inch your way along the path, and the other is a grand statement about the whole path. In the case of light, we talked about the connection of these two. Now, I would like to explain why it is true that there are differential laws when there is a least action principle of this kind. The reason is the following: Consider the actual path in space and time. As before, let’s take only one dimension, so we can plot the graph of $x$ as a function of $t$. Along the true path, $S$ is a minimum. Let’s suppose that we have the true path and that it goes through some point $a$ in space and time, and also through another nearby point $b$ (Fig. 19–11). Now if the entire integral from $t_1$ to $t_2$ is a minimum, it is also necessary that the integral along the little section from $a$ to $b$ is also a minimum. It can’t be that the part from $a$ to $b$ is a little bit more. Otherwise you could just fiddle with just that piece of the path and make the whole integral a little lower.

“So every subsection of the path must also be a minimum. And this is true no matter how short the subsection. Therefore, the principle that the whole path gives a minimum can be stated also by saying that an infinitesimal section of path also has a curve such that it has a minimum action. Now if we take a short enough section of path—between two points $a$ and $b$ very close together—how the potential varies from one place to another far away is not the important thing, because you are staying almost in the same place over the whole little piece of the path. The only thing that you have to discuss is the first-order change in the potential. The answer can only depend on the derivative of the potential and not on the potential everywhere. So the statement about the gross property of the whole path becomes a statement of what happens for a short section of the path—a differential statement. And this differential statement only involves the derivatives of the potential, that is, the force at a point. That’s the qualitative explanation of the relation between the gross law and the differential law.

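2024.6.2: Force is a second derivative, which is of the same order as a curvature.

Everything comes down to the Gauss-Bonnet theorem, the structure-preservation law.

6.18: see Gauss's law

7.29: Riemann expresses the local situation in terms of the individual.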

“In the case of light we also discussed the question: How does the particle find the right path? From the differential point of view, it is easy to understand. Every moment it gets an acceleration and knows only what to do at that instant. But all your instincts on cause and effect go haywire when you say that the particle decides to take the path that is going to give the minimum action. Does it ‘smell’ the neighboring paths to find out whether or not they have more action? In the case of light, when we put blocks in the way so that the photons could not test all the paths, we found that they couldn’t figure out which way to go, and we had the phenomenon of diffraction.

“Is the same thing true in mechanics? Is it true that the particle doesn’t just ‘take the right path’ but that it looks at all the other possible trajectories? And if by having things in the way, we don’t let it look, that we will get an analog of diffraction? The miracle of it all is, of course, that it does just that. That’s what the laws of quantum mechanics say. So our principle of least action is incompletely stated. It isn’t that a particle takes the path of least action but that it smells all the paths in the neighborhood and chooses the one that has the least action by a method analogous to the one by which light chose the shortest time. You remember that the way light chose the shortest time was this: If it went on a path that took a different amount of time, it would arrive at a different phase. And the total amplitude at some point is the sum of contributions of amplitude for all the different ways the light can arrive. All the paths that give wildly different phases don’t add up to anything. But if you can find a whole sequence of paths which have phases almost all the same, then the little contributions will add up and you get a reasonable total amplitude to arrive. The important path becomes the one for which there are many nearby paths which give the same phase.

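2024.7.4: ‘Smelling’ means bumping around this way and that to find out which direction takes the least effort.

Look at the definition of temperature: it is an average over random jostling. And Einstein's drift equation shows flow toward where mass is sparse.

In other words, things move in the easiest direction, the one that takes the least effort.

7.21: random-jostling phenomena, crystal growth, Brownian motion

7.31: thermal fluctuation, another name for random jostling... slipping out this way and that, lowering the temperature and emitting electromagnetic waves or sound.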

“It is just exactly the same thing for quantum mechanics. The complete quantum mechanics (for the nonrelativistic case and neglecting electron spin) works as follows: The probability that a particle starting at point $1$ at the time $t_1$ will arrive at point $2$ at the time $t_2$ is the square of a probability amplitude. The total amplitude can be written as the sum of the amplitudes for each possible path—for each way of arrival. For every $x(t)$ that we could have—for every possible imaginary trajectory—we have to calculate an amplitude. Then we add them all together. What do we take for the amplitude for each path? Our action integral tells us what the amplitude for a single path ought to be. The amplitude is proportional to some constant times $e^{iS/\hbar}$, where $S$ is the action for that path. That is, if we represent the phase of the amplitude by a complex number, the phase angle is $S/\hbar$. The action $S$ has dimensions of energy times time, and Planck’s constant $\hbar$ has the same dimensions. It is the constant that determines when quantum mechanics is important.

2024.7.3: magnetic phase change

“Here is how it works: Suppose that for all paths, $S$ is very large compared to $\hbar$. One path contributes a certain amplitude. For a nearby path, the phase is quite different, because with an enormous $S$ even a small change in $S$ means a completely different phase—because $\hbar$ is so tiny. So nearby paths will normally cancel their effects out in taking the sum—except for one region, and that is when a path and a nearby path all give the same phase in the first approximation (more precisely, the same action within $\hbar$). Only those paths will be the important ones.
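The cancellation can be seen in a toy calculation. This minimal sketch is an illustration under strong assumptions, not Feynman's computation: it looks at only a one-parameter slice of path space, the true free-fall path plus $\epsilon\sin(\pi t/T)$. For that family the action is exactly $S_0+k\epsilon^2$ with $k=m\pi^2/4T$, because the first-order change vanishes on the true path. Summing $e^{iS/\hbar}$ over a window of $\epsilon$ around the classical path gives a sizable total, while an equally wide window far from it nearly cancels. The values $m=T=1$ and $\hbar=10^{-3}$ are arbitrary units, and the function name is hypothetical.

```python
import numpy as np

def window_amplitude(center, width, hbar, m=1.0, T=1.0, n=50001):
    """Sum exp(i S/hbar) d(eps) over paths x_true(t) + eps*sin(pi t/T), eps in a window.

    For this family S = S0 + k*eps**2 with k = m*pi**2/(4T) exactly.  The common
    factor exp(i S0/hbar) is dropped because it does not change magnitudes.
    """
    k = m * np.pi**2 / (4.0 * T)
    eps = np.linspace(center - width / 2, center + width / 2, n)
    d_eps = eps[1] - eps[0]
    return np.sum(np.exp(1j * k * eps**2 / hbar)) * d_eps

hbar = 1e-3
near = window_amplitude(0.0, 0.5, hbar)   # paths around the classical one
far = window_amplitude(1.0, 0.5, hbar)    # equally many paths, far from it
print(abs(near), abs(far))
```

Raising `hbar` toward 1 makes the far window contribute comparably again, which is the regime where the phase no longer varies rapidly from path to path.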

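2024.6.18: $\hbar$ might be a sort of volume of the basic building block of color structure, i.e., $CP^2$, $S^2 \times S^2$, or the twisted $S^2$ bundle over $S^2$.

7.2: The claim that light explores (‘smells’) many paths comes from the interpretation in which the action, that is, the energy of each path, appears as a phase that contributes to the probability... As the geometric structure of color makes clear, this interpretation should be discarded.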

“Now I want to talk about other minimum principles in physics. There are many very interesting ones. I will not try to list them all now but will only describe one more. Later on, when we come to a physical phenomenon which has a nice minimum principle, I will tell about it then. I want now to show that we can describe electrostatics, not by giving a differential equation for the field, but by saying that a certain integral is a maximum or a minimum. First, let’s take the case where the charge density is known everywhere, and the problem is to find the potential $\phi$ everywhere in space. You know that the answer should be \begin{equation*} \nabla^2\phi=-\rho/\epsO. \end{equation*} But another way of stating the same thing is this: Calculate the integral $U\stared$, where \begin{equation*} U\stared=\frac{\epsO}{2}\int(\FLPgrad{\phi})^2\,dV- \int\rho\phi\,dV, \end{equation*} which is a volume integral to be taken over all space. This thing is a minimum for the correct potential distribution $\phi(x,y,z)$.

“We can show that the two statements about electrostatics are equivalent. Let’s suppose that we pick any function $\phi$. We want to show that when we take for $\phi$ the correct potential $\underline{\phi}$, plus a small deviation $f$, then in the first order, the change in $U\stared$ is zero. So we write \begin{equation*} \phi=\underline{\phi}+f. \end{equation*} The $\underline{\phi}$ is what we are looking for, but we are making a variation of it to find what it has to be so that the variation of $U\stared$ is zero to first order. For the first part of $U\stared$, we need \begin{equation*} (\FLPgrad{\phi})^2=(\FLPgrad{\underline{\phi}})^2+ 2\,\FLPgrad{\underline{\phi}}\cdot\FLPgrad{f}+ (\FLPgrad{f})^2. \end{equation*} The only first-order term that will vary is \begin{equation*} 2\,\FLPgrad{\underline{\phi}}\cdot\FLPgrad{f}. \end{equation*} In the second term of the quantity $U\stared$, the integrand is \begin{equation*} \rho\phi=\rho\underline{\phi}+\rho f, \end{equation*} whose variable part is $\rho f$. So, keeping only the variable parts, we need the integral \begin{equation*} \Delta U\stared=\int(\epsO\FLPgrad{\underline{\phi}}\cdot\FLPgrad{f}- \rho f)\,dV. \end{equation*}

“Now, following the old general rule, we have to get the darn thing all clear of derivatives of $f$. Let’s look at what the derivatives are. The dot product is \begin{equation*} \ddp{\underline{\phi}}{x}\,\ddp{f}{x}+ \ddp{\underline{\phi}}{y}\,\ddp{f}{y}+ \ddp{\underline{\phi}}{z}\,\ddp{f}{z}, \end{equation*} which we have to integrate with respect to $x$, to $y$, and to $z$. Now here is the trick: to get rid of $\ddpl{f}{x}$ we integrate by parts with respect to $x$. That will carry the derivative over onto the $\underline{\phi}$. It’s the same general idea we used to get rid of derivatives with respect to $t$. We use the equality \begin{equation*} \int\ddp{\underline{\phi}}{x}\,\ddp{f}{x}\,dx= f\,\ddp{\underline{\phi}}{x}- \int f\,\frac{\partial^2\underline{\phi}}{\partial x^2}\,dx. \end{equation*} The integrated term is zero, since we have to make $f$ zero at infinity. (That corresponds to making $\eta$ zero at $t_1$ and $t_2$. So our principle should be more accurately stated: $U\stared$ is less for the true $\phi$ than for any other $\phi(x,y,z)$ having the same values at infinity.) Then we do the same thing for $y$ and $z$. So our integral $\Delta U\stared$ is \begin{equation*} \Delta U\stared=\int(-\epsO\nabla^2\underline{\phi}-\rho)f\,dV. \end{equation*} In order for this variation to be zero for any $f$, no matter what, the coefficient of $f$ must be zero and, therefore, \begin{equation*} \nabla^2\underline{\phi}=-\rho/\epsO. \end{equation*} We get back our old equation. So our ‘minimum’ proposition is correct.
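A quick numerical check of the claim that $\Delta U\stared$ vanishes to first order at the true potential. This sketch is a one-dimensional analogue chosen for illustration (uniform $\rho$ between two grounded plates, units with $\epsilon_0=1$), not the three-dimensional case of the lecture, and the helper names are hypothetical. It discretizes $U\stared$ on a grid and estimates the linear part of its change along an arbitrary variation $f$ that vanishes on the plates.

```python
import numpy as np

EPS0 = 1.0  # assumption: units chosen so that epsilon_0 = 1

def u_star(phi, rho, h):
    """Discretized U* = (eps0/2) * integral(phi'^2) - integral(rho * phi) on a uniform grid."""
    grad = np.diff(phi) / h
    return 0.5 * EPS0 * np.sum(grad**2) * h - np.sum(rho * phi) * h

def first_order_change(phi, f, rho, h, delta=1e-4):
    """Central difference in delta of U*(phi + delta*f): the linear part of Delta U*."""
    return (u_star(phi + delta * f, rho, h) - u_star(phi - delta * f, rho, h)) / (2 * delta)

# Hypothetical test problem: uniform charge density between grounded plates at x = 0 and x = L.
L, n, rho0 = 1.0, 200, 1.0
x = np.linspace(0.0, L, n + 1)
h = x[1] - x[0]
rho = np.full_like(x, rho0)
phi_true = rho0 / (2 * EPS0) * x * (L - x)   # satisfies phi'' = -rho/eps0, phi = 0 on both plates
f = x * (L - x) * (1 + 2 * x)                 # an arbitrary variation vanishing on both plates

print(first_order_change(phi_true, f, rho, h))        # should be close to zero
print(first_order_change(0.8 * phi_true, f, rho, h))  # clearly nonzero for a wrong potential
print(u_star(phi_true + 0.1 * f, rho, h) > u_star(phi_true, rho, h))
```

Because the discrete $U\stared$ is quadratic in $\phi$, the change for a finite step is exactly the positive $(\epsilon_0/2)\int(\nabla f)^2$ term, so the stationary point is a minimum.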

“We can generalize our proposition if we do our algebra in a little different way. Let’s go back and do our integration by parts without taking components. We start by looking at the following equality: \begin{equation*} \FLPdiv{(f\,\FLPgrad{\underline{\phi}})}= \FLPgrad{f}\cdot\FLPgrad{\underline{\phi}}+f\,\nabla^2\underline{\phi}. \end{equation*} If I differentiate out the left-hand side, I can show that it is just equal to the right-hand side. Now we can use this equation to integrate by parts. In our integral $\Delta U\stared$, we replace $\FLPgrad{\underline{\phi}}\cdot\FLPgrad{f}$ by $\FLPdiv{(f\,\FLPgrad{\underline{\phi}})}-f\,\nabla^2\underline{\phi}$, which gets integrated over volume. The divergence term integrated over volume can be replaced by a surface integral: \begin{equation*} \int\FLPdiv{(f\,\FLPgrad{\underline{\phi}})}\,dV= \int f\,\FLPgrad{\underline{\phi}}\cdot\FLPn\,da. \end{equation*} Since we are integrating over all space, the surface over which we are integrating is at infinity. There, $f$ is zero and we get the same answer as before.
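Spelled out in components, the differentiation is just the product rule applied to each term of the divergence:
\begin{equation*}
\FLPdiv{(f\,\FLPgrad{\underline{\phi}})}
=\sum_{i=1}^{3}\frac{\partial}{\partial x_i}\biggl(f\,\frac{\partial\underline{\phi}}{\partial x_i}\biggr)
=\sum_{i=1}^{3}\biggl(\frac{\partial f}{\partial x_i}\,\frac{\partial\underline{\phi}}{\partial x_i}+f\,\frac{\partial^2\underline{\phi}}{\partial x_i^2}\biggr)
=\FLPgrad{f}\cdot\FLPgrad{\underline{\phi}}+f\,\nabla^2\underline{\phi}.
\end{equation*}
Each term of the sum, integrated over its own coordinate, is the one-dimensional integration by parts used in the component calculation.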

“Only now we see how to solve a problem when we don’t know where all the charges are. Suppose that we have conductors with charges spread out on them in some way. We can still use our minimum principle if the potentials of all the conductors are fixed. We carry out the integral for $U\stared$ only in the space outside of all conductors. Then, since we can’t vary $\underline{\phi}$ on the conductor, $f$ is zero on all those surfaces, and the surface integral \begin{equation*} \int f\,\FLPgrad{\underline{\phi}}\cdot\FLPn\,da \end{equation*} is still zero. The remaining volume integral \begin{equation*} \Delta U\stared=\int(-\epsO\,\nabla^2\underline{\phi}-\rho)f\,dV \end{equation*} is only to be carried out in the spaces between conductors. Of course, we get Poisson’s equation again, \begin{equation*} \nabla^2\underline{\phi}=-\rho/\epsO. \end{equation*}

2024.6.6: This is confusing; let me sort it out.

Given $\rho$, the lecture shows that the minimum principle leads to $\nabla^2 \underline{\phi}=-\rho/\epsilon_0$, and along the way it shows how to use the divergence theorem.

$\Delta U\stared=\int(\epsO\,\FLPgrad{\underline{\phi}}\cdot\FLPgrad{f}-\rho f)\,dV=\int\bigl[\epsO\,\bigl(\FLPdiv{(f\,\FLPgrad{\underline{\phi}})}-f\,\nabla^2\underline{\phi}\bigr)-\rho f\bigr]\,dV$, using $\FLPgrad{f}\cdot\FLPgrad{\underline{\phi}}=\FLPdiv{(f\,\FLPgrad{\underline{\phi}})}-f\,\nabla^2\underline{\phi}$.

Then the integral is split into the regions inside and outside the conductors, so that the divergence theorem can be applied at the conductor boundaries.

“There is an interesting case when the only charges are on conductors. Then \begin{equation*} U\stared=\frac{\epsO}{2}\int(\FLPgrad{\phi})^2\,dV. \end{equation*} Our minimum principle says that in the case where there are conductors set at certain given potentials, the potential between them adjusts itself so that integral $U\stared$ is least. What is this integral? The term $\FLPgrad{\phi}$ is the electric field, so the integral is the electrostatic energy. The true field is the one, of all those coming from the gradient of a potential, with the minimum total energy.

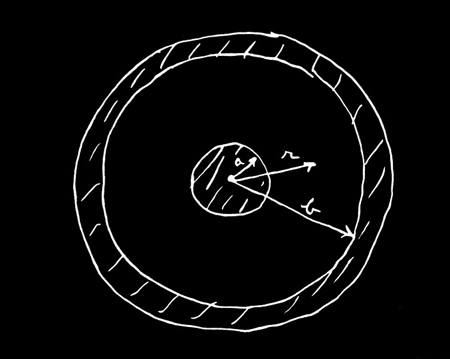

“I would like to use this result to calculate something particular to show you that these things are really quite practical. Suppose I take two conductors in the form of a cylindrical condenser (Fig. 19–12). The inside conductor has the potential $V$, and the outside is at the potential zero.

2024.6.9: The potential inside a conductor is constant, and only potential differences are meaningful. So the outer conductor is set to zero and the inner one to the difference, $V$.

7.5: see coaxial transmission line

“Suppose I don’t know the capacity of a cylindrical condenser. I can use this principle to find it. I just guess at the potential function $\phi$ until I get the lowest $C$. Suppose, for instance, I pick a potential that corresponds to a constant field. (You know, of course, that the field isn’t really constant here; it varies as $1/r$.) A field which is constant means a potential which goes linearly with distance. To fit the conditions at the two conductors, it must be \begin{equation*} \phi=V\biggl(1-\frac{r-a}{b-a}\biggr). \end{equation*} This function is $V$ at $r=a$, zero at $r=b$, and in between has a constant slope equal to $-V/(b-a)$. So what one does to find the integral $U\stared$ is multiply the square of this gradient by $\epsO/2$ and integrate over all volume. Let’s do this calculation for a cylinder of unit length. A volume element at the radius $r$ is $2\pi r\,dr$. Doing the integral, I find that my first try at the capacity gives \begin{equation*} \frac{1}{2}\,CV^2(\text{first try})=\frac{\epsO}{2} \int_a^b\frac{V^2}{(b-a)^2}\,2\pi r\,dr. \end{equation*} The integral is easy; it is just \begin{equation*} \pi V^2\biggl(\frac{b+a}{b-a}\biggr). \end{equation*} So I have a formula for the capacity which is not the true one but is an approximate job: \begin{equation*} \frac{C}{2\pi\epsO}=\frac{b+a}{2(b-a)}. \end{equation*} It is, naturally, different from the correct answer $C=2\pi\epsO/\ln(b/a)$, but it’s not too bad.

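2024.6.11: Applying Gauss's law to a cylinder of unit length, with surface charge density $\sigma$ on the inner conductor, so that $Q=2\pi a\sigma$ per unit length:

$2\pi rE=\frac{2\pi a\sigma}{\epsilon_0}=\frac{Q}{\epsilon_0}$

$V=\int_a^b E\,dr=\int_a^b\frac{a\sigma}{\epsilon_0 r}\,dr=\frac{a\sigma}{\epsilon_0}\ln\frac{b}{a}=\frac{Q}{2\pi\epsilon_0}\ln\frac{b}{a}=\frac{Q}{C}$, so $C=2\pi\epsilon_0/\ln(b/a)$.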

| $\displaystyle\frac{b}{a}$ | $\displaystyle\frac{C_{\text{true}}}{2\pi\epsO}$ | $\displaystyle\frac{C (\text{first approx.})}{2\pi\epsO}$ |
|---:|---:|---:|
| 2 | 1.4427 | 1.500 |
| 4 | 0.721 | 0.833 |
| 10 | 0.434 | 0.611 |
| 100 | 0.217 | 0.51 |
| 1.5 | 2.4663 | 2.50 |
| 1.1 | 10.492059 | 10.500000 |
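The two columns of this table follow from closed forms already derived: the exact $1/\ln(b/a)$ and the first-try $(b+a)/2(b-a)$, both in units of $2\pi\epsilon_0$. This short script is a sketch that recomputes them; the function names are our own.

```python
import math

def c_true(ratio):
    """Exact C/(2 pi eps0) per unit length of a cylindrical condenser: 1/ln(b/a)."""
    return 1.0 / math.log(ratio)

def c_first_try(ratio):
    """C/(2 pi eps0) from the linear (constant-field) trial potential: (b+a)/(2(b-a))."""
    return (ratio + 1.0) / (2.0 * (ratio - 1.0))

for ratio in (2, 4, 10, 100, 1.5, 1.1):
    print(f"{ratio:>5}  {c_true(ratio):10.6f}  {c_first_try(ratio):10.6f}")
```

Every first-try value lies above the true one, as the minimum principle requires.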

“Now I would like to tell you how to improve such a calculation. (Of course, you know the right answer for the cylinder, but the method is the same for some other odd shapes, where you may not know the right answer.) The next step is to try a better approximation to the unknown true $\phi$. For example, we might try a constant plus an exponential $\phi$, etc. But how do you know when you have a better approximation unless you know the true $\phi$? Answer: You calculate $C$; the lowest $C$ is the value nearest the truth. Let us try this idea out. Suppose that the potential is not linear but say quadratic in $r$—that the electric field is not constant but linear. The most general quadratic form that fits $\phi=0$ at $r=b$ and $\phi=V$ at $r=a$ is \begin{equation*} \phi=V\biggl[1+\alpha\biggl(\frac{r-a}{b-a}\biggr)- (1+\alpha)\biggl(\frac{r-a}{b-a}\biggr)^2 \biggr], \end{equation*} where $\alpha$ is any constant number. This formula is a little more complicated. It involves a quadratic term in the potential as well as a linear term. It is very easy to get the field out of it. The field is just \begin{equation*} E=-\ddt{\phi}{r}=-\frac{\alpha V}{b-a}+ 2(1+\alpha)\,\frac{(r-a)V}{(b-a)^2}. \end{equation*} Now we have to square this and integrate over volume. But wait a moment. What should I take for $\alpha$? I can take a parabola for the $\phi$; but what parabola? Here’s what I do: Calculate the capacity with an arbitrary $\alpha$. What I get is \begin{equation*} \frac{C}{2\pi\epsO}=\frac{a}{b-a} \biggl[\frac{b}{a}\biggl(\frac{\alpha^2}{6}+ \frac{2\alpha}{3}+1\biggr)+ \frac{1}{6}\,\alpha^2+\frac{1}{3}\biggr]. \end{equation*} It looks a little complicated, but it comes out of integrating the square of the field. Now I can pick my $\alpha$. I know that the truth lies lower than anything that I am going to calculate, so whatever I put in for $\alpha$ is going to give me an answer too big. 
But if I keep playing with $\alpha$ and get the lowest possible value I can, that lowest value is nearer to the truth than any other value. So what I do next is to pick the $\alpha$ that gives the minimum value for $C$. Working it out by ordinary calculus, I get that the minimum $C$ occurs for $\alpha=-2b/(b+a)$. Substituting that value into the formula, I obtain for the minimum capacity \begin{equation*} \frac{C}{2\pi\epsO}=\frac{b^2+4ab+a^2}{3(b^2-a^2)}. \end{equation*}
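To see the minimization over $\alpha$ done without the algebra, here is a sketch that evaluates $C/2\pi\epsilon_0=\int_a^b E^2 r\,dr$ (with $V=1$, $a=1$, unit length, from $\tfrac12 CV^2=\tfrac{\epsilon_0}{2}\int E^2\,2\pi r\,dr$) exactly for the trial field $E$ above, then scans $\alpha$ on a grid. The scan range, the grid spacing, and the function name are our own choices; the result can be compared with $\alpha=-2b/(b+a)$ and the closed-form minimum.

```python
import numpy as np

def capacity_quadratic(alpha, ratio):
    """C/(2 pi eps0) per unit length for the quadratic trial potential (a = 1, V = 1).

    The trial field is linear in r, E = p0 + p1*r, so integral_a^b E^2 r dr
    is evaluated exactly.  Works for scalar or NumPy-array alpha.
    """
    a, b = 1.0, float(ratio)
    L = b - a
    p1 = 2.0 * (1.0 + alpha) / L**2
    p0 = -alpha / L - p1 * a
    return (p0**2 * (b**2 - a**2) / 2
            + 2.0 * p0 * p1 * (b**3 - a**3) / 3
            + p1**2 * (b**4 - a**4) / 4)

alphas = np.linspace(-3.0, 1.0, 40001)   # arbitrary scan range and spacing
for ratio in (2, 10, 100):
    values = capacity_quadratic(alphas, ratio)
    k = np.argmin(values)
    print(ratio, alphas[k], -2 * ratio / (ratio + 1), values[k],
          (ratio**2 + 4 * ratio + 1) / (3 * (ratio**2 - 1)))
```

Setting $\alpha=-1$ removes the quadratic term and recovers the linear first try.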

“I’ve worked out what this formula gives for $C$ for various values of $b/a$. I call these numbers $C (\text{quadratic})$. Table 19–2 compares $C (\text{quadratic})$ with the true $C$.

| $\displaystyle\frac{b}{a}$ | $\displaystyle\frac{C_{\text{true}}}{2\pi\epsO}$ | $\displaystyle\frac{C (\text{quadratic})}{2\pi\epsO}$ |
|---:|---:|---:|
| 2 | 1.4427 | 1.444 |
| 4 | 0.721 | 0.733 |
| 10 | 0.434 | 0.475 |
| 100 | 0.217 | 0.347 |
| 1.5 | 2.4663 | 2.4667 |
| 1.1 | 10.492059 | 10.492063 |

“For example, when the ratio of the radii is $2$ to $1$, I have $1.444$, which is a very good approximation to the true answer, $1.4427$. Even for larger $b/a$, it stays pretty good—it is much, much better than the first approximation. It is even fairly good—only off by $10$ percent—when $b/a$ is $10$ to $1$. But when it gets to be $100$ to $1$—well, things begin to go wild. I get that $C$ is $0.347$ instead of $0.217$. On the other hand, for a ratio of radii of $1.5$, the answer is excellent; and for a $b/a$ of $1.1$, the answer comes out $10.492063$ instead of $10.492059$. Where the answer should be good, it is very, very good.
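The percentages quoted here can be checked directly from the closed-form minimum $(b^2+4ab+a^2)/3(b^2-a^2)$ and the exact $1/\ln(b/a)$. A small sketch, with function names of our own:

```python
import math

def c_quadratic(ratio):
    """Best quadratic-trial C/(2 pi eps0): (b^2 + 4ab + a^2) / (3 (b^2 - a^2)), with a = 1."""
    b = float(ratio)
    return (b * b + 4.0 * b + 1.0) / (3.0 * (b * b - 1.0))

for ratio in (2, 4, 10, 100, 1.5, 1.1):
    true = 1.0 / math.log(ratio)
    quad = c_quadratic(ratio)
    print(f"b/a = {ratio:>5}: true {true:.6f}, quadratic {quad:.6f}, "
          f"error {100 * (quad - true) / true:6.2f}%")
```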

“I have given these examples, first, to show the theoretical value of the principles of minimum action and minimum principles in general and, second, to show their practical utility—not just to calculate a capacity when we already know the answer. For any other shape, you can guess an approximate field with some unknown parameters like $\alpha$ and adjust them to get a minimum. You will get excellent numerical results for otherwise intractable problems.”
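One way to follow this advice with more than one parameter is a polynomial trial potential with several free coefficients. The sketch below is our own illustration under stated assumptions (a hypothetical polynomial basis, NumPy's Gauss-Legendre quadrature, $a=1$, unit length), not a method given in the lecture. Since $U\stared$ is quadratic in the coefficients, the minimizing coefficients solve a linear system. With zero free coefficients it reproduces the linear first try, with one it reproduces the quadratic family above, and each added coefficient can only lower the estimate (or leave it unchanged), never below the true value.

```python
import numpy as np

def ritz_capacity(ratio, n_params, n_nodes=40):
    """C/(2 pi eps0) per unit length from a polynomial trial potential (a = 1).

    With u = (r - a)/(b - a) and psi = phi/V, the hypothetical trial family is
        psi(u) = (1 - u) + sum_j c_j * u**(j + 1) * (1 - u),
    which equals 1 on the inner conductor and 0 on the outer one for any c_j.
    Then C/(2 pi eps0) = integral_0^1 psi'(u)**2 * w(u) du, w(u) = 1/(ratio - 1) + u.
    """
    x, wq = np.polynomial.legendre.leggauss(n_nodes)
    u = 0.5 * (x + 1.0)                  # Gauss nodes mapped from [-1, 1] to [0, 1]
    wq = 0.5 * wq
    w = 1.0 / (ratio - 1.0) + u          # comes from the 2 pi r dr volume element

    # Derivatives of the basis functions B_j(u) = u**(j + 1) * (1 - u)
    dB = np.zeros((n_params, u.size))
    for j in range(n_params):
        dB[j] = (j + 1) * u**j - (j + 2) * u**(j + 1)

    c = np.zeros(n_params)
    if n_params > 0:
        # The energy is quadratic in c; setting its gradient to zero gives M c = v.
        M = (dB * w * wq) @ dB.T
        v = (dB * w * wq) @ np.ones_like(u)
        c = np.linalg.solve(M, v)

    psi_prime = -1.0 + c @ dB
    return np.sum(psi_prime**2 * w * wq)

for ratio in (2.0, 10.0, 100.0):
    estimates = [ritz_capacity(ratio, n) for n in range(6)]
    print(ratio, 1.0 / np.log(ratio), [round(e, 4) for e in estimates])
```

Convergence is slowest for large $b/a$, where the true potential varies logarithmically and is hard to fit with a low-degree polynomial near the inner conductor.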

19–2 A note added after the lecture

“I should like to add something that I didn’t have time for in the lecture. (I always seem to prepare more than I have time to tell about.) As I mentioned earlier, I got interested in a problem while working on this lecture. I want to tell you what that problem is. Among the minimum principles that I could mention, I noticed that most of them sprang in one way or another from the least action principle of mechanics and electrodynamics. But there is also a class that does not. As an example, if currents are made to go through a piece of material obeying Ohm’s law, the currents distribute themselves inside the piece so that the rate at which heat is generated is as little as possible. Also we can say (if things are kept isothermal) that the rate at which energy is generated is a minimum. Now, this principle also holds, according to classical theory, in determining even the distribution of velocities of the electrons inside a metal which is carrying a current. The distribution of velocities is not exactly the equilibrium distribution [Chapter 40, Vol. I, Eq. (40.6)] because they are drifting sideways. The new distribution can be found from the principle that it is the distribution for a given current for which the entropy developed per second by collisions is as small as possible. The true description of the electrons’ behavior ought to be by quantum mechanics, however. The question is: Does the same principle of minimum entropy generation also hold when the situation is described quantum-mechanically? I haven’t found out yet.
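The simplest instance of the minimum-heat principle can be checked by hand: a current $I$ splitting between two resistors in parallel. This sketch uses hypothetical values and a lumped circuit rather than a continuous piece of material. It scans every possible split and picks the one with the smallest Joule heating rate $I_1^2R_1+I_2^2R_2$; the result agrees with the Ohm's-law split that makes the two voltage drops equal.

```python
import numpy as np

def heat_rate(i1, total, r1, r2):
    """Joule heating rate I1^2 R1 + I2^2 R2 when `total` splits as I1 + I2."""
    i2 = total - i1
    return i1**2 * r1 + i2**2 * r2

def min_heat_split(total, r1, r2, n=100001):
    """Scan every split on a fine grid and return the I1 with the least heating."""
    i1 = np.linspace(0.0, total, n)
    return i1[np.argmin(heat_rate(i1, total, r1, r2))]

total, r1, r2 = 3.0, 2.0, 4.0            # hypothetical current (A) and resistances (ohm)
i1_min = min_heat_split(total, r1, r2)
i1_ohm = total * r2 / (r1 + r2)          # Ohm's law: equal voltage drops, I1 R1 = I2 R2
print(i1_min, i1_ohm)
```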

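2024.6.10: Putting together the research scattered here and there, like blind men feeling an elephant, and sketching the overall picture: the structure-preservation theorem is called the least action principle, or the calculus of variations, but it is also a boundary-value problem.

1. Another expression of the structure-preservation theorem is the Gauss-Bonnet theorem.

A natural way to see it:

2. Take the potential to be the volume element of the manifold, and regard the Dirac operator as its ‘path’, that is, its motion.

3. The principal axes of the Gaussian curvature are the directions of the Dirac operator, the directions in which the electromagnetic field acts circularly. As one grows the other shrinks, and as one shrinks the other grows, complementing each other like two coupled pendulums.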

“The question is interesting academically, of course. Such principles are fascinating, and it is always worthwhile to try to see how general they are. But also from a more practical point of view, I want to know. I, with some colleagues, have published a paper in which we calculated by quantum mechanics approximately the electrical resistance felt by an electron moving through an ionic crystal like NaCl. [Feynman, Hellwarth, Iddings, and Platzman, “Mobility of Slow Electrons in a Polar Crystal,” Phys. Rev. 127, 1004 (1962).] But if a minimum principle existed, we could use it to make the results much more accurate, just as the minimum principle for the capacity of a condenser permitted us to get such accuracy for that capacity even though we had only a rough knowledge of the electric field.”

- Later chapters do not depend on the material of this special lecture—which is intended to be for “entertainment.”